I am running PySpark on Spark 1.3, in standalone mode with client deploy mode.

I am trying to investigate my Spark job by comparing it with jobs I ran in the past. I want to view their logs, the configuration settings under which they were submitted, and so on. But I'm running into trouble viewing the logs of a job after its context is closed.

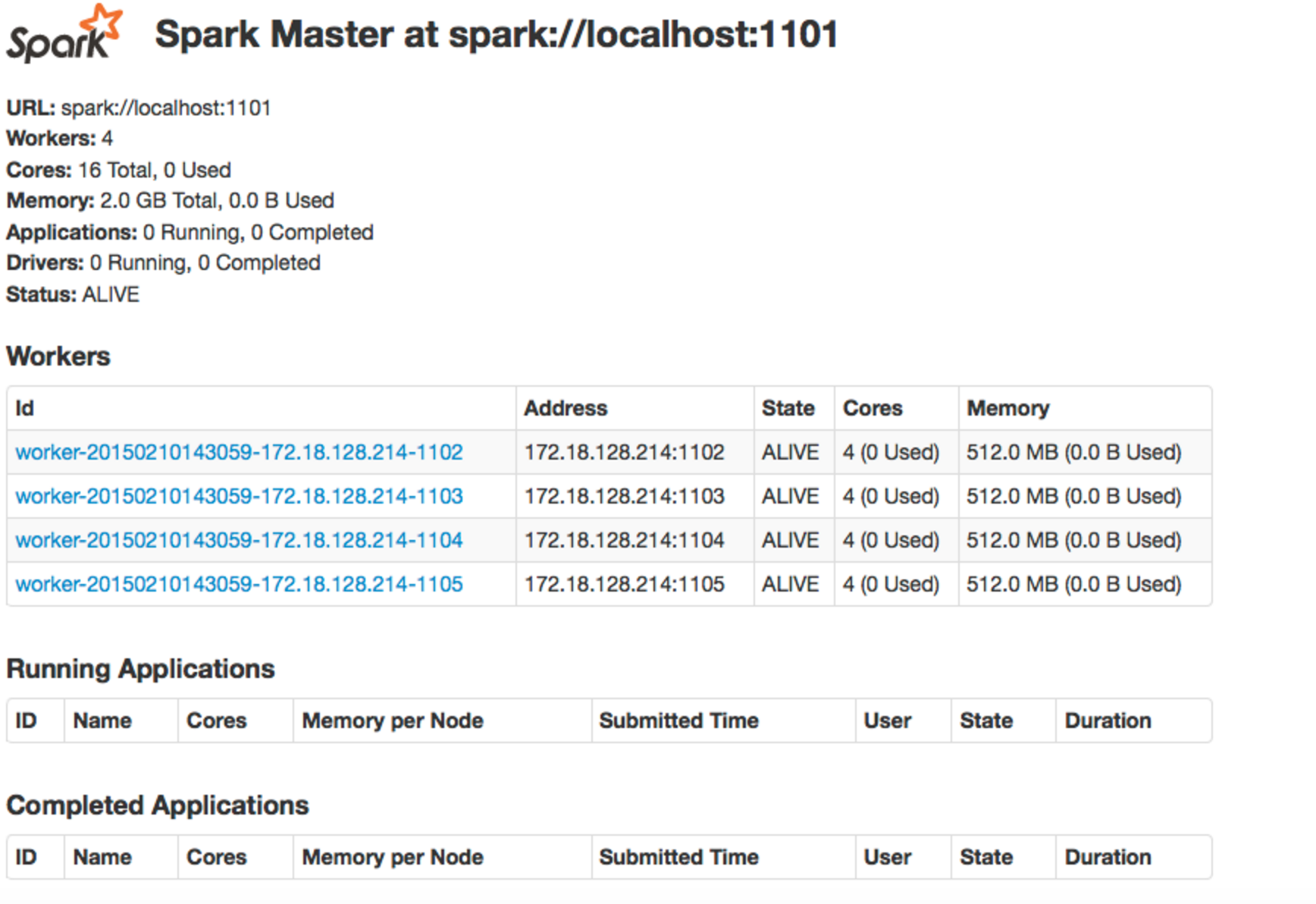

When I submit a job, of course I open a spark context. While the job is running, I'm able to open the spark web UI using ssh tunneling. And, I can access the forwarded port by localhost:<port no>. Then I can view the jobs currently running, and the ones that are completed, like this:

Then, if I wish to see the logs of a particular job, I can do so by using ssh tunnel port forwarding to see the logs on a particular port for a particular machine for that job.
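
For concreteness, this is roughly the kind of tunnel I mean. It is only a minimal sketch: the helper `tunnel_command`, the login `me@driver-host`, and the choice of forwarding to `localhost` on the remote side are placeholders for illustration, not my actual setup. Port 4040 is Spark's default port for a running application's UI; the ports for the per-machine logs differ.

```python
def tunnel_command(local_port, remote_host, remote_port, login):
    """Build an `ssh -L` command that forwards localhost:local_port
    to remote_host:remote_port as seen from the machine we log in to."""
    forward = "{}:{}:{}".format(local_port, remote_host, remote_port)
    # -N: do not run a remote command, just forward the port
    # -L: local port forwarding
    return ["ssh", "-N", "-L", forward, login]


# Hypothetical login; 4040 is the default port of the application UI.
cmd = tunnel_command(4040, "localhost", 4040, "me@driver-host")
print(" ".join(cmd))
```

With a command like this running, the UI is reachable in the browser at `localhost:4040` for as long as something is listening on the remote port.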

Then, sometimes the job fails, but the context is still open. When this happens, I am still able to see the logs by the above method.

But since I don't want to keep all of these contexts open at once, I close the context when the job fails. Once I close the context, the job appears under "Completed Applications" in the image above. Now, when I try to view the logs through the same SSH tunnel as before (`localhost:<port no>`), I get a "page not found" error.

How do I view the logs of a job after the context is closed? And what does this imply about the relationship between the Spark context and where the logs are kept? Thank you.

Again, I am running pyspark, spark 1.3, standalone mode, client mode.

spark context is closed, why is it that I can't view it in the web UI? Is it because it shuts down the traffic on that port when the context is closed? – Hedvah