I'm using Action Cable and Turbo Streams in a Rails 7.0.4 app, and in production the WebSocket connection to `/cable` is being closed unexpectedly.

- Ruby 3.0.5
- 64bit Amazon Linux 2/3.6.3

I'm implementing this chat with Turbo Streams and OpenAI:

https://gist.github.com/alexrudall/cb5ee1e109353ef358adb4e66631799d

The issue does not occur in development; everything works perfectly there.

Redis cable config (`config/cable.yml`):

```yaml
production:
  adapter: redis
  url: <%= "redis://#{ENV.fetch('REDIS_HOST', 'localhost')}:6379/1" %>
  channel_prefix: fist_bump_production
```
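To check what Action Cable actually receives, the ERB tag can be rendered before the YAML is parsed, which is roughly what Rails does when it loads `config/cable.yml`. This is a minimal sketch using only Ruby's standard library; the inlined YAML and the `resolved_cable_config` helper are hypothetical and only mirror the config above, and Rails' own loading path may differ in details.

```ruby
require "erb"
require "yaml"

# Hypothetical copy of the production block from config/cable.yml, inlined so
# the sketch runs on its own. The single-quoted heredoc keeps #{...} literal
# so that ERB, not Ruby string interpolation, evaluates it.
CABLE_YML = <<~'YAML'
  production:
    adapter: redis
    url: <%= "redis://#{ENV.fetch('REDIS_HOST', 'localhost')}:6379/1" %>
    channel_prefix: fist_bump_production
YAML

# Hypothetical helper: render the ERB tags first, then parse the YAML and
# return the block for the requested environment.
def resolved_cable_config(source, env_name = "production")
  rendered = ERB.new(source).result
  YAML.safe_load(rendered).fetch(env_name)
end

puts resolved_cable_config(CABLE_YML)["url"]
```

Running it on the Beanstalk instance, with the same environment variables the app process gets, should print the ElastiCache endpoint; if it prints `redis://localhost:6379/1`, `REDIS_HOST` is not set in that process.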

`REDIS_HOST` is the AWS ElastiCache endpoint, and I have confirmed that the same endpoint works for Sidekiq.

At first, requests to `/cable` returned 404 in production, so I added this to the nginx config:

```nginx
location /cable {
    proxy_pass http://my_app;
    proxy_http_version 1.1;
    proxy_set_header Upgrade websocket;
    proxy_set_header Connection "upgrade";
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
}
```
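One way to see whether the upgrade makes it through nginx and the load balancer is to send the WebSocket handshake by hand and look at the status line. `101 Switching Protocols` means the upgrade succeeded; anything else means it was refused or dropped somewhere between the client and Action Cable (possibly by Action Cable itself, for example if the `Origin` is not allowed). This is a minimal sketch using only Ruby's standard library: `check_cable` and the other helpers are hypothetical names, it speaks plain HTTP on port 80 only (port 443 would need an `OpenSSL::SSL::SSLSocket` around the TCP socket), and it does not send the subprotocol header a browser's Action Cable client would.

```ruby
require "socket"
require "securerandom"
require "digest/sha1"

# GUID fixed by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

# Value the server must echo back for a given Sec-WebSocket-Key.
# pack("m0") is strict Base64 (no line breaks).
def expected_accept(key)
  [Digest::SHA1.digest(key + WS_GUID)].pack("m0")
end

# Hypothetical helper: raw HTTP/1.1 upgrade request for the given path.
def upgrade_request(host, path, key, origin)
  [
    "GET #{path} HTTP/1.1",
    "Host: #{host}",
    "Upgrade: websocket",
    "Connection: Upgrade",
    "Sec-WebSocket-Key: #{key}",
    "Sec-WebSocket-Version: 13",
    "Origin: #{origin}",
    "", ""
  ].join("\r\n")
end

# Hypothetical helper: sends the handshake over plain TCP and returns
# [status_line, accept_header_matched].
def check_cable(host, path: "/cable", port: 80, origin: "http://#{host}")
  key = [SecureRandom.random_bytes(16)].pack("m0")
  socket = TCPSocket.new(host, port)
  socket.write(upgrade_request(host, path, key, origin))

  # Read response headers up to the blank line that ends them.
  headers = []
  while (line = socket.gets("\r\n")) && line != "\r\n"
    headers << line.chomp
  end

  status = headers.shift
  accept = headers.find { |h| h.downcase.start_with?("sec-websocket-accept:") }
  matched = !accept.nil? && accept.split(":", 2).last.strip == expected_accept(key)
  [status, matched]
ensure
  socket&.close
end
```

For example, `check_cable("my-env.example.com")` (hypothetical host) returns the status line and whether the accept header was correct. Running it against the instance directly and then against the load balancer can help narrow down which hop drops the upgrade.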

That got rid of the 404, but now the request ends with a 499, and I have to refresh the page to see the updates.

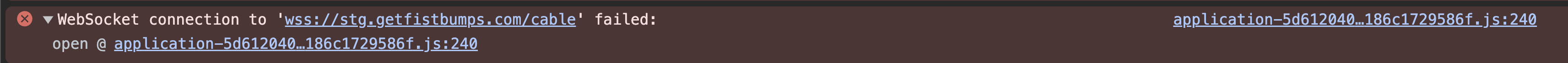

I also see this in the console.

I have also tried permitting all origins in the config:

```ruby
config.action_cable.allowed_request_origins = [ %r{http://*}, %r{https://*} ]
```
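Assuming Action Cable compares each entry of `allowed_request_origins` with the browser's `Origin` header using `===` (my reading of `ActionCable::Connection::Base`, worth confirming against the 7.0 source), the two patterns can be checked in plain Ruby. `origin_allowed?` and `TIGHTER_ORIGINS` are hypothetical names used only for illustration.

```ruby
# The two patterns from the config above.
ALLOWED_ORIGINS = [%r{http://*}, %r{https://*}].freeze

# Hypothetical helper. Assumes each entry is tested against the Origin header
# with ===; for a Regexp that means "matches anywhere in the string", and
# Regexp#=== returns false (rather than raising) when the origin is nil.
def origin_allowed?(origin, allowed = ALLOWED_ORIGINS)
  Array(allowed).any? { |pattern| pattern === origin }
end

origin_allowed?("https://example.com") # => true  (second pattern)
origin_allowed?("http://example.com")  # => true  (first pattern)
origin_allowed?("ws://example.com")    # => false
origin_allowed?(nil)                   # => false

# In these patterns `/*` means "zero or more slashes", not a wildcard.
# An anchored pattern states the intent more directly:
TIGHTER_ORIGINS = [%r{\Ahttps?://}].freeze
```

If that assumption holds, the original patterns already accept any `http://` or `https://` origin, so the origin check is probably not what closes the connection.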

- Increased stickiness on the Classic Load Balancer.

- Changed the listeners to TCP on both port 80 and port 443.

- Read about three pages' worth of Google results.

- Tried to get help from AWS "experts" (I had to explain what Rails was).

None of this has really helped. Any suggestions on how to get Action Cable working on Elastic Beanstalk?