This is my first experience with SSIS so bear with me... I am using SSIS to migrate tables from Oracle to SSMS, there are some very large tables I am trying to transfer (50 million rows +). SSIS is now completely freezing up and rebooting VS when I am just trying to save the package (not even running it). It keeps returning errors of insufficient memory, however, I am working on a remote server that has well over the RAM it takes to run this package.

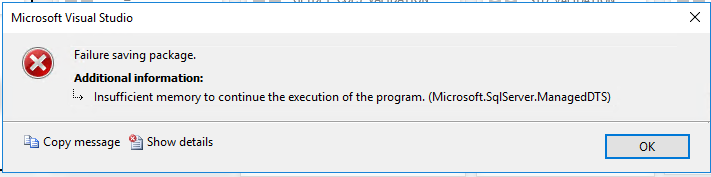

Error Message when trying to save

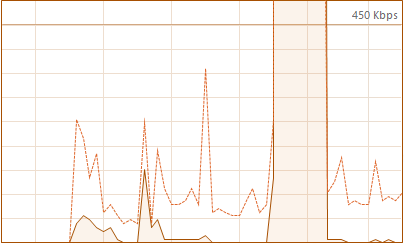

The only thing I can think of is when this package is attempting to run, my Ethernet Kbps are through the roof right as the package starts. Maybe need to update my pipeline?

Ethernet Graph

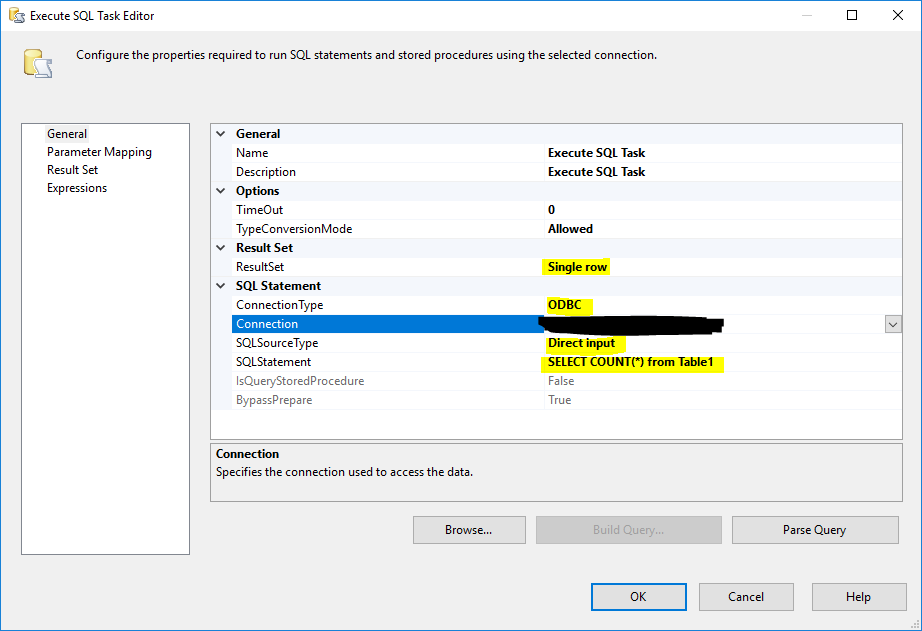

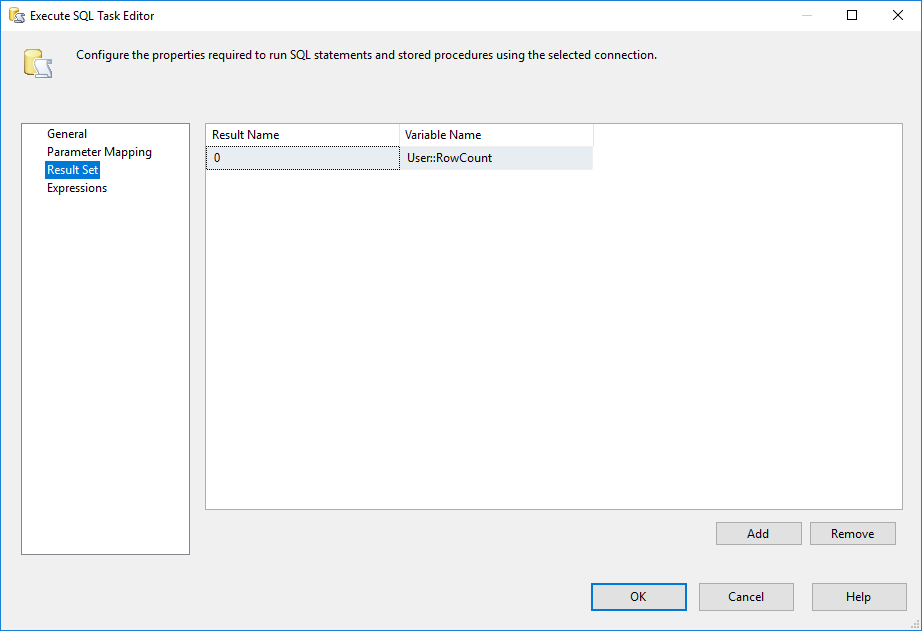

Also, my largest table will fail when importing due to BYTE sizes (again, not nearly using all the memory on the server). We are using ODBC Source as this was the only way we were able to get other large tables to upload more than 1 million rows.

I have tried creating a temporary buffer file to help with memory pressure, but that had no changes. I have changed the AutoAdjustBufferSize to True, no change in results. also changed DefaultBufferMaxRows and DefaultBufferSize.. no change.

ERRORS WHEN RUNNING LARGE TABLE:

Information: 0x4004300C at SRC_STG_TABLENAME, SSIS.Pipeline: Execute phase is beginning.

Information: 0x4004800D at SRC_STG_TABLENAME: The buffer manager failed a memory allocation call for 810400000 bytes, but was unable to swap out any buffers to relieve memory pressure. 2 buffers were considered and 2 were locked.

Either not enough memory is available to the pipeline because not enough are installed, other processes were using it, or too many buffers are locked.

Information: 0x4004800F at SRC_STG_TABLENAME: Buffer manager allocated 1548 megabyte(s) in 2 physical buffer(s).

Information: 0x40048010 at SRC_STG_TABLENAME: Component "ODBC Source" (60) owns 775 megabyte(s) physical buffer.

Information: 0x4004800D at SRC_STG_TABLENAME: The buffer manager failed a memory allocation call for 810400000 bytes, but was unable to swap out any buffers to relieve memory pressure. 2 buffers were considered and 2 were locked.

Either not enough memory is available to the pipeline because not enough are installed, other processes were using it, or too many buffers are locked.

Information: 0x4004800F at SRC_STG_TABLENAME: Buffer manager allocated 1548 megabyte(s) in 2 physical buffer(s).

Information: 0x40048010 at SRC_STG_TABLENAME: Component "ODBC Source" (60) owns 775 megabyte(s) physical buffer.

Information: 0x4004800D at SRC_STG_TABLENAME: The buffer manager failed a memory allocation call for 810400000 bytes, but was unable to swap out any buffers to relieve memory pressure. 2 buffers were considered and 2 were locked.

Either not enough memory is available to the pipeline because not enough are installed, other processes were using it, or too many buffers are locked.

Information: 0x4004800F at SRC_STG_TABLENAME: Buffer manager allocated 1548 megabyte(s) in 2 physical buffer(s).

Information: 0x40048010 at SRC_STG_TABLENAME: Component "ODBC Source" (60) owns 775 megabyte(s) physical buffer.

Error: 0xC0047012 at SRC_STG_TABLENAME: A buffer failed while allocating 810400000 bytes.

Error: 0xC0047011 at SRC_STG_TABLENAME: The system reports 26 percent memory load. There are 68718940160 bytes of physical memory with 50752466944 bytes free. There are 4294836224 bytes of virtual memory with 914223104 bytes free. The paging file has 84825067520 bytes with 61915041792 bytes free.

Information: 0x4004800F at SRC_STG_TABLENAME: Buffer manager allocated 1548 megabyte(s) in 2 physical buffer(s).

Information: 0x40048010 at SRC_STG_TABLENAME: Component "ODBC Source" (60) owns 775 megabyte(s) physical buffer.

Error: 0x279 at SRC_STG_TABLENAME, ODBC Source [60]: Failed to add row to output buffer.

Error: 0x384 at SRC_STG_TABLENAME, ODBC Source [60]: Open Database Connectivity (ODBC) error occurred.

Error: 0xC0047038 at SRC_STG_TABLENAME, SSIS.Pipeline: SSIS Error Code DTS_E_PRIMEOUTPUTFAILED. The PrimeOutput method on ODBC Source returned error code 0x80004005. The component returned a failure code when the pipeline engine called PrimeOutput(). The meaning of the failure code is defined by the component, but the error is fatal and the pipeline stopped executing. There may be error messages posted before this with more information about the failure.

This is really holding up my work. HELP!