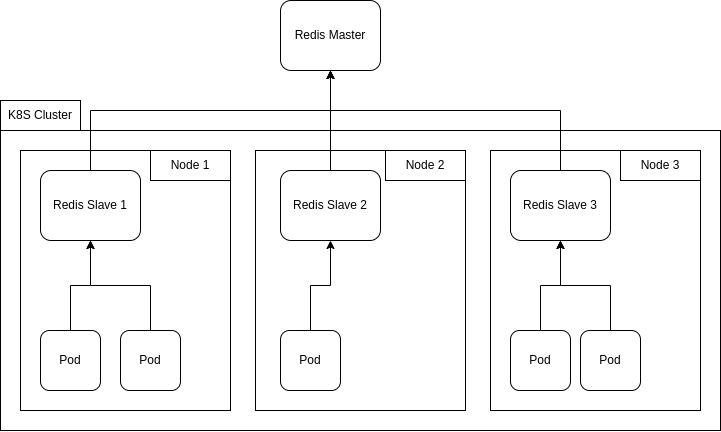

A diagram is always clearer than a few sentences, so here is what I would like to do:

To sum up:

- I want to have a Redis master instance outside (or inside, this is not relevant here) my K8S cluster

- I want to have a Redis slave instance per node replicating the master instance

- When a node is removed, I want its Redis slave pod to be unregistered from the master

- When a node is added, I want a Redis slave pod to be scheduled on that node and registered with the master

- I want all pods on a node to read only from the local Redis slave (the easy part, I think)
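
To make the list above more concrete, here is a minimal sketch of what I have in mind, assuming a DaemonSet is the right way to get one slave pod per node. It only builds the manifests as Python dicts. The names `redis-replica`, `NODE_IP`, the `redis:7` image tag and the master hostname are placeholders I made up for illustration, and I have not tested this against a real cluster.

```python
import json


def redis_replica_daemonset(master_host: str, master_port: int = 6379,
                            image: str = "redis:7") -> dict:
    """Build a DaemonSet manifest that runs one Redis replica per node.

    Assumption: each replica attaches itself to the master with
    `redis-server --replicaof <host> <port>`.
    """
    labels = {"app": "redis-replica"}  # hypothetical label
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "redis-replica"},  # hypothetical name
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "redis",
                        "image": image,
                        # Start Redis as a replica of the external master.
                        "command": ["redis-server", "--replicaof",
                                    master_host, str(master_port)],
                        # hostPort exposes the replica on the node's own IP.
                        "ports": [{"containerPort": 6379, "hostPort": 6379}],
                    }],
                },
            },
        },
    }


def local_redis_env() -> list:
    """Env entries for application pods so they can find the node-local replica.

    Assumption: the app reads NODE_IP (hypothetical variable name) and
    connects to <NODE_IP>:6379. The value comes from the downward API.
    """
    return [{
        "name": "NODE_IP",
        "valueFrom": {"fieldRef": {"fieldPath": "status.hostIP"}},
    }]


if __name__ == "__main__":
    # Placeholder hostname; replace with the real master address.
    manifest = redis_replica_daemonset("redis-master.example.internal")
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be piped to `kubectl apply -f -`, and the same structure could presumably be templated in a Helm chart. What I am unsure about is whether this is enough for clean registration and unregistration on the master side when nodes come and go.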

Why do I want such an architecture?

- I want to take advantage of Redis master/slave replication to avoid dealing with cache invalidation myself

- I want ultra-low latency calls to the Redis cache, so having one slave per node is the best I can get (calls stay on the node's local host network)

Is it possible to automate such deployments, using Helm for instance? Are there documentation resources for building such an architecture with clean, dynamic master/slave binding and unbinding?

And most of all, is this architecture a good idea for what I want to do? Is there any alternative that could be as fast?