Why not use the built-in md5 function?

`md5(e: Column): Column`: Calculates the MD5 digest of a binary column and returns the value as a 32-character hex string.

You could then use it as follows:

```scala
val new_df = load_df.withColumn("New_MD5_Column", md5($"Duration"))
```
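A minimal self-contained sketch of that call, assuming a local SparkSession and a hypothetical one-row DataFrame whose Duration column already holds strings. Spark implicitly casts a string argument of md5 to binary, so this variant runs; an int column fails as described next. The object name `Md5Demo` and the helper `withMd5` are illustrative only.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.md5

object Md5Demo {
  // Hypothetical helper: adds an MD5 column computed from the Duration column
  def withMd5(load_df: DataFrame): DataFrame =
    load_df.withColumn("New_MD5_Column", md5(load_df("Duration")))

  def main(args: Array[String]): Unit = {
    // Local session for experimentation only (assumes Spark is on the classpath)
    val spark = SparkSession.builder().master("local[*]").appName("md5-demo").getOrCreate()
    import spark.implicits._

    // Hypothetical input: Duration stored as a string, which md5 accepts
    val load_df = Seq("1").toDF("Duration")
    withMd5(load_df).show(false)
    spark.stop()
  }
}
```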

The column has to be of binary type; if it is an int, you will see the following error:

```
org.apache.spark.sql.AnalysisException: cannot resolve 'md5(Duration)' due to data type mismatch: argument 1 requires binary type, however, 'Duration' is of int type.;;
```

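You should then convert the column to an md5-compatible type using the bin function. Strictly speaking, bin returns a string (the value written out in binary digits), and Spark implicitly casts that string to binary when it is passed to md5. Keep in mind that the hash is then computed over the binary digits, e.g. "10" for a Duration of 2, rather than over the decimal text "2".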

`bin(e: Column): Column`: An expression that returns the string representation of the binary value of the given long column. For example, bin("12") returns "1100".
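For intuition, the conversion bin performs on a long value can be mimicked in plain Scala with `java.lang.Long.toBinaryString`. This is a sketch under the assumption that the two agree for non-negative values (edge cases such as negative numbers are not checked here); `BinDemo` is a hypothetical name.

```scala
object BinDemo {
  // Assumed plain-Scala equivalent of Spark's bin: long value -> string of binary digits
  def bin(value: Long): String = java.lang.Long.toBinaryString(value)

  def main(args: Array[String]): Unit = {
    println(bin(12L)) // 1100, matching the documented bin("12") example
    println(bin(1L))  // 1
  }
}
```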

A solution could then look as follows:

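```scala
import org.apache.spark.sql.functions.{bin, md5}
import spark.implicits._ // for the $"..." column syntax (already imported in spark-shell)

val solution = load_df.
  withColumn("bin_duration", bin($"Duration")).
  withColumn("md5", md5($"bin_duration"))
```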

```
scala> solution.show(false)
+--------+------------+--------------------------------+
|Duration|bin_duration|md5                             |
+--------+------------+--------------------------------+
|1       |1           |c4ca4238a0b923820dcc509a6f75849b|
+--------+------------+--------------------------------+
```
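To double-check the value in the md5 column outside Spark, a plain-Scala sketch can hash the UTF-8 bytes of the bin string with `java.security.MessageDigest`. It assumes Spark hashes the UTF-8 bytes of the string produced by bin; `Md5Check` and `md5Hex` are hypothetical names.

```scala
import java.nio.charset.StandardCharsets
import java.security.MessageDigest

object Md5Check {
  // Lower-case hex MD5 of the UTF-8 bytes of a string (32 characters, like md5's output)
  def md5Hex(s: String): String =
    MessageDigest.getInstance("MD5")
      .digest(s.getBytes(StandardCharsets.UTF_8))
      .map(b => "%02x".format(b & 0xff))
      .mkString

  def main(args: Array[String]): Unit = {
    // Duration = 1 -> bin gives "1" -> MD5 of the bytes of "1"
    val binDuration = java.lang.Long.toBinaryString(1L)
    println(md5Hex(binDuration)) // c4ca4238a0b923820dcc509a6f75849b
  }
}
```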

You could also "chain" the functions and do both the conversion and the MD5 calculation in a single withColumn, but I prefer to keep the steps separate: intermediate columns usually make it easier to track down an issue if one comes up.
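The chained variant nests bin inside md5 in one withColumn. A minimal sketch, assuming a local SparkSession and a hypothetical int-typed Duration column like the one in the question; `ChainedMd5` and `withMd5` are illustrative names.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{bin, md5}

object ChainedMd5 {
  // bin turns Duration into a string of binary digits,
  // which Spark then implicitly casts to binary for md5
  def withMd5(load_df: DataFrame): DataFrame =
    load_df.withColumn("md5", md5(bin(load_df("Duration"))))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("chained-md5").getOrCreate()
    import spark.implicits._

    // Hypothetical input with an int Duration column
    val load_df = Seq(1).toDF("Duration")
    withMd5(load_df).show(false)
    spark.stop()
  }
}
```

The trade-off is the one mentioned above: fewer columns, but no intermediate bin_duration column to inspect.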

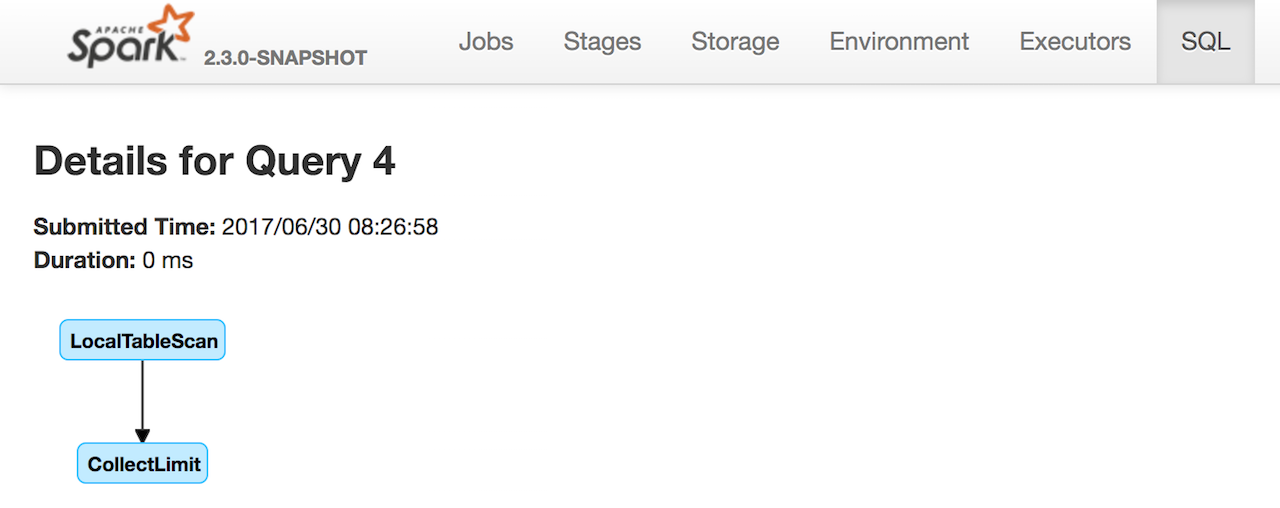

Performance

The reason to consider the built-in functions bin and md5 over custom user-defined functions (UDFs) is that you could get better performance: Spark SQL is in full control of built-in expressions and does not have to add extra steps to deserialize values from its internal row representation for your code and serialize the results back.
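For comparison, a hypothetical UDF that reproduces md5(bin(...)) by hand might look like the sketch below. Spark treats the lambda as an opaque function, which is the extra work the built-in functions avoid; calling `explain()` shows the difference in the plan. `UdfVsBuiltin`, `md5OfBinUdf`, and `compare` are illustrative names, and the sketch assumes a local SparkSession.

```scala
import java.nio.charset.StandardCharsets
import java.security.MessageDigest

import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.expressions.UserDefinedFunction
import org.apache.spark.sql.functions.{bin, md5, udf}

object UdfVsBuiltin {
  // Hand-written equivalent: binary-digit string -> MD5 hex, evaluated row by row in user code
  val md5OfBinUdf: UserDefinedFunction = udf { (d: Long) =>
    MessageDigest.getInstance("MD5")
      .digest(java.lang.Long.toBinaryString(d).getBytes(StandardCharsets.UTF_8))
      .map(b => "%02x".format(b & 0xff))
      .mkString
  }

  // Adds both versions side by side so they can be compared
  def compare(load_df: DataFrame): DataFrame =
    load_df
      .withColumn("md5_udf", md5OfBinUdf(load_df("Duration").cast("long")))
      .withColumn("md5_builtin", md5(bin(load_df("Duration"))))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("udf-vs-builtin").getOrCreate()
    import spark.implicits._

    val result = compare(Seq(1, 2, 3).toDF("Duration"))
    result.show(false)
    // The UDF shows up as an opaque call in the plan; bin and md5 as native expressions
    result.explain()
    spark.stop()
  }
}
```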


The performance gain may not be noticeable in a simple case like this one, but the built-in functions still require fewer imports and less code to work with.
