The following sent me on a wild chase through various ffmpeg options. As far as I could tell, none of this is really documented, so I hope it will be useful to others who are as puzzled as I am by these fairly cryptic behaviors.

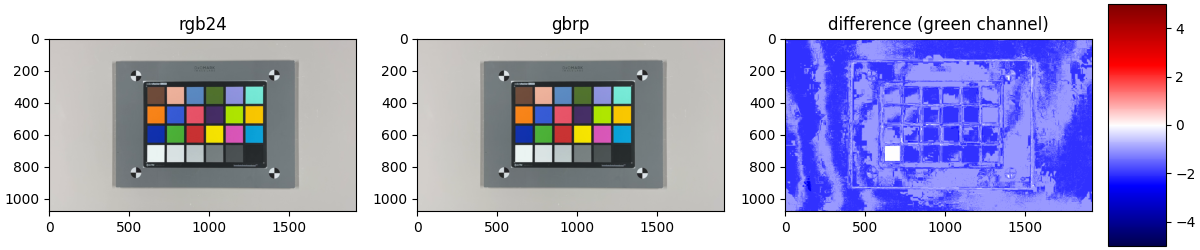

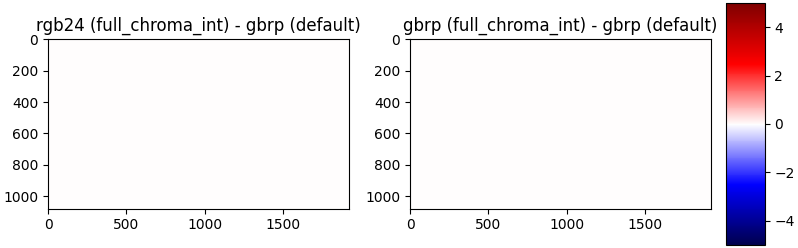

The difference is caused by the default parameters for libswscale, the ffmpeg component responsible for converting from YUV to RGB; in particular, adding the full_chroma_int+bitexact+accurate_rnd flags eliminates the difference between the frames:

    ffmpeg -i video.mp4 -frames 1 -color_range pc -f rawvideo -pix_fmt rgb24 -sws_flags full_chroma_int+bitexact+accurate_rnd output_good.rgb24
    ffmpeg -i video.mp4 -frames 1 -color_range pc -f rawvideo -pix_fmt gbrp -sws_flags full_chroma_int+bitexact+accurate_rnd output_good.gbrp

Note that various video forums tout these flags (or a subset thereof) as "better" without really explaining why, which doesn't satisfy me. They are indeed better for the issue here; let's see how.

First, the new outputs all align with the gbrp output for the default options, which is good news!

    buffer_rgb24_good = load_buffer_rgb24("output_good.rgb24")
    buffer_gbrp_good = load_buffer_gbrp("output_good.gbrp")

    diff1 = buffer_rgb24_good.astype(float) - buffer_gbrp.astype(float)
    diff2 = buffer_gbrp_good.astype(float) - buffer_gbrp.astype(float)

    fig, (ax1, ax2) = plt.subplots(ncols=2, constrained_layout=True, figsize=(8, 2.5))
    ax1.imshow(diff1[..., 1], vmin=-5, vmax=+5, cmap="seismic")
    ax1.set_title("rgb24 (new) - gbrp (default)")
    im = ax2.imshow(diff2[..., 1], vmin=-5, vmax=+5, cmap="seismic")
    ax2.set_title("gbrp (new) - gbrp (default)")
    plt.colorbar(im, ax=ax2)
    plt.show()

![Difference maps between new and default flags]()

The ffmpeg source code uses the following functions internally to do the conversions in libswscale/output.c:

- yuv2rgb_full_1_c_template (and other variants) for rgb24 with full_chroma_int
- yuv2rgb_1_c_template (and other variants) for rgb24 without full_chroma_int
- yuv2gbrp_full_X_c (and other variants) for gbrp, independently of full_chroma_int

An important conclusion is that the full_chroma_int flag seems to be ignored for the gbrp format but not for rgb24, and that its absence from the default flags is the main cause of the uniform bias in the rgb24 output.

Note that for non-rawvideo outputs, ffmpeg may pick a supported pixel format based on the chosen output format, so either code path might be used by default without the user being aware of it.

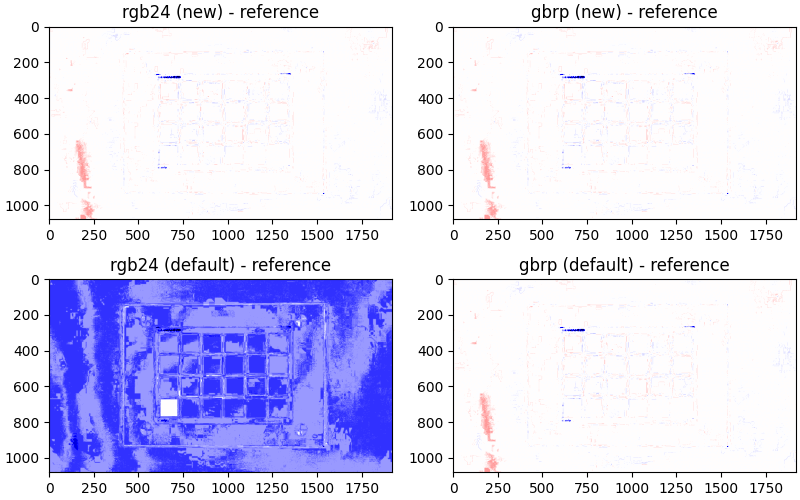

An additional question is: are these the correct values? In other words, is it possible that both are biased in the same way? Using the colour-science Python package, we can convert YUV data to RGB with a different implementation than ffmpeg to gain more confidence.
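
To make explicit what such a conversion computes, here is a minimal NumPy sketch of the textbook BT.709 conversion from 8-bit limited-range Y'CbCr to 8-bit full-range RGB. The function name `ycbcr709_limited_to_rgb_full` is hypothetical, and this is neither ffmpeg's fixed-point implementation nor the colour-science one; it only assumes the standard BT.709 luma weights and the usual 16-235 / 16-240 legal ranges.

```python
import numpy as np

# BT.709 luma weights; Kg follows from Kr + Kg + Kb = 1.
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def ycbcr709_limited_to_rgb_full(ycbcr):
    """Convert 8-bit limited-range BT.709 Y'CbCr (..., 3) to 8-bit full-range RGB.

    Hypothetical helper for illustration only (floating point, no dithering).
    """
    ycbcr = np.asarray(ycbcr, dtype=np.float64)
    # Undo the limited-range quantisation: Y' in [16, 235], Cb/Cr in [16, 240].
    y = (ycbcr[..., 0] - 16.0) / 219.0
    cb = (ycbcr[..., 1] - 128.0) / 224.0
    cr = (ycbcr[..., 2] - 128.0) / 224.0
    # Invert Cb = (B - Y) / (2 (1 - Kb)) and Cr = (R - Y) / (2 (1 - Kr)).
    r = y + 2.0 * (1.0 - KR) * cr
    b = y + 2.0 * (1.0 - KB) * cb
    # Green follows from Y = Kr R + Kg G + Kb B.
    g = (y - KR * r - KB * b) / KG
    rgb = np.stack([r, g, b], axis=-1)
    # Scale to the 8-bit full range, round to nearest, and clip.
    return np.clip(np.round(rgb * 255.0), 0, 255).astype(np.uint8)


# Legal-range black and white map to full-range black and white.
pixels = np.array([[[16, 128, 128], [235, 128, 128]]], dtype=np.uint8)
rgb = ycbcr709_limited_to_rgb_full(pixels)
```

Rounding to nearest at the very end is a design choice of this sketch; an implementation that truncates instead, or rounds at intermediate steps, can differ from it by a code value or so.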

Ffmpeg can output raw YUV frames in the native format, which can be decoded provided you know how they're laid out.

    $ ffmpeg -i video.mp4 -frames 1 -f rawvideo -pix_fmt yuv444p output.yuv
    ...
    Output #0, rawvideo, to 'output.yuv':
    ...
    Stream #0:0(und): Video: rawvideo... yuv444p

We can read that with Python:

    import numpy as np

    def load_buffer_yuv444p(path, width=1920, height=1080):
        """Load a yuv444p 8-bit raw buffer from a file"""
        with open(path, "rb") as f:
            data = np.frombuffer(f.read(), dtype=np.uint8)
        # Planar layout: full Y plane, then U, then V; move the planes to the last axis
        img_yuv444 = np.moveaxis(data.reshape((3, height, width)), 0, 2)
        return img_yuv444

    buffer_yuv = load_buffer_yuv444p("output.yuv")
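
The same approach extends to other planar layouts. As a minimal sketch, a raw yuv420p frame (for instance one written with `-pix_fmt yuv420p`) could be read as follows. `load_buffer_yuv420p` is a hypothetical helper, and the nearest-neighbour chroma upsampling is an assumption made for simplicity: it will not match ffmpeg's own chroma interpolation exactly.

```python
import numpy as np


def load_buffer_yuv420p(path, width=1920, height=1080):
    """Load one 8-bit yuv420p raw frame and return it as an (H, W, 3) array.

    Hypothetical helper: chroma is upsampled by simple pixel repetition.
    """
    # Planar layout: a full-resolution Y plane, followed by U and V planes
    # subsampled by 2 horizontally and vertically (sizes rounded up).
    cw, ch = (width + 1) // 2, (height + 1) // 2
    n_y, n_c = width * height, cw * ch
    data = np.fromfile(path, dtype=np.uint8, count=n_y + 2 * n_c)
    if data.size != n_y + 2 * n_c:
        raise ValueError("file too short for one yuv420p frame")
    y = data[:n_y].reshape(height, width)
    u = data[n_y:n_y + n_c].reshape(ch, cw)
    v = data[n_y + n_c:].reshape(ch, cw)
    # Nearest-neighbour upsampling: repeat each chroma sample in a 2x2 block,
    # then crop in case the frame dimensions are odd.
    u_full = u.repeat(2, axis=0).repeat(2, axis=1)[:height, :width]
    v_full = v.repeat(2, axis=0).repeat(2, axis=1)[:height, :width]
    return np.stack([y, u_full, v_full], axis=-1)
```

The returned array has the same (height, width, 3) shape as the one produced by `load_buffer_yuv444p`, so it can be passed to the same conversion code.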

Then this can be converted to RGB:

    import colour

    rgb_ref = colour.YCbCr_to_RGB(buffer_yuv, colour.WEIGHTS_YCBCR["ITU-R BT.709"],
                                  in_bits=8, in_legal=True, in_int=True,
                                  out_bits=8, out_legal=False, out_int=True)

...and used as a reference:

    diff1 = buffer_rgb24_good.astype(float) - rgb_ref.astype(float)
    diff2 = buffer_gbrp_good.astype(float) - rgb_ref.astype(float)
    diff3 = buffer_rgb24.astype(float) - rgb_ref.astype(float)
    diff4 = buffer_gbrp.astype(float) - rgb_ref.astype(float)

    fig, axes = plt.subplots(ncols=2, nrows=2, constrained_layout=True, figsize=(8, 5))
    im = axes[0, 0].imshow(diff1[..., 1], vmin=-5, vmax=+5, cmap="seismic")
    axes[0, 0].set_title("rgb24 (new) - reference")
    im = axes[0, 1].imshow(diff2[..., 1], vmin=-5, vmax=+5, cmap="seismic")
    axes[0, 1].set_title("gbrp (new) - reference")
    im = axes[1, 0].imshow(diff3[..., 1], vmin=-5, vmax=+5, cmap="seismic")
    axes[1, 0].set_title("rgb24 (default) - reference")
    im = axes[1, 1].imshow(diff4[..., 1], vmin=-5, vmax=+5, cmap="seismic")
    axes[1, 1].set_title("gbrp (default) - reference")
    plt.show()

![Comparison between rgb24/gbrp and old/new flags with reference]()

Some differences remain, due to slightly different interpolation methods and rounding errors, but there is no uniform bias, so the two implementations mostly agree.
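
To put numbers on "no uniform bias" rather than judging it from the plots alone, one option is to summarise each difference per channel. This is a small sketch, and `bias_summary` is a hypothetical helper, not part of any library: a mean far from zero would point to a systematic offset, while a near-zero mean with a small spread is what rounding noise should look like.

```python
import numpy as np


def bias_summary(a, b):
    """Per-channel statistics of a - b for two (H, W, C) images.

    Hypothetical helper: inputs are cast to float first so that
    8-bit values do not wrap around when subtracted.
    """
    diff = np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64)
    flat = diff.reshape(-1, diff.shape[-1])
    return {
        "mean": flat.mean(axis=0),            # systematic offset (uniform bias)
        "std": flat.std(axis=0),              # spread around that offset
        "max_abs": np.abs(flat).max(axis=0),  # worst-case deviation
    }
```

For example, `bias_summary(buffer_rgb24, rgb_ref)` and `bias_summary(buffer_rgb24_good, rgb_ref)` could be compared side by side, using the buffers loaded earlier.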

(Note: in this example, the output.yuv file is in yuv444p, which ffmpeg converted automatically from the native yuv420p format in the command line above, without going through a full RGB to YUV conversion. A more complete test would do all the previous conversions from a single raw YUV frame instead of a regular video, to better isolate the differences.)