The COCO dataset is too large for me to upload to Google Colab. Is there a way to download the dataset directly in Google Colab?

You can download it directly with wget

!wget http://images.cocodataset.org/zips/train2017.zip

Also, you should use a GPU instance, which gives you more disk space (350 GB).
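If you would rather stay in Python than shell out to wget, the download step could be sketched roughly as below. This is only a minimal sketch using the standard library: the helper names `free_gb` and `download`, the destination path, and the `min_free_gb` threshold are hypothetical and not part of any COCO or Colab API.

```python
import os
import shutil
import urllib.request


def free_gb(path="."):
    """Return the free disk space, in GB, on the filesystem containing `path`."""
    return shutil.disk_usage(path).free / 1e9


def download(url, dest_path, min_free_gb=0.0):
    """Stream `url` to `dest_path`, refusing to start if free space is too low.

    `min_free_gb` is a hypothetical safety threshold chosen by the caller.
    """
    dest_dir = os.path.dirname(os.path.abspath(dest_path))
    os.makedirs(dest_dir, exist_ok=True)
    if free_gb(dest_dir) < min_free_gb:
        raise OSError(f"less than {min_free_gb} GB free in {dest_dir}")
    # Copy in chunks so the whole archive is never held in memory.
    with urllib.request.urlopen(url) as response, open(dest_path, "wb") as out:
        shutil.copyfileobj(response, out)
    return dest_path


# Example call (commented out because it starts a multi-gigabyte download;
# the path and threshold are illustrative assumptions):
# download("http://images.cocodataset.org/zips/train2017.zip",
#          "/content/train2017.zip", min_free_gb=50)
```

Checking free space first is a design choice rather than a requirement; it just fails early instead of halfway through a long download.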

Another approach is to upload only the annotations file to Google Colab; there is no need to download the images at all. We will use the COCO API (pycocotools). Then, when preparing an image, instead of reading the file from Drive or a local folder, you can read it straight from its URL!

# io is skimage.io, as in the COCO API demo notebook

import skimage.io as io

# The usual method: read from a local folder or Drive

I = io.imread('%s/images/%s/%s' % (dataDir, dataType, img['file_name']))

# Instead, use this: load the image from its URL

I = io.imread(img['coco_url'])

This method will save you plenty of disk space, download time, and effort. However, you will need a working internet connection during training to fetch the images (which of course you have, since you are using Colab).

If you are interested in working with the COCO dataset, you can have a look at my post on Medium.
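To make the URL-based approach a bit more concrete, here is a minimal sketch that reads only the annotations file and fetches images on demand. It assumes the standard COCO annotation layout, where each entry of the top-level `images` list has an `id`, a `file_name`, and a `coco_url`. The helper names `load_image_urls` and `fetch_image_bytes` and the example path are hypothetical, and the sketch uses the standard library rather than pycocotools so that it stays self-contained.

```python
import json
import urllib.request


def load_image_urls(annotation_path):
    """Map each image id to its `coco_url` from a COCO annotations JSON file."""
    with open(annotation_path, "r", encoding="utf-8") as f:
        annotations = json.load(f)
    return {img["id"]: img["coco_url"] for img in annotations["images"]}


def fetch_image_bytes(url, timeout=30):
    """Download one image and return its raw (still encoded) bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


# Example use (commented out because it needs the annotations file and network;
# the path is an assumption about where you uploaded the file):
# urls = load_image_urls("annotations/instances_train2017.json")
# some_id = next(iter(urls))
# raw = fetch_image_bytes(urls[some_id])
```

The returned bytes are still JPEG-encoded; `io.imread(img['coco_url'])` does the fetch and the decode in one step.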

You can download it to Google Drive and then mount the drive in Colab:

from google.colab import drive

drive.mount('/content/drive')

Then you can change to the folder containing the dataset, for example:

import os

# Absolute path, so it works regardless of the current working directory

os.chdir("/content/drive/My Drive/cocodataset")

Keeping the dataset on Drive is better if you want to reuse it later. Also, unzip the archive from Colab (with !unzip), because using a zip extractor on Drive takes longer. I've tried :D
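The unzip step can also be done from Python once Drive is mounted. The following is a minimal sketch, not a replacement for !unzip: the helper name `extract_archive` and the paths in the example call are hypothetical, and it relies only on the standard `zipfile` module.

```python
import os
import zipfile


def extract_archive(zip_path, dest_dir):
    """Extract `zip_path` into `dest_dir` and return the number of files written.

    Files that already exist with the expected size are skipped, so the
    function can be re-run after an interrupted session.
    """
    os.makedirs(dest_dir, exist_ok=True)
    written = 0
    with zipfile.ZipFile(zip_path) as archive:
        for member in archive.infolist():
            target = os.path.join(dest_dir, member.filename)
            if member.is_dir():
                os.makedirs(target, exist_ok=True)
                continue
            if os.path.isfile(target) and os.path.getsize(target) == member.file_size:
                continue  # already extracted
            archive.extract(member, dest_dir)
            written += 1
    return written


# Example call (paths are assumptions about your Drive layout):
# extract_archive("/content/drive/My Drive/cocodataset/train2017.zip",
#                 "/content/drive/My Drive/cocodataset")
```

Skipping files that are already present is a convenience for Colab sessions that disconnect partway through; drop that check if you always want a clean extraction.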

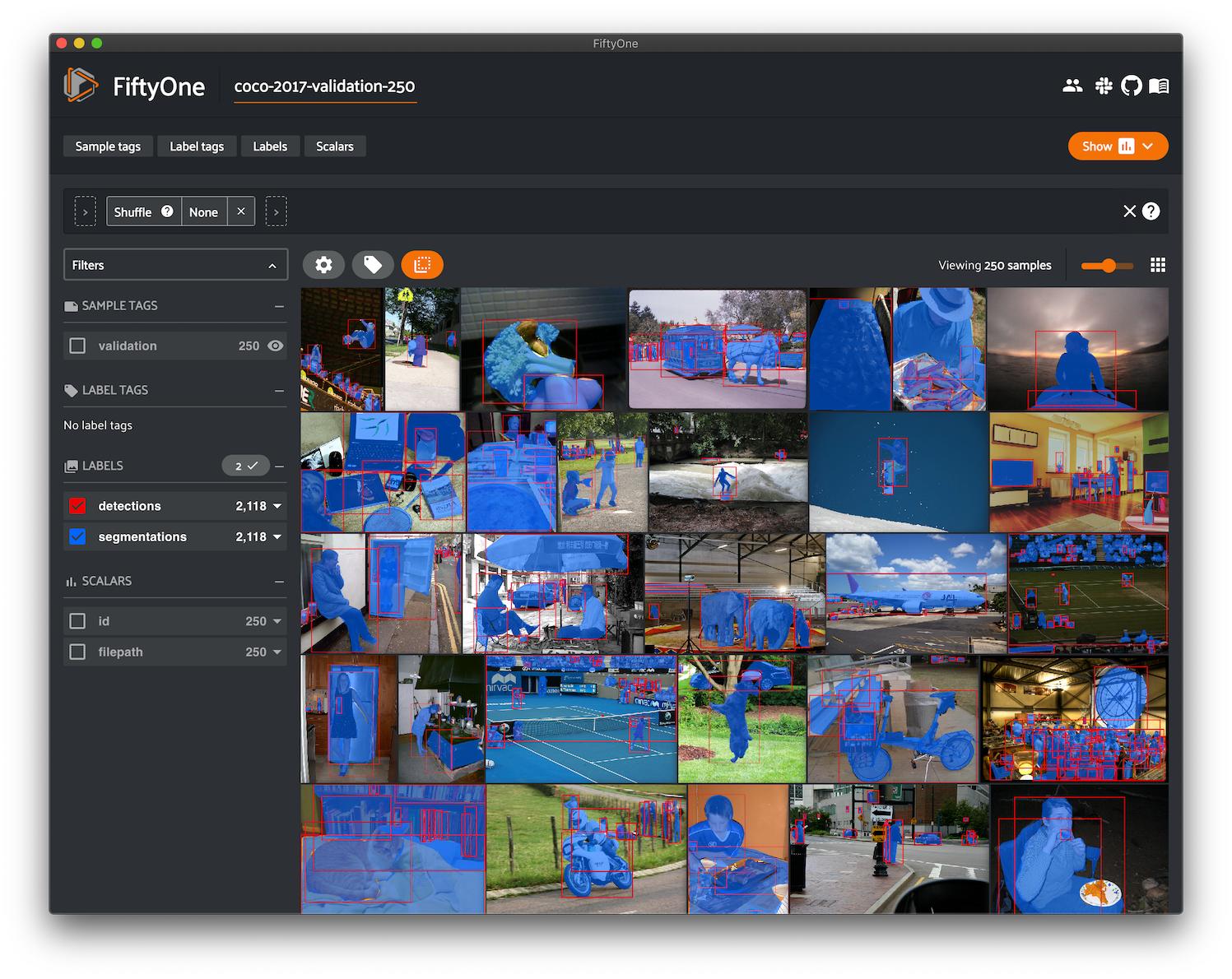

These days, the easiest way to download COCO is to use the Python tool FiftyOne (the fiftyone package). It lets you download, visualize, and evaluate the dataset, as well as any subset you are interested in.

It also works directly in Colab so you can perform your entire workflow there.

import fiftyone as fo
import fiftyone.zoo as foz

# Only the required images will be downloaded (if necessary).
# By default, only detections are loaded.
dataset = foz.load_zoo_dataset(
    "coco-2017",
    splits=["validation", "train"],
    classes=["person", "car"],
    # max_samples=50,
)

# Visualize the dataset in the FiftyOne App
session = fo.launch_app(dataset)
