If your original data has negative values, a zero point can offset the range so that they can be stored in an unsigned integer. With a zero point of 128, unscaled negative values -127 to -1 are represented by 1 to 127, and non-negative values 0 to 127 are represented by 128 to 255. Note the quantized value 0 (which would correspond to input -128 here) is intentionally left unused to keep the range symmetric around the zero point, hence a usable range of 256 - 1 = 255 values instead of 256. Larger real values can then be mapped using the scale factor.
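The offset described above can be sketched in a few lines of Python. This is only an illustration of the scale-free case (scale = 1); the names `ZERO_POINT`, `to_unsigned`, and `to_signed` are made up for this example.

```python
ZERO_POINT = 128  # zero point from the paragraph above


def to_unsigned(value: int) -> int:
    """Shift a signed value in [-127, 127] into the unsigned range [1, 255]."""
    if not -127 <= value <= 127:
        raise ValueError("value outside the symmetric range [-127, 127]")
    return value + ZERO_POINT


def to_signed(stored: int) -> int:
    """Undo the shift; the stored value 0 is rejected because it is unused."""
    if not 1 <= stored <= 255:
        raise ValueError("stored value outside the used range [1, 255]")
    return stored - ZERO_POINT


# Endpoints and the zero point itself.
mapped = [to_unsigned(v) for v in (-127, -1, 0, 127)]
```

Here `mapped` holds the stored values for -127, -1, 0, and 127, which land on 1, 127, 128, and 255 respectively.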

For example, given an input tensor with data ranging from -1000 to +1000 (inclusive at both ends) and an element in it with value 39.215686275, that element's quantized value would be 133 when using 128 as the zero point:

quantizedValue = round(realValue / scale + quantizedZeroPoint)

## round(39.215686275 / 7.843137255 + 128 ) = 133

## round(0 / 7.843137255 + 128 ) = 128

## round(1000 / 7.843137255 + 128 ) = 255

## round(-1000 / 7.843137255 + 128 ) = 1

Using:

## Determine the maximum range of the data (either known or via reduce min/max):

realRangeMinValue = -1000

realRangeMaxValue = 1000

## Use a zero point halfway through the quantized data type's range.

## Note 128 (= 256/2) is symmetric, with 127 values on either side, as q=0 isn't really used.

quantizedRange = 2^8 - 1 = 255

quantizedRangeMinValue = 1

quantizedRangeMaxValue = 255

quantizedZeroPoint = 128

## Precompute scale, using the same zero point and scale for the entire tensor.

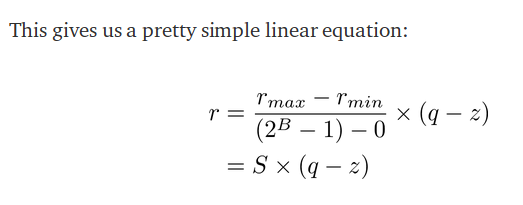

scale = (realRangeMaxValue - realRangeMinValue) / quantizedRange

## = (1000 - -1000 ) / 255

## = 7.843137255

## Alternatively, you could precompute the inverse scale and multiply rather than divide

## (inverseScale = 1 / scale = 0.1275).
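Putting the parameters and the quantization formula together, here is a rough Python sketch. The function names and the clamping step are my own additions rather than part of the formulas above. Clamping is included because, with the exact (unrounded) scale, ±1000 lands on a rounding boundary (255.5 and 0.5). Python's built-in `round` rounds halves to even, so this sketch rounds half up with `math.floor(x + 0.5)` instead.

```python
import math


def compute_scale(real_min: float, real_max: float, quantized_range: int = 255) -> float:
    """scale = (realRangeMaxValue - realRangeMinValue) / quantizedRange"""
    return (real_max - real_min) / quantized_range


def quantize(real_value: float, scale: float, zero_point: int = 128,
             q_min: int = 1, q_max: int = 255) -> int:
    """quantizedValue = round(realValue / scale + quantizedZeroPoint), then clamp."""
    q = math.floor(real_value / scale + zero_point + 0.5)  # round half up
    return max(q_min, min(q_max, q))  # clamping is an assumption added here


scale = compute_scale(-1000.0, 1000.0)  # roughly 7.843137255
inverse_scale = 1.0 / scale             # roughly 0.1275

quantized = [quantize(v, scale) for v in (39.215686275, 0.0, 1000.0, -1000.0)]
```

With these inputs `quantized` should match the worked values above (133, 128, 255, 1). Multiplying by `inverse_scale` instead of dividing by `scale` gives the same results up to floating-point error.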

Conversely, to convert from a quantized value back to a real value:

realValue = (quantizedValue - quantizedZeroPoint) * scale

## (133 - 128 ) * 7.843137255 = 39.215686275

## (128 - 128 ) * 7.843137255 = 0

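## (255 - 128 ) * 7.843137255 = 996.078431385 (not exactly 1000, due to rounding during quantization)

## (1 - 128 ) * 7.843137255 = -996.078431385 (not exactly -1000, due to rounding during quantization)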
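The reverse direction, as a small Python sketch under the same assumptions (zero point 128, scale of 2000 / 255). The name `dequantize` is illustrative only. Values that were exact multiples of the scale, such as 39.215686275, round-trip cleanly. The endpoints come back as roughly ±996.08, because 127 steps of this scale fall just short of 1000.

```python
def dequantize(quantized_value: int, scale: float, zero_point: int = 128) -> float:
    """realValue = (quantizedValue - quantizedZeroPoint) * scale"""
    return (quantized_value - zero_point) * scale


scale = (1000.0 - -1000.0) / 255  # same scale as in the example above

# Recover real values for the four quantized values used in the example.
recovered = {q: dequantize(q, scale) for q in (133, 128, 255, 1)}

# Worst-case error at the endpoints is about half a quantization step.
endpoint_error = 1000.0 - recovered[255]
```

If exact recovery of the endpoints matters, one option is to derive the scale from the 127 steps on each side of the zero point rather than from 255. That changes the numbers in the example above, so treat it as a separate design choice.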