This is a classic situation for dilate. The idea is that adjacent text corresponds with the same question while text that is farther away is part of another question. Whenever you want to connect multiple items together, you can dilate them to join adjacent contours into a single contour. Here's a simple approach:

Obtain binary image. Load the image, convert to grayscale, Gaussian blur, then Otsu's threshold to obtain a binary image.

Remove small noise and artifacts. We create a rectangular kernel and morph open to remove small noise and artifacts in the image.

Connect adjacent words together. We create a larger rectangular kernel and dilate to merge individual contours together.

Detect questions. From here we find contours, sort contours from top-to-bottom using imutils.sort_contours(), filter with a minimum contour area, obtain the rectangular bounding rectangle coordinates and highlight the rectangular contours. We then crop each question using Numpy slicing and save the ROI image.

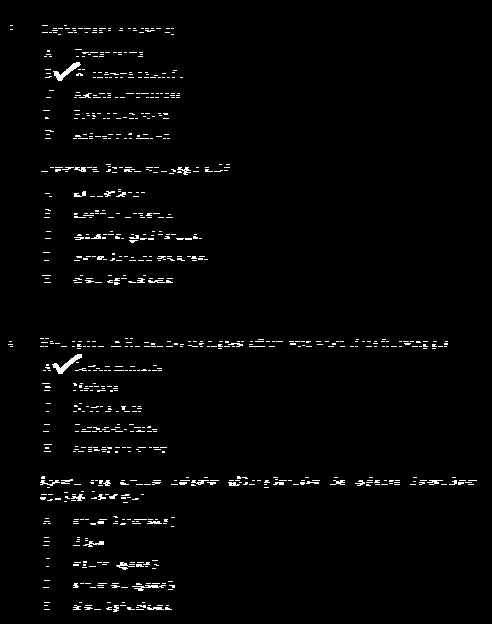

Otsu's threshold to obtain a binary image

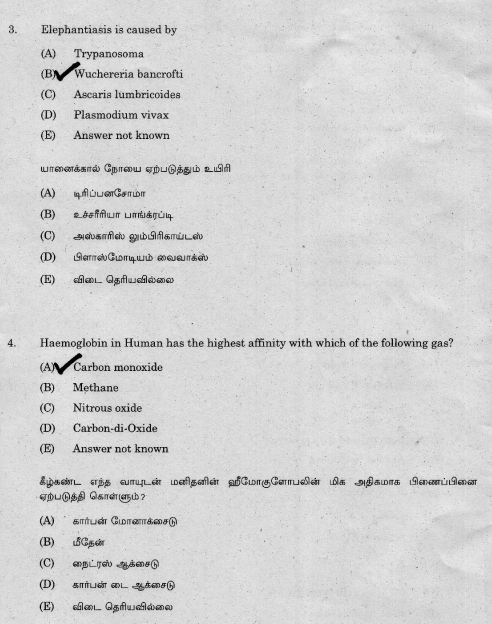

![enter image description here]()

Here's where the interesting section happens. We assume that adjacent text/characters are part of the same question so we merge individual words into a single contour. A question is a section of words that are close together so we dilate to connect them all together.

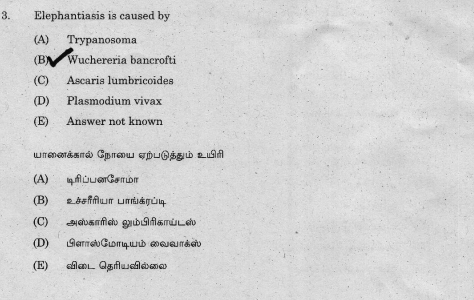

![enter image description here]()

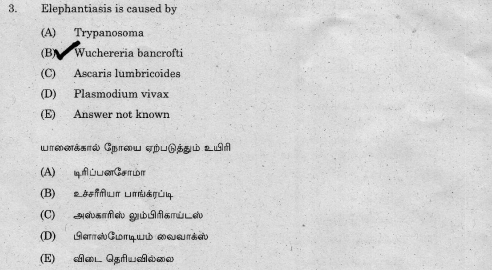

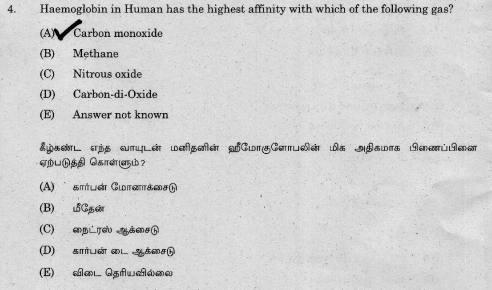

Individual questions highlighted in green

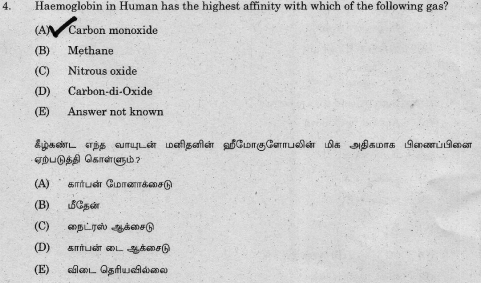

![enter image description here]()

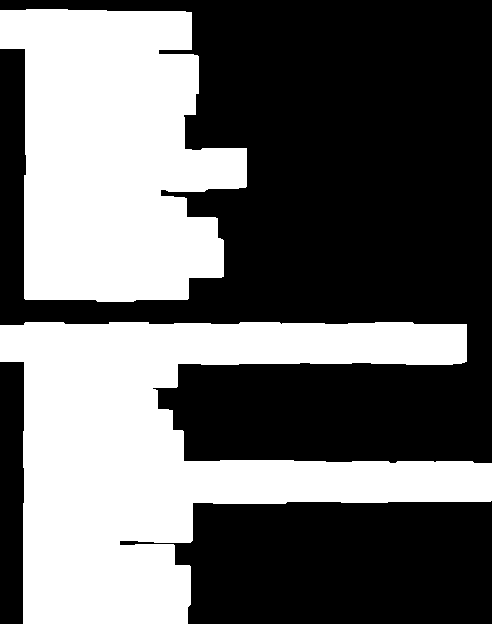

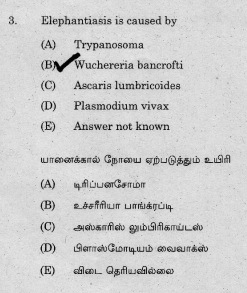

Top question

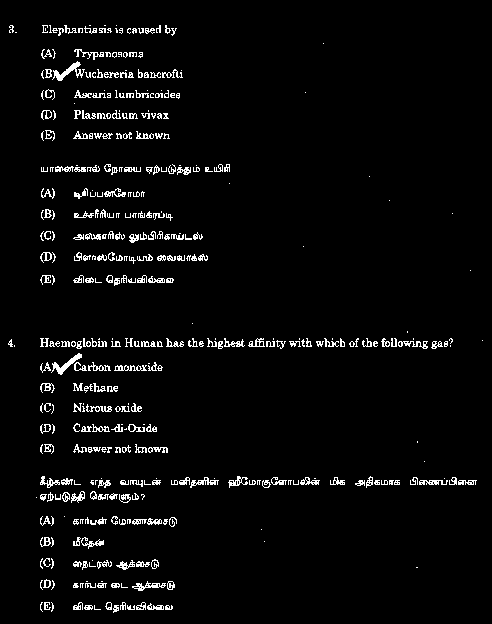

![enter image description here]()

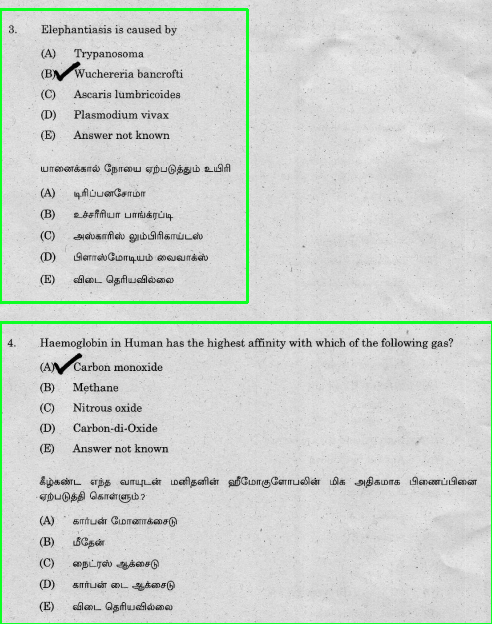

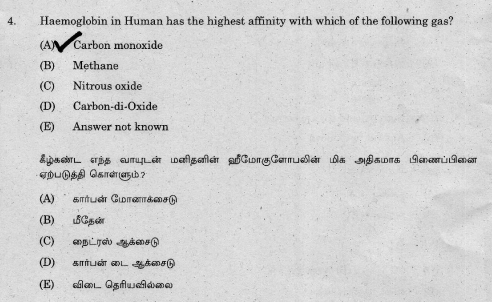

Bottom question

![enter image description here]()

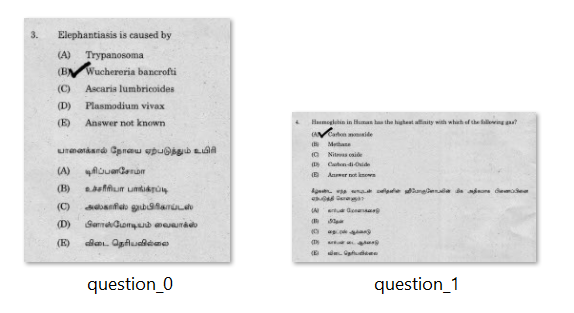

Saved ROI questions (assumption is from top-to-bottom)

![enter image description here]()

Code

import cv2

from imutils import contours

# Load image, grayscale, Gaussian blur, Otsu's threshold

image = cv2.imread('1.png')

original = image.copy()

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

blur = cv2.GaussianBlur(gray, (7,7), 0)

thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

# Remove small artifacts and noise with morph open

open_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5,5))

opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, open_kernel, iterations=1)

# Create rectangular structuring element and dilate

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9,9))

dilate = cv2.dilate(opening, kernel, iterations=4)

# Find contours, sort from top to bottom, and extract each question

cnts = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

(cnts, _) = contours.sort_contours(cnts, method="top-to-bottom")

# Get bounding box of each question, crop ROI, and save

question_number = 0

for c in cnts:

# Filter by area to ensure its not noise

area = cv2.contourArea(c)

if area > 150:

x,y,w,h = cv2.boundingRect(c)

cv2.rectangle(image, (x, y), (x + w, y + h), (36,255,12), 2)

question = original[y:y+h, x:x+w]

cv2.imwrite('question_{}.png'.format(question_number), question)

question_number += 1

cv2.imshow('thresh', thresh)

cv2.imshow('dilate', dilate)

cv2.imshow('image', image)

cv2.waitKey()