In my pipeline I use WriteToBigQuery something like this:

| beam.io.WriteToBigQuery(

'thijs:thijsset.thijstable',

schema=table_schema,

write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,

create_disposition=beam.io.BigQueryDisposition.CREATE_IF_NEEDED)

This returns a Dict as described in the documentation as follows:

The beam.io.WriteToBigQuery PTransform returns a dictionary whose BigQueryWriteFn.FAILED_ROWS entry contains a PCollection of all the rows that failed to be written.

How do I print this dict and turn it into a pcollection or how do I just print the FAILED_ROWS?

If I do: | "print" >> beam.Map(print)

Then I get: AttributeError: 'dict' object has no attribute 'pipeline'

I must have read a hundred pipelines but never have I seen anything after the WriteToBigQuery.

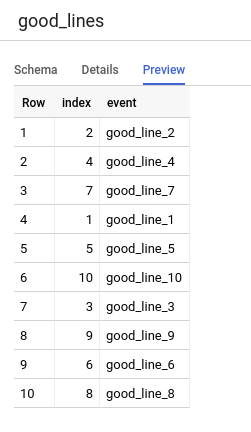

[edit] When I finish the pipeline and store the results in a variable I have the following:

{'FailedRows': <PCollection[WriteToBigQuery/StreamInsertRows/ParDo(BigQueryWriteFn).FailedRows] at 0x7f0e0cdcfed0>}

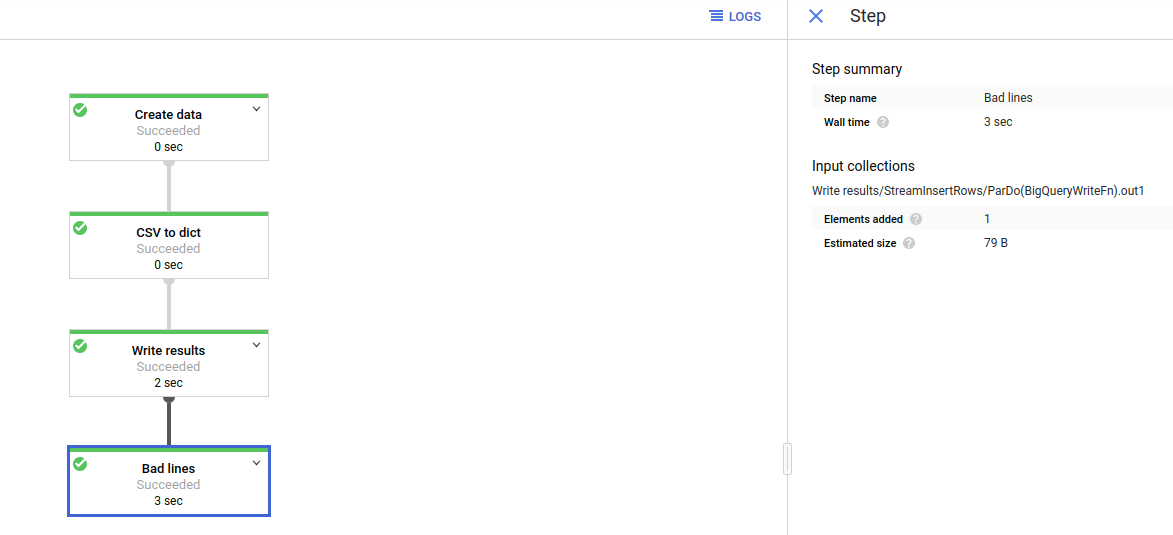

But I do not know how to use this result in the pipeline like this:

| beam.io.WriteToBigQuery(

'thijs:thijsset.thijstable',

schema=table_schema,

write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,

create_disposition=beam.io.BigQueryDisposition.CREATE_IF_NEEDED)

| ['FailedRows'] from previous step

| "print" >> beam.Map(print)