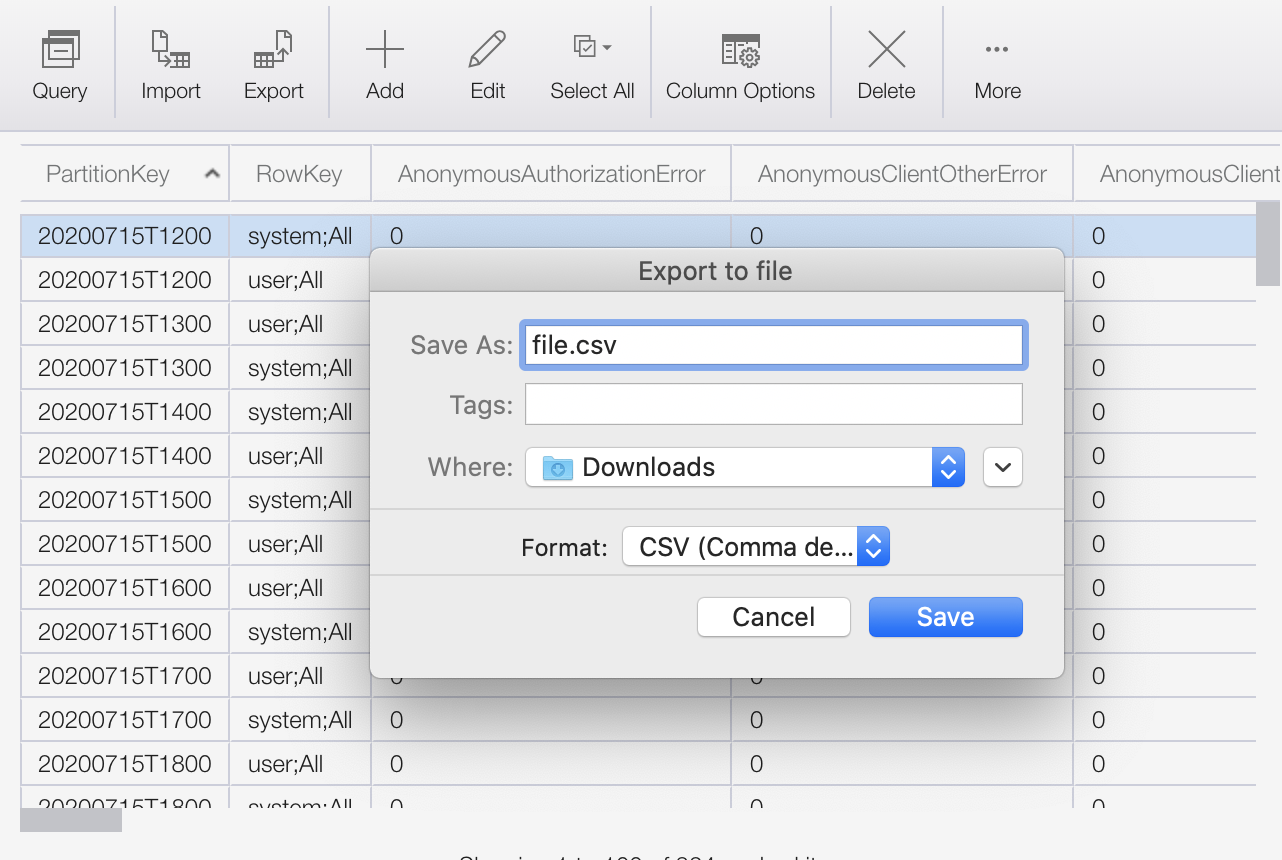

If you're looking for a tool to export data from Azure table storage to a flat file, may I suggest you take a look at Cerebrata's Azure Management Studio (commercial, not free) or ClumsyLeaf's TableXplorer (commercial, not free). Both of these tools can export data in CSV and XML formats.

Since both of the tools are GUI-based, I don't think you can automate the export process with them. For automation, I would suggest you look into Cerebrata's Azure Management Cmdlets, which provide a PowerShell-based interface for exporting data in CSV or XML format.

Since I was associated with Cerebrata in the past, I can only talk about that. The tool won't export on a partition-by-partition basis, but if you know all the PartitionKey values in your table, you can specify a query to export the data for each partition.

If automation is one of the key requirements, you could simply write a console application which runs once per hour and extracts the data for the past hour. You could use the .NET Storage Client library to fetch the data. To do so, first define a class which derives from the TableEntity class. Something like below:

    public class CustomEntity : TableEntity
    {
        public string Attribute1 { get; set; }

        public string Attribute2 { get; set; }

        public string AttributeN { get; set; }

        // Returns the quoted header row for the export file.
        public static string GetHeaders(string delimiter)
        {
            return "\"Attribute1\"" + delimiter + "\"Attribute2\"" + delimiter + "\"AttributeN\"";
        }

        // Returns this entity as one quoted, delimited row.
        public string ToDelimited(string delimiter)
        {
            return "\"" + Attribute1 + "\"" + delimiter + "\"" + Attribute2 + "\"" + delimiter + "\"" + AttributeN + "\"";
        }
    }

Then your application could query the table storage on an hourly basis and save the data to a file:

    // Namespaces below assume the WindowsAzure.Storage package; adjust them for other versions of the client library.
    using System;
    using System.Linq;
    using System.Text;
    using Microsoft.WindowsAzure.Storage;
    using Microsoft.WindowsAzure.Storage.Auth;
    using Microsoft.WindowsAzure.Storage.Table;

    DateTime currentDateTime = DateTime.UtcNow;

    // Assuming the PartitionKey follows the following strategy for naming: YYYYMMDDHH0000
    // .NET format specifiers are case-sensitive: yyyy = year, MM = month, dd = day, HH = hour (24-hour clock).
    var fromPartitionKey = currentDateTime.AddHours(-1).ToString("yyyyMMddHH0000");
    var toPartitionKey = currentDateTime.ToString("yyyyMMddHH0000");

    // Select every partition from the start of the previous hour up to (but not including) the current hour.
    var filterExpression = string.Format("PartitionKey ge '{0}' and PartitionKey lt '{1}'", fromPartitionKey, toPartitionKey);

    var tableName = "<your table name>";
    var cloudStorageAccount = new CloudStorageAccount(new StorageCredentials("<account name>", "<account key>"), true);
    var cloudTableClient = cloudStorageAccount.CreateCloudTableClient();
    var table = cloudTableClient.GetTableReference(tableName);

    TableQuery<CustomEntity> query = new TableQuery<CustomEntity>()
    {
        FilterString = filterExpression,
    };

    var entities = table.ExecuteQuery<CustomEntity>(query).ToList();

    if (entities.Count > 0)
    {
        StringBuilder sb = new StringBuilder();
        sb.Append(CustomEntity.GetHeaders(",") + "\n");

        foreach (var entity in entities)
        {
            sb.Append(entity.ToDelimited(",") + "\n");
        }

        var fileContents = sb.ToString();

        //Now write this string to a file.
    }
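For the last step, here is a rough sketch of how the file could be written. It is only an illustration: the `CsvExportSketch` class, the `Quote` helper, the sample rows and the `export-<hour>.csv` file name in the temp folder are all my own assumptions, not part of the storage library. It also doubles any embedded double quotes, which the `ToDelimited` method above does not do, so values containing `"` would otherwise produce a broken CSV row.

```csharp
using System;
using System.IO;
using System.Text;

public static class CsvExportSketch
{
    // Hypothetical helper: wraps a value in double quotes and doubles any
    // embedded double quotes, so commas and quotes inside a value stay in one field.
    public static string Quote(string value)
    {
        return "\"" + (value ?? string.Empty).Replace("\"", "\"\"") + "\"";
    }

    public static void Main()
    {
        var sb = new StringBuilder();

        // Header row followed by one sample row (made-up values, standing in for real entities).
        sb.AppendLine(string.Join(",", Quote("Attribute1"), Quote("Attribute2"), Quote("AttributeN")));
        sb.AppendLine(string.Join(",", Quote("plain"), Quote("has, comma"), Quote("has \"quote\"")));

        // One file per exported hour; the location and naming scheme are assumptions.
        var exportedHour = DateTime.UtcNow.AddHours(-1);
        var path = Path.Combine(Path.GetTempPath(), "export-" + exportedHour.ToString("yyyyMMddHH") + ".csv");

        File.WriteAllText(path, sb.ToString(), Encoding.UTF8);
        Console.WriteLine("Wrote " + path);
    }
}
```

In the real application you would build the rows from the queried entities instead of the sample values, and point `path` at wherever your import process expects the files.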

As far as importing this data into a relational database is concerned, I'm pretty sure that if you look around you'll find many utilities which can load a CSV file.