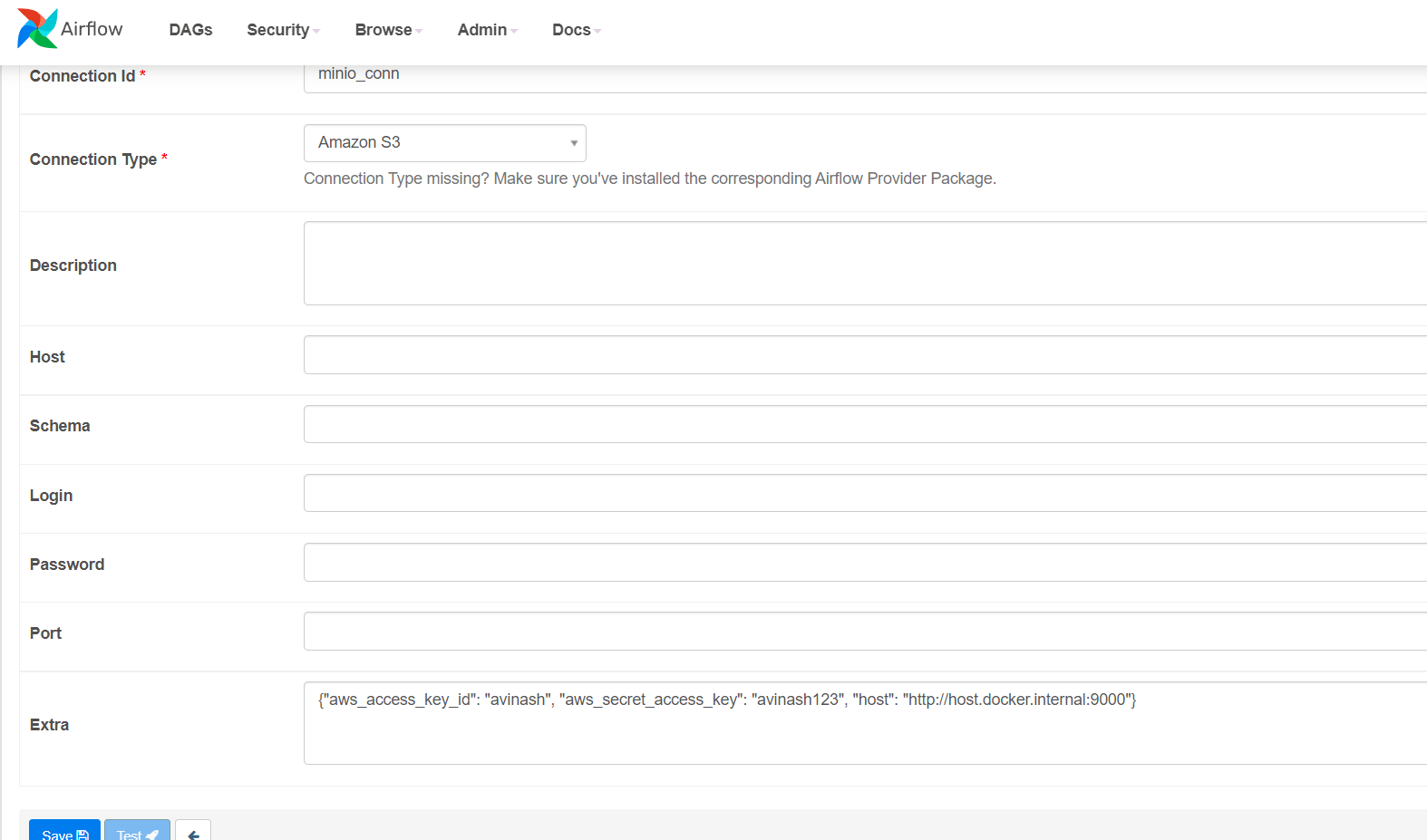

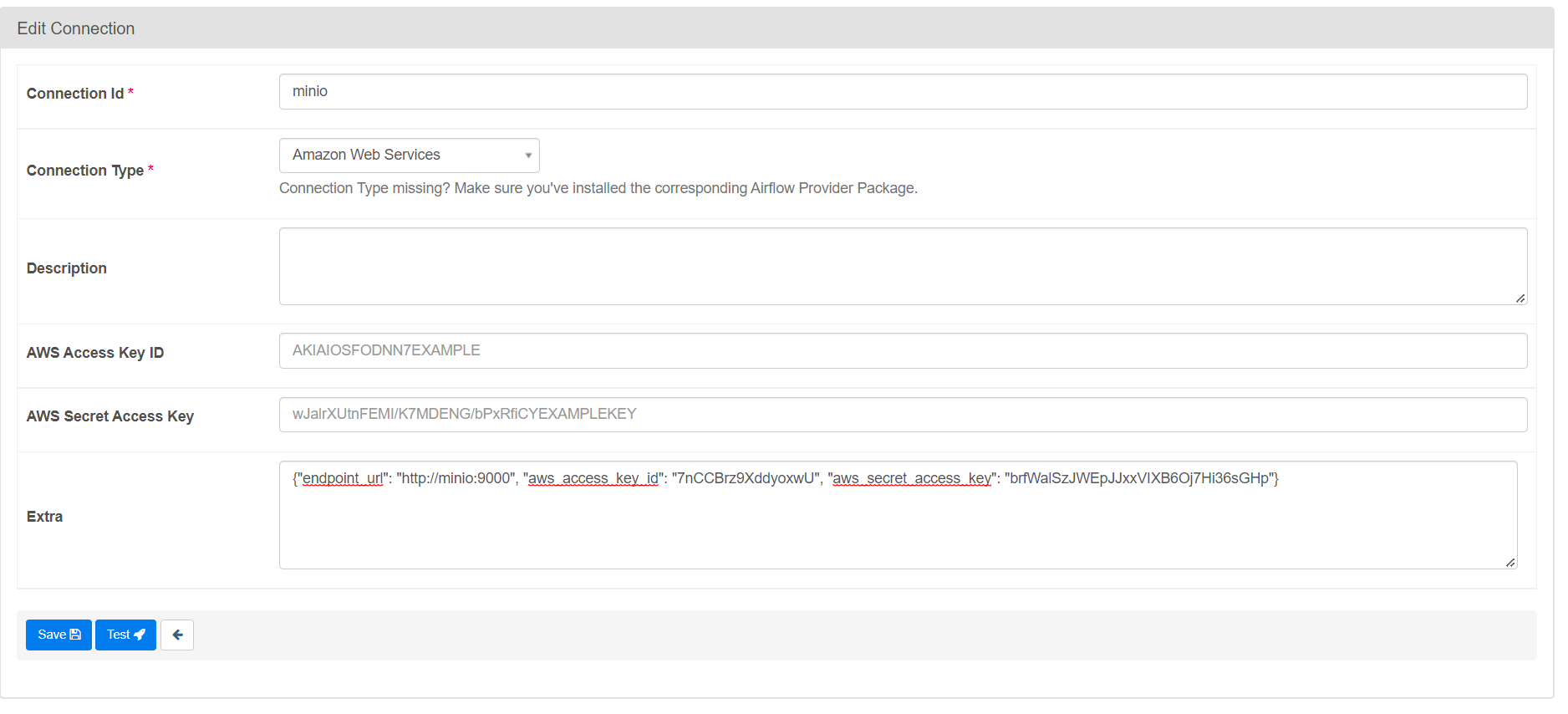

I am trying to add a running instance of MinIO to my Airflow connections. I thought it would be as easy as the setup in the GUI shown above (never mind the exposed credentials; this is a blocked-off environment and they will be changed afterwards).

Airflow and MinIO both run in Docker containers on the same Docker network. Pressing the Test button results in the following error:

'ClientError' error occurred while testing connection: An error occurred (InvalidClientTokenId) when calling the GetCallerIdentity operation: The security token included in the request is invalid.

I am curious what I am missing. The idea is to set up this connection and then use a bucket for data-aware scheduling, i.e. I want to trigger a DAG as soon as someone uploads a file to the bucket.
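
For reference, here is a minimal sketch of how the same connection might look outside the GUI, as a JSON value for an `AIRFLOW_CONN_<CONN_ID>` environment variable. One thing I suspect is that without an `endpoint_url` in the connection extras, the request goes to real AWS rather than to MinIO, which would fit the `GetCallerIdentity` error. The connection id `minio_s3`, the hostname `minio`, port `9000`, and the helper `build_minio_connection` are all assumptions for illustration, not values from my actual setup.

```python
import json


def build_minio_connection(access_key, secret_key, endpoint_url):
    """Hypothetical helper: build an Airflow-style AWS connection dict
    that points at an S3-compatible endpoint instead of AWS itself."""
    return {
        "conn_type": "aws",
        "login": access_key,      # MinIO access key
        "password": secret_key,   # MinIO secret key
        # Assumption: the MinIO container is reachable under this URL
        # from inside the Airflow container (same Docker network).
        "extra": {"endpoint_url": endpoint_url},
    }


conn = build_minio_connection("<access-key>", "<secret-key>", "http://minio:9000")

# Print a line that could go into the Airflow container's environment.
# "MINIO_S3" is an assumed connection id.
print(f"AIRFLOW_CONN_MINIO_S3={json.dumps(conn)}")
```

Even with the endpoint set, the Test button may still fail, since the error above shows it calls the STS `GetCallerIdentity` operation, which MinIO may not implement. Listing a bucket through the connection would probably be a more meaningful check.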