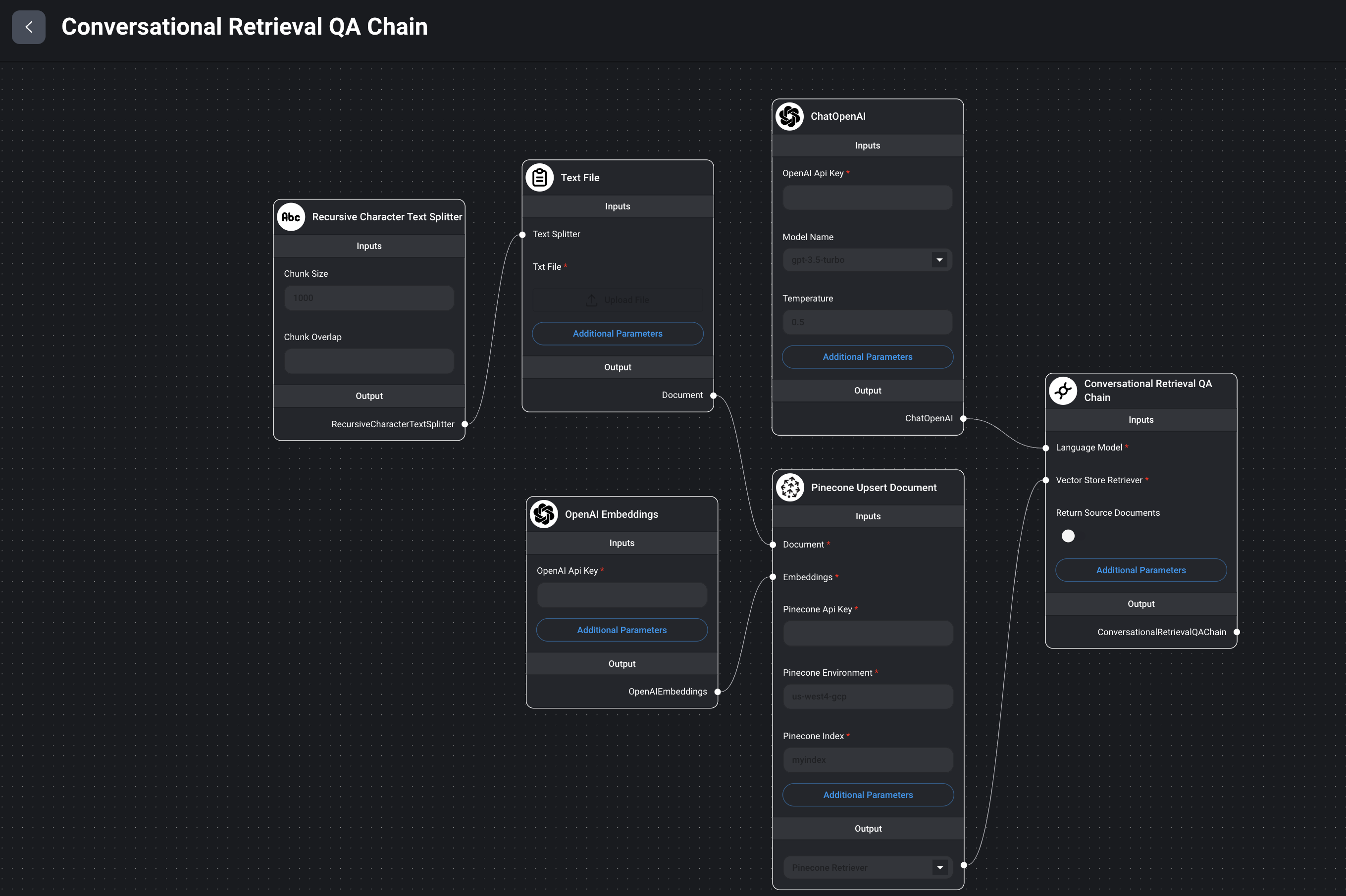

I'm creating a question-answering chatbot over text documents. I'm using LangChain.js with OpenAI (for both embeddings and chat) and Pinecone as my vector store.

I have successfully uploaded the embeddings and created an index on Pinecone, and I'm using a Next.js app to communicate with OpenAI and Pinecone.

The current structure of my app is as follows:

1: Frontend: the user inputs a question, and the frontend makes a POST call to the Next.js server API route `/ask`.

2: The server function looks like this:

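```js
import { NextResponse } from 'next/server'
import { PineconeStore } from 'langchain/vectorstores/pinecone'
import { OpenAIEmbeddings } from 'langchain/embeddings/openai'
import { ChatOpenAI } from 'langchain/chat_models/openai'
import { ConversationSummaryMemory } from 'langchain/memory'
import { ConversationalRetrievalQAChain } from 'langchain/chains'

// `pineconeIndex` is the Pinecone index client, created elsewhere (not shown).

export async function POST(req) {
  // Assumed: the frontend sends { question } as the JSON body.
  const { question } = await req.json()

  // Connect to the existing Pinecone index that holds the document embeddings.
  const vectorStore = await PineconeStore.fromExistingIndex(
    new OpenAIEmbeddings(),
    { pineconeIndex }
  )

  const model = new ChatOpenAI({ temperature: 0.5, modelName: 'gpt-3.5-turbo' })

  // Note: a new memory instance is constructed on every request.
  const memory = new ConversationSummaryMemory({
    memoryKey: 'chat_history',
    llm: new ChatOpenAI({ modelName: 'gpt-3.5-turbo', temperature: 0 }),
  })

  const chain = ConversationalRetrievalQAChain.fromLLM(
    model,
    vectorStore.asRetriever(),
    { memory }
  )

  const result = await chain.call({ question })

  return NextResponse.json({ data: result.text })
}
```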

The ISSUE: the chatbot never has access to any history, because the memory only ever contains the latest message:

```js
console.log('memory:', await memory.loadMemoryVariables({}))
```

I also tried `BufferMemory` and hit the same issue: the buffer only contains the message just asked. When a new query comes in, the buffer is cleared and the new query is the only message in memory.
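A plausible explanation, worth checking against the route above: `memory` is constructed inside the server function, so every request starts from an empty memory and the chain only ever sees the current question. This toy sketch uses a hypothetical `ToyMemory` class (not a LangChain API) to contrast per-request state with module-scoped state.

```javascript
// Hypothetical stand-in for a chat memory object; NOT LangChain's BufferMemory.
class ToyMemory {
  constructor() {
    this.turns = [];
  }
  saveTurn(question, answer) {
    this.turns.push({ question, answer });
  }
}

// Pattern used in the route: a fresh memory is created on every call,
// so it can never hold more than the current turn.
function askWithPerRequestMemory(question) {
  const memory = new ToyMemory();
  memory.saveTurn(question, `answer to: ${question}`);
  return memory.turns.length;
}

// Alternative: a memory created once at module scope persists across calls,
// but only while the same server process handles them, and it is shared
// by every user of that process.
const moduleMemory = new ToyMemory();
function askWithModuleMemory(question) {
  moduleMemory.saveTurn(question, `answer to: ${question}`);
  return moduleMemory.turns.length;
}

console.log(askWithPerRequestMemory('first'), askWithPerRequestMemory('second')); // 1 1
console.log(askWithModuleMemory('first'), askWithModuleMemory('second')); // 1 2
```

Even the module-scoped variant is fragile: on serverless hosting consecutive requests may be served by different instances, and all users would share one history. That may be why an external store such as the Upstash Redis memory mentioned in the update behaves correctly.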

I may be unclear on how exactly to store the history correctly so that the `const result = await chain.call({ question })` call has access to the previous messages.
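One way to give `chain.call` access to earlier turns is to keep the history outside the request, keyed by a conversation ID. This sketch assumes a hypothetical `sessionId` that the client sends with each question; the in-process `Map` is only a stand-in for a shared store (Redis, a database) in a real deployment.

```javascript
// Hypothetical per-conversation history store, keyed by a client-supplied
// sessionId. An in-process Map is only a stand-in for an external store.
const histories = new Map();

// Return the stored turns for a session (empty array for a new session).
function getHistory(sessionId) {
  return histories.get(sessionId) ?? [];
}

// Append one question/answer turn to a session's history.
function appendTurn(sessionId, question, answer) {
  const turns = getHistory(sessionId);
  histories.set(sessionId, [...turns, { question, answer }]);
}

appendTurn('alice', 'What is chapter 1 about?', 'An introduction.');
appendTurn('alice', 'And chapter 2?', 'The methods.');
appendTurn('bob', 'Who wrote this?', 'The author.');

console.log(getHistory('alice').length); // 2
console.log(getHistory('bob').length); // 1
console.log(getHistory('carol').length); // 0
```

In the route, the handler would read `getHistory(sessionId)` before calling the chain and call `appendTurn(sessionId, question, result.text)` afterwards, so each user's conversation stays separate.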

UPDATE: I have successfully used Upstash Redis-backed chat memory with LangChain, but I'm still curious: is it possible to store the messages in the user's browser instead?
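Storing the messages in the browser should be possible in principle: the client keeps the history (for example in `localStorage`) and sends it with every POST to `/ask`, so the server stays stateless. This sketch is written against the standard Web Storage methods `getItem`/`setItem`; the `chatHistory` key and the `{ question, history }` body shape are assumptions, and a small in-memory object stands in for `window.localStorage` so it also runs outside a browser.

```javascript
// Hypothetical storage key; any string works.
const HISTORY_KEY = 'chatHistory';

// Read the saved turns from a Web Storage object (e.g. window.localStorage).
function loadHistory(storage) {
  const raw = storage.getItem(HISTORY_KEY);
  return raw ? JSON.parse(raw) : [];
}

// Persist a new question/answer turn.
function saveTurn(storage, question, answer) {
  const turns = loadHistory(storage);
  turns.push({ question, answer });
  storage.setItem(HISTORY_KEY, JSON.stringify(turns));
}

// Build the JSON body for the POST to /ask, including prior turns.
// The { question, history } shape is an assumption, not an existing API.
function buildAskBody(storage, question) {
  return JSON.stringify({ question, history: loadHistory(storage) });
}

// Minimal in-memory stand-in for window.localStorage (string values only).
function createMemoryStorage() {
  const data = new Map();
  return {
    getItem: (key) => (data.has(key) ? data.get(key) : null),
    setItem: (key, value) => {
      data.set(key, String(value));
    },
  };
}

const storage = createMemoryStorage();
saveTurn(storage, 'What is chapter 1 about?', 'An introduction.');
console.log(buildAskBody(storage, 'Was that nice?'));
```

In the browser this would be used as `fetch('/ask', { method: 'POST', headers: { 'Content-Type': 'application/json' }, body: buildAskBody(window.localStorage, question) })`. Keep in mind that client-side history can be edited by the user, so the server should not trust it for anything sensitive.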

`await chain.call({ question: "Was that nice?", chat_history: chatHistory });` but tbh I didn't try it out: js.langchain.com/docs/modules/chains/index_related_chains/… – Microsome

`RedisChatMessageHistory` in `BufferMemory`. Does Pinecone have a similar solution? – Magdau
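Following the first comment's suggestion, the server could turn the history it receives into a `chat_history` value for `chain.call` instead of relying on a memory object; in that style of usage the LangChain.js docs example creates the chain without a `memory` option and builds `chatHistory` as a plain string. The helper here is a hypothetical sketch that formats turns as `Human:`/`Assistant:` lines; whether a string or an array of messages is expected may depend on your LangChain.js version.

```javascript
// Hypothetical helper: flatten stored turns into a single chat_history string.
// The "Human:"/"Assistant:" labels are an assumed convention, not a required format.
function formatChatHistory(turns) {
  return turns
    .map(({ question, answer }) => `Human: ${question}\nAssistant: ${answer}`)
    .join('\n');
}

const turns = [
  { question: 'What is chapter 1 about?', answer: 'An introduction.' },
  { question: 'And chapter 2?', answer: 'The methods.' },
];

const chatHistory = formatChatHistory(turns);
console.log(chatHistory);
// Human: What is chapter 1 about?
// Assistant: An introduction.
// Human: And chapter 2?
// Assistant: The methods.

// In the route (not run here), something like:
// const result = await chain.call({ question, chat_history: chatHistory })
```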