We're prototyping a chatbot application with the Azure OpenAI gpt-35-turbo model on the Standard tier.

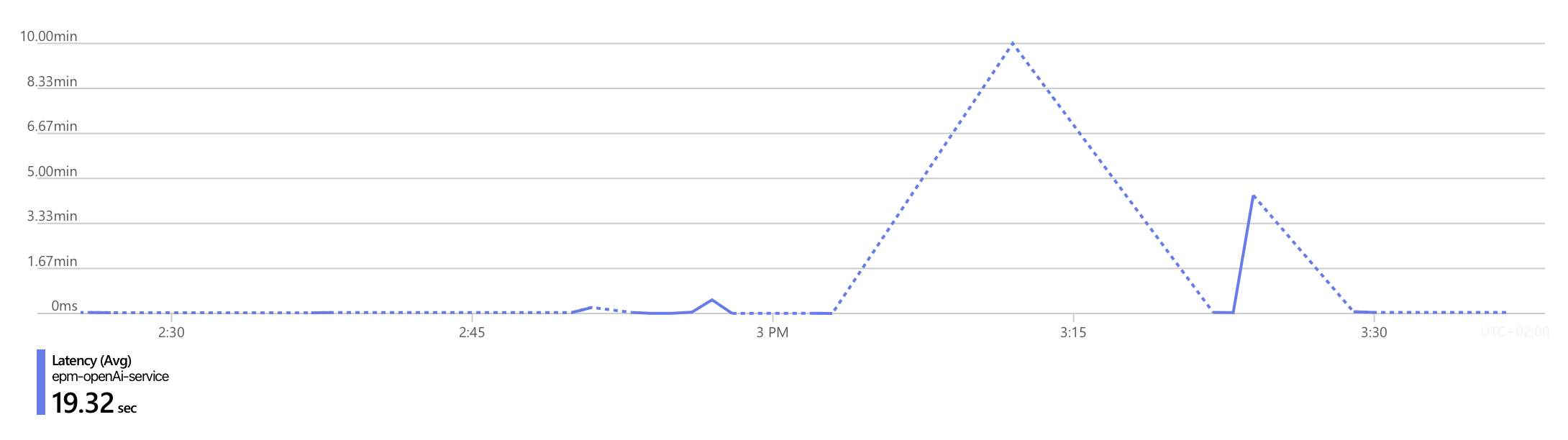

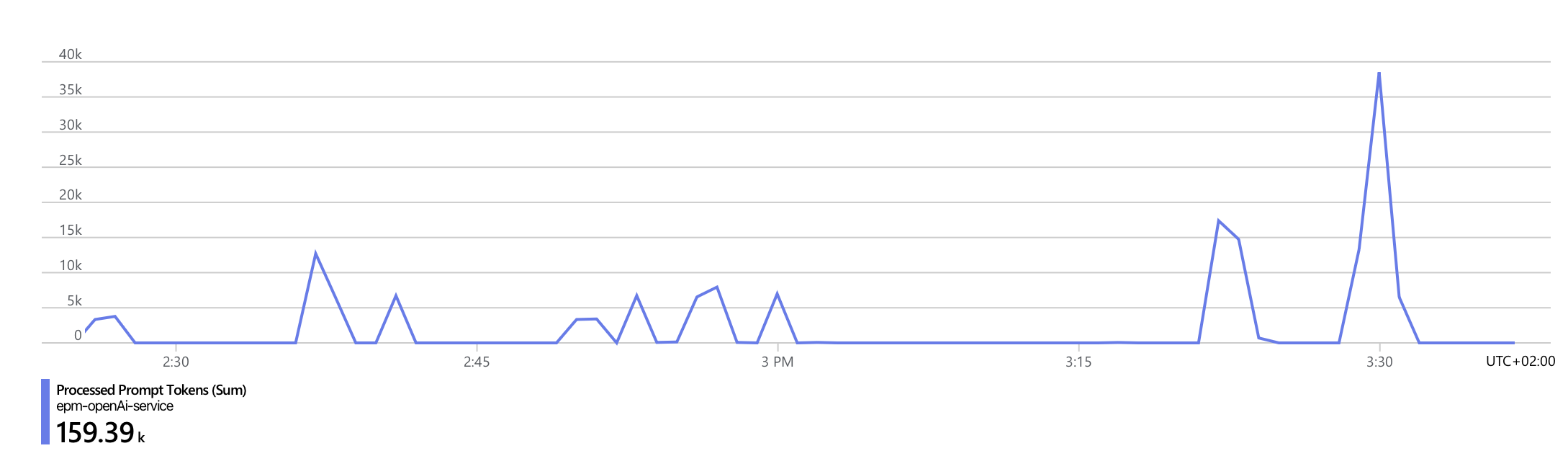

We're seeing random latency spikes, some of which last between 3 and 20 minutes. The screenshots above show the metrics from the portal. As you can see, neither the usage nor the rate-limit metrics indicate high load.

In fact, the application isn't deployed to production yet; only our development team uses it, for testing. Understanding these latency spikes will help us validate our PoC and use the Azure OpenAI Service in production.

Any ideas on how to solve this issue?

Model properties:

- Model name: gpt-35-turbo
- Model version: 0301
- Deployment type: Standard
- Content filter: Default
- Tokens-per-minute rate limit (thousands): 120
- Rate limit (tokens per minute): 120000
- Rate limit (requests per minute): 720
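One way to make the spikes easier to correlate with the portal metrics is to log each request's start time and duration on the client side. Below is a minimal sketch, assuming the chatbot is written in Python (the post doesn't say). `timed_call` and `summarize` are hypothetical helpers, and the 30-second spike threshold is an arbitrary placeholder.

```python
import statistics
import time
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")

def timed_call(fn: Callable[[], T], log: List[Tuple[float, float]]) -> T:
    """Run fn() and append (wall-clock start time, latency in seconds) to log."""
    started_at = time.time()      # epoch timestamp, to line up with portal metrics
    t0 = time.perf_counter()      # monotonic clock, for measuring the duration
    try:
        return fn()
    finally:
        # Record the latency even if the call raised (e.g. a timeout).
        log.append((started_at, time.perf_counter() - t0))

def summarize(latencies: List[float], spike_threshold_s: float = 30.0) -> dict:
    """Return p50/p95/max latency and how many calls exceeded the threshold."""
    ordered = sorted(latencies)
    # n=100 yields 99 cut points: index 49 is the median, index 94 is p95.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "count": len(ordered),
        "p50": cuts[49],
        "p95": cuts[94],
        "max": ordered[-1],
        "spikes": sum(1 for x in ordered if x > spike_threshold_s),
    }
```

To use it, wrap whatever function currently sends the chat request, e.g. `timed_call(lambda: send_chat(prompt), log)`, where `send_chat` is a stand-in for your own code. Then pass `[lat for _, lat in log]` to `summarize`. The stored start times let you check whether slow requests cluster at particular times of day.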
