I'm trying to learn how to reduce dimensionality in datasets. I came across some tutorials on Principal Component Analysis (PCA) and Singular Value Decomposition (SVD). I understand that PCA takes the dimension of greatest variance and sequentially collapses dimensions of the next highest variance (overly simplified).

I'm confused about how to interpret the output matrices. I looked at the documentation, but it wasn't much help. I followed some tutorials and still wasn't sure exactly what the resulting matrices were. I've provided some code to get a feel for the distribution of each variable in the dataset (from sklearn.datasets).
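A minimal sketch of that kind of setup, assuming the dataset is `load_diabetes` from `sklearn.datasets`. That is an assumption: the post does not name the dataset, but its 442 samples by 10 attributes match the SVD shapes shown further down. It prints per-attribute summary statistics rather than drawing a plot:

```python
import numpy as np
from sklearn.datasets import load_diabetes

# Assumption: the diabetes dataset; the original post does not name it.
data = load_diabetes()
X = data.data                        # shape (442, 10): n samples x m attributes
feature_names = data.feature_names   # labels of the m original attributes

print(X.shape)
for name, column in zip(feature_names, X.T):
    # One line per attribute: a rough picture of its distribution
    print(f"{name:>4}  mean={column.mean():+.3f}  std={column.std():.3f}  "
          f"min={column.min():+.3f}  max={column.max():+.3f}")
```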

My initial input array is an (n x m) matrix of n samples and m attributes. I could do a common PCA plot of PC1 vs. PC2, but how do I know which of the original dimensions each PC represents?

Sorry if this is a basic question. A lot of the resources are very math heavy, which I'm fine with, but a more intuitive answer would be useful. Nowhere I've looked talks about how to interpret the output in terms of the original labeled data.

I'm open to using sklearn's decomposition.PCA.
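For reference, a rough sketch of how `sklearn.decomposition.PCA` exposes the link between each PC and the original attributes. It assumes the `load_diabetes` dataset as a stand-in (not confirmed by the post); the variable names `scores` and `names` are only illustrative:

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.decomposition import PCA

data = load_diabetes()            # assumption: stand-in dataset
X, names = data.data, data.feature_names

pca = PCA(n_components=2)
scores = pca.fit_transform(X)     # (442, 2): coordinates for a PC1 vs. PC2 plot

# components_ has shape (n_components, n_features): row k holds the weight of
# each original attribute in PC(k+1).
print(pca.components_.shape)
print(pca.explained_variance_ratio_)

for k, component in enumerate(pca.components_, start=1):
    # Rank attributes by absolute weight to see which ones dominate this PC
    order = np.argsort(np.abs(component))[::-1]
    top = ", ".join(f"{names[i]} ({component[i]:+.2f})" for i in order[:3])
    print(f"PC{k}: {top}")
```

Read this way, a PC is not one of the original dimensions but a weighted combination of all of them; the rows of `components_` give those weights, so large absolute values point to the attributes that contribute most.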

#Singular Value Decomposition

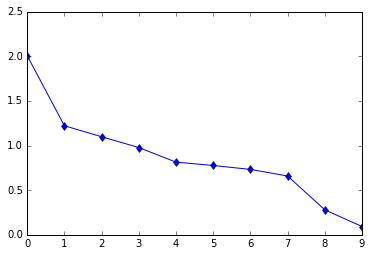

U, s, V = np.linalg.svd(X, full_matrices=True)

print(U.shape, s.shape, V.shape, sep="\n")

(442, 442)

(10,)

(10, 10)
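One way to read these three outputs, sketched under the assumption that `X` is the `load_diabetes` data (a stand-in, not confirmed by the post). The data is mean-centered first, since PCA works on centered data, and `full_matrices=False` is used so that `U` keeps only the 10 columns that pair with a singular value. With `full_matrices=True`, `U` is (442, 442) and the extra columns are not used in the reconstruction:

```python
import numpy as np
from sklearn.datasets import load_diabetes

X = load_diabetes().data          # assumption: stand-in (442, 10) matrix
Xc = X - X.mean(axis=0)           # PCA operates on mean-centered data

# NumPy returns V already transposed, so the third output is named Vt here.
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
print(U.shape, s.shape, Vt.shape, sep="\n")   # (442, 10), (10,), (10, 10)

# The three factors multiply back to the centered data.
assert np.allclose(Xc, (U * s) @ Vt)

# Rows of Vt: principal directions expressed in the original attributes.
# U * s (equivalently Xc @ Vt.T): each sample's coordinates on the PCs.
scores = U * s
assert np.allclose(scores, Xc @ Vt.T)

# Squared singular values give the variance captured by each component.
explained_variance = s**2 / (Xc.shape[0] - 1)
print(explained_variance / explained_variance.sum())
```

In this reading, row `k` of `Vt` plays the same role as row `k` of `PCA.components_` (up to sign), and the first two columns of `scores` are what a PC1 vs. PC2 plot would show.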