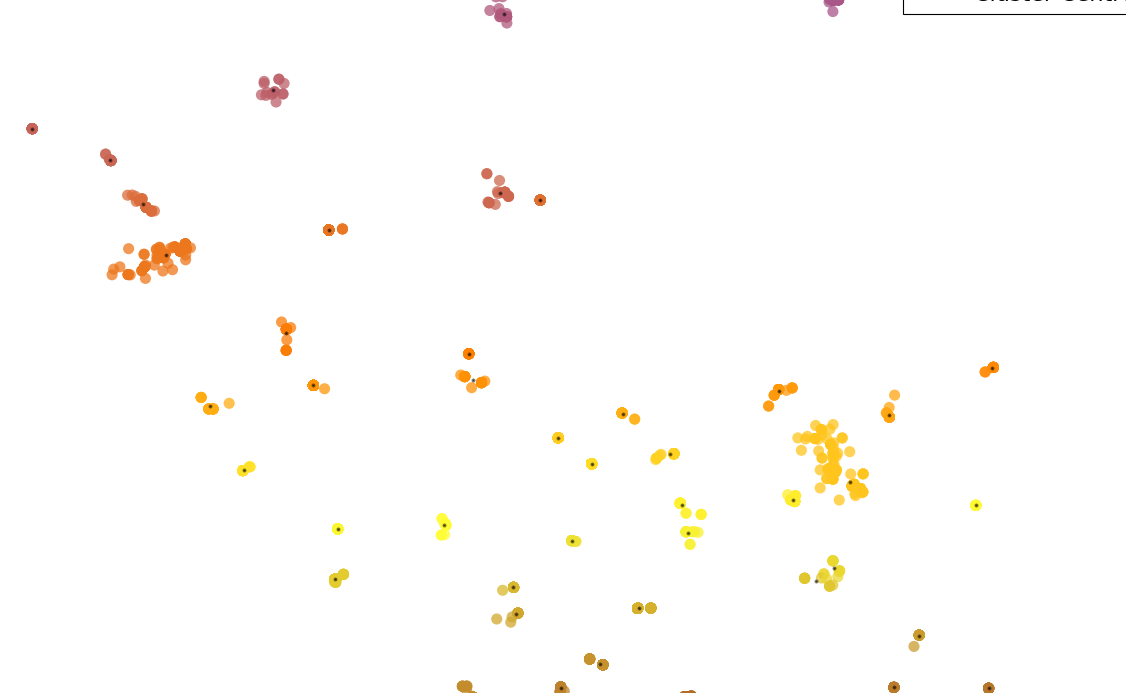

I’m working with geo-located social media posts and clustering their locations (latitude/longitude) using DBSCAN. Many users in my data set have posted multiple times, which allows me to derive their trajectories (time-ordered sequences of positions from place to place). For example (user_id followed by the ordered positions):

3945641 [[38.9875, -76.94], [38.91711157, -77.02435118], [38.8991, -77.029], [38.8991, -77.029], [38.88927534, -77.04858468]]
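The post doesn't say which DBSCAN implementation is used. Assuming scikit-learn's `DBSCAN`, one detail matters for lat/lon data: with `metric="haversine"` it expects `[lat, lon]` in radians and measures distance on the unit sphere, so `eps` has to be converted from kilometres to radians. A minimal sketch; the helper name `cluster_points` and the `eps_km`/`min_samples` defaults are illustrative, not tuned values:

```python
import numpy as np
from sklearn.cluster import DBSCAN

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius, used to convert km <-> radians


def cluster_points(latlon_deg, eps_km=0.5, min_samples=5):
    """Cluster [lat, lon] pairs (degrees) with DBSCAN on great-circle distance.

    Hypothetical helper; eps_km and min_samples are placeholders, not tuned.
    Returns one label per point; -1 marks noise.
    """
    # scikit-learn's haversine metric expects [lat, lon] in radians
    coords_rad = np.radians(np.asarray(latlon_deg, dtype=float))
    db = DBSCAN(
        eps=eps_km / EARTH_RADIUS_KM,  # unit-sphere distance, so eps is in radians
        min_samples=min_samples,
        metric="haversine",
        algorithm="ball_tree",
    )
    return db.fit_predict(coords_rad)


if __name__ == "__main__":
    points = [[38.9875, -76.94], [38.91711157, -77.02435118], [38.8991, -77.029],
              [38.8991, -77.029], [38.88927534, -77.04858468]]
    print(cluster_points(points, eps_km=0.5, min_samples=2))
```

On the example trajectory with `eps_km=0.5` and `min_samples=2`, only the two identical positions form a cluster; the other points are roughly 2 km or more apart and come out as noise.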

I have derived trajectories for my entire data set, and my next step is to cluster or aggregate the trajectories in order to identify areas with dense movements between locations. Any ideas on how to tackle trajectory clustering/aggregation in Python?
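One common way to cluster trajectories directly is to break them into segments (consecutive pairs of positions) and cluster the segments, so that trips sharing both an origin area and a destination area end up in the same group; large segment clusters then mark dense movement corridors. A minimal sketch assuming scikit-learn. The segment distance used here (the larger of the start-to-start and end-to-end great-circle distances), the helper names, and the `eps_km`/`min_samples` values are my own illustrative choices, not a standard definition. Segments are directed, so A-to-B and B-to-A trips fall into different clusters, and the full n×n distance matrices mean this only scales to a moderate number of segments.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.metrics.pairwise import haversine_distances

EARTH_RADIUS_KM = 6371.0088


def trajectory_segments(trajectories):
    """Split trajectories ([[lat, lon], ...] lists) into directed (start, end) segments.

    Hypothetical helper; zero-length hops (two posts from the same spot) are skipped.
    """
    segments = []
    for traj in trajectories:
        for start, end in zip(traj[:-1], traj[1:]):
            if list(start) != list(end):
                segments.append((start, end))
    return segments


def cluster_segments(segments, eps_km=1.0, min_samples=3):
    """DBSCAN over segments; returns one label per segment, -1 for noise."""
    if not segments:
        return np.array([], dtype=int)
    starts = np.radians([s for s, _ in segments])
    ends = np.radians([e for _, e in segments])
    # Pairwise great-circle distances in km (haversine_distances returns radians)
    d_start = haversine_distances(starts) * EARTH_RADIUS_KM
    d_end = haversine_distances(ends) * EARTH_RADIUS_KM
    # Two segments are neighbours only if their starts AND their ends are within eps
    dist = np.maximum(d_start, d_end)
    return DBSCAN(eps=eps_km, min_samples=min_samples, metric="precomputed").fit_predict(dist)
```

More elaborate segment-based methods exist, but this keeps the idea visible: the output labels say which user movements follow "the same" route at the chosen spatial tolerance.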

Here is some code I've been working with to create trajectories as line strings/JSON dicts:

```python
import pandas as pd
import numpy as np
import ujson as json
import time

# Import data
data = pd.read_csv('filepath.csv', delimiter=',', engine='python')
#print(len(data), "rows")
#print(data)

# Create data frame
df = pd.DataFrame(data, columns=['user_id', 'timestamp', 'latitude', 'longitude', 'cluster_labels'])
#print(data.head())

# Get a list of unique user_id values
uniqueIds = np.unique(data['user_id'].values)

# Get the ordered (by timestamp) coordinates for each user_id
output = [[uid, data.loc[data['user_id'] == uid].sort_values(by='timestamp')[['latitude', 'longitude']].values.tolist()]
          for uid in uniqueIds]

# Save outputs as CSV
outputs = pd.DataFrame(output)
#print(outputs)
outputs.to_csv('filepath_out.csv', index=False, header=False)

# Save outputs as JSON
#outputDict = {}
#for i in output:
#    outputDict[i[0]] = i[1]
#with open('filepath.json', 'w') as f:
#    json.dump(outputDict, f, sort_keys=True, indent=4, ensure_ascii=False)
```
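The per-user list comprehension filters the whole frame once for every user, which gets slow as the number of users grows; one sort followed by `groupby` builds the same ordered coordinate lists in a single pass. Since the aim is line strings, this sketch also wraps each trajectory as a GeoJSON `LineString` feature. GeoJSON orders positions as [longitude, latitude], the reverse of the lists above, and a LineString needs at least two positions, so single-post users are skipped. The function names are hypothetical, and `timestamp` is assumed to sort chronologically (numeric or ISO 8601 strings; otherwise convert it with `pd.to_datetime` first).

```python
import json

import pandas as pd


def build_trajectories(df):
    """Return {user_id: [[lat, lon], ...]} ordered by timestamp (hypothetical helper)."""
    ordered = df.sort_values(["user_id", "timestamp"])
    # groupby keeps the sorted row order inside each group
    return {
        user_id: group[["latitude", "longitude"]].values.tolist()
        for user_id, group in ordered.groupby("user_id")
    }


def to_feature_collection(trajectories):
    """Wrap each trajectory with >= 2 positions as a GeoJSON LineString feature."""
    features = []
    for user_id, latlon in trajectories.items():
        if len(latlon) < 2:  # a LineString needs at least two positions
            continue
        features.append({
            "type": "Feature",
            # str() avoids JSON serialization errors on numpy integer ids
            "properties": {"user_id": str(user_id)},
            "geometry": {
                "type": "LineString",
                # GeoJSON position order is [longitude, latitude]
                "coordinates": [[lon, lat] for lat, lon in latlon],
            },
        })
    return {"type": "FeatureCollection", "features": features}


def save_geojson(feature_collection, path):
    with open(path, "w") as f:
        json.dump(feature_collection, f, indent=2)
```

The resulting file can be opened directly in most GIS tools, which makes it easy to eyeball the trajectories before clustering them.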

EDIT

I've come across a Python package, NetworkX, and was debating the idea of creating a network graph from my clusters as opposed to clustering the trajectory lines/segments. Any opinions on clustering trajectories vs. turning clusters into a graph to identify densely clustered movements between locations?
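For the graph approach, one option is a directed graph whose nodes are the DBSCAN clusters and whose edge weights count how often users moved from one cluster to the next; the heaviest edges are then the densest flows between locations. A minimal sketch with NetworkX, assuming the `cluster_labels` column from the code above uses scikit-learn-style labels with `-1` for noise. The function names are hypothetical, and two choices are my own: noise points are dropped, so a user going from cluster A through a noise point to cluster B counts as one A-to-B move, and consecutive posts in the same cluster are not counted as movement.

```python
import networkx as nx
import pandas as pd


def build_transition_graph(df, noise_label=-1):
    """Directed graph: nodes are cluster labels, edge weight = number of observed
    user moves from one cluster to the next (hypothetical helper)."""
    G = nx.DiGraph()
    ordered = df.sort_values(["user_id", "timestamp"])
    for _, labels in ordered.groupby("user_id")["cluster_labels"]:
        # Drop noise points
        seq = [int(lab) for lab in labels if lab != noise_label]
        # Collapse repeats: staying in one cluster is not a move
        seq = [lab for i, lab in enumerate(seq) if i == 0 or lab != seq[i - 1]]
        for src, dst in zip(seq[:-1], seq[1:]):
            if G.has_edge(src, dst):
                G[src][dst]["weight"] += 1
            else:
                G.add_edge(src, dst, weight=1)
    return G


def top_flows(G, n=10):
    """The n heaviest (src, dst, weight) edges."""
    return sorted(G.edges(data="weight"), key=lambda e: e[2], reverse=True)[:n]
```

Compared with clustering segments, this discards the geometry of the path between clusters, but it is cheap to build and answers "which pairs of places have the most movement between them" directly; the weighted graph can also be analyzed further with NetworkX (for example weighted degree via `G.degree(weight="weight")`).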