At the moment, there is no global optimization method built into TensorFlow. There is a window onto the SciPy world via `ScipyOptimizerInterface`, but it (currently?) only wraps SciPy's `minimize`, which is a local minimizer.

However, you can still treat TensorFlow's execution result like any other function and feed it to the optimizer of your choice. Say you want to experiment with SciPy's `basinhopping` global optimizer. You could write:

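```python
import numpy as np
from scipy.optimize import basinhopping
import tensorflow as tf  # TF1 graph API; under TF 2.x use tf.compat.v1 with eager execution disabled

# Both coordinates are fed in through a single placeholder
v = tf.placeholder(dtype=tf.float32, shape=(2,))
x = v[0]
y = v[1]

# Objective: sum of squared residuals of the two curves
f1 = y - x*x
f2 = x - (y - 2)*(y - 2) + 1.1
sq = f1 * f1 + f2 * f2

starting_point = np.array([-1.3, 2.0], np.float32)

with tf.Session() as sess:
    # The graph is evaluated as an opaque function of the point
    o = basinhopping(lambda point: sess.run(sq, {v: point}),
                     x0=starting_point, T=10, niter=1000)
    print(o.x)
    # [0.76925635 0.63757862]
```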

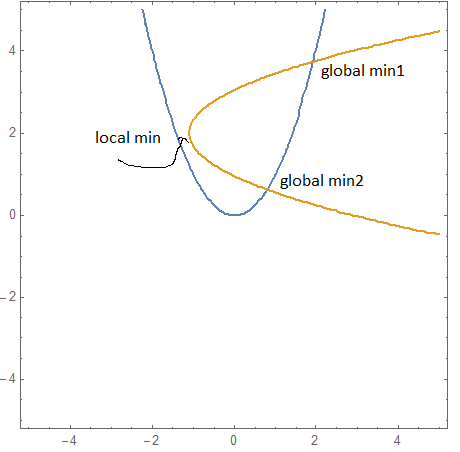

(I had to tweak basinhopping's temperature `T` and number of iterations `niter`, as the default values would often not let the solution escape the basin of the local minimum near the starting point used here.)
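To see the basin problem in isolation, here is a sketch that reimplements the same objective in plain NumPy (an assumption made only so it runs without a TensorFlow session; `objective` is a hypothetical name) and compares a single local `scipy.optimize.minimize` run from the same starting point with `basinhopping`. `niter` is lowered to 200 only to keep it quick.

```python
import numpy as np
from scipy.optimize import basinhopping, minimize


def objective(p):
    """Hypothetical NumPy stand-in for the TensorFlow graph `sq`."""
    x, y = p
    f1 = y - x * x
    f2 = x - (y - 2) ** 2 + 1.1
    return f1 * f1 + f2 * f2


starting_point = np.array([-1.3, 2.0])

# One local minimization (BFGS by default) from the starting point
local = minimize(objective, starting_point)

# basinhopping repeats local minimizations after random perturbations
# and keeps the lowest minimum it has seen
result = basinhopping(objective, x0=starting_point, T=10, niter=200)

print("local:       ", local.x, local.fun)
print("basinhopping:", result.x, result.fun)
```

Because `basinhopping` starts with that same local minimization and keeps the lowest minimum found, `result.fun` can never be worse than `local.fun`; whether it actually reaches the global minimum still depends on the random steps.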

What you lose by treating TensorFlow as a black box is that the optimizer does not have access to the gradients that TensorFlow computes automatically. In that sense it is not optimal, though you still benefit from GPU acceleration when evaluating your function.
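To make that cost concrete: when no gradient is supplied, SciPy's BFGS (the default local method for an unconstrained problem) estimates it by finite differences, which means extra objective evaluations, i.e. extra `sess.run` calls in the TensorFlow version. The sketch below assumes a NumPy stand-in for the graph and a hand-derived gradient (neither is part of the original answer; `make_counted_objective` and `gradient` are hypothetical names) and simply counts objective calls.

```python
import numpy as np
from scipy.optimize import minimize


def make_counted_objective():
    """Return an objective plus a dict that counts its evaluations (hypothetical helper)."""
    calls = {"n": 0}

    def objective(p):
        calls["n"] += 1
        x, y = p
        f1 = y - x * x
        f2 = x - (y - 2) ** 2 + 1.1
        return f1 * f1 + f2 * f2

    return objective, calls


def gradient(p):
    """Hand-derived gradient of f1**2 + f2**2 (what tf.gradients would build)."""
    x, y = p
    f1 = y - x * x
    f2 = x - (y - 2) ** 2 + 1.1
    return np.array([-4 * x * f1 + 2 * f2, 2 * f1 - 4 * (y - 2) * f2])


x0 = np.array([-1.3, 2.0])

f_fd, calls_fd = make_counted_objective()
res_fd = minimize(f_fd, x0)  # gradient estimated by finite differences

f_jac, calls_jac = make_counted_objective()
res_jac = minimize(f_jac, x0, jac=gradient)  # gradient supplied explicitly

print("objective calls without jac:", calls_fd["n"])
print("objective calls with jac:   ", calls_jac["n"])
```

In two dimensions each finite-difference gradient costs two extra objective calls, so the gap grows with the number of variables.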

EDIT

Since you can explicitly provide gradients to the local minimizer used by basinhopping, you could feed in the result of TensorFlow's `tf.gradients`:

```python
import numpy as np
from scipy.optimize import basinhopping
import tensorflow as tf  # TF1 graph API; under TF 2.x use tf.compat.v1 with eager execution disabled

v = tf.placeholder(dtype=tf.float32, shape=(2,))
x = v[0]
y = v[1]

f1 = y - x*x
f2 = x - (y - 2)*(y - 2) + 1.1
sq = f1 * f1 + f2 * f2

# Symbolic gradient of sq with respect to v, built by TensorFlow
sq_grad = tf.gradients(sq, v)[0]

init_value = np.array([-1.3, 2.0], np.float32)

with tf.Session() as sess:
    def f(point):
        return sess.run(sq, {v: point})

    def g(point):
        return sess.run(sq_grad, {v: point})

    # The gradient is forwarded to the local minimizer through minimizer_kwargs
    o = basinhopping(f, x0=init_value, T=10.0, niter=1000,
                     minimizer_kwargs={'jac': g})
    print(o.x)
    # [0.79057982 0.62501636]
```
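Before handing a gradient to the minimizer, it can be worth checking it. `sq_grad` is the chain-rule gradient of `f1*f1 + f2*f2`; the sketch below writes it out by hand in NumPy (a hypothetical stand-in for what `tf.gradients` returns, not TensorFlow code) and compares it with a finite-difference estimate using `scipy.optimize.check_grad`, which returns the norm of the difference.

```python
import numpy as np
from scipy.optimize import check_grad


def objective(p):
    x, y = p
    f1 = y - x * x
    f2 = x - (y - 2) ** 2 + 1.1
    return f1 * f1 + f2 * f2


def gradient(p):
    # Chain rule: d(f1^2 + f2^2) = 2*f1*d(f1) + 2*f2*d(f2)
    # with d(f1) = (-2x, 1) and d(f2) = (1, -2(y - 2))
    x, y = p
    f1 = y - x * x
    f2 = x - (y - 2) ** 2 + 1.1
    return np.array([-4 * x * f1 + 2 * f2, 2 * f1 - 4 * (y - 2) * f2])


x0 = np.array([-1.3, 2.0])
# Norm of (analytic gradient - finite-difference gradient) at x0
err = check_grad(objective, gradient, x0)
print(err)
```

A small value means the analytic gradient agrees with the finite-difference one at that point; a large one would point to a bug in the gradient.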

For some reason, this is much slower than without providing the gradient. However, it could be that when gradients are provided the minimization algorithm is not the same, so the comparison may not make sense.
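With the default `minimizer_kwargs`, SciPy's `minimize` picks BFGS whether or not `jac` is given, since the problem has no bounds or constraints. One possible contributor to the slowdown (an assumption, not measured here) is that `f` and `g` each trigger a separate `sess.run`. `minimize` also accepts `jac=True`, meaning the objective returns `(value, gradient)` together; in the TensorFlow version that would be a single `sess.run([sq, sq_grad], {v: x})`. The sketch below uses a hypothetical NumPy stand-in, `value_and_grad`, so it runs without TensorFlow.

```python
import numpy as np
from scipy.optimize import basinhopping


def value_and_grad(p):
    """Hypothetical NumPy stand-in returning (objective, gradient) from one call.

    In the TensorFlow version this body would be a single
    sess.run([sq, sq_grad], {v: p}) instead of two separate runs.
    """
    x, y = p
    f1 = y - x * x
    f2 = x - (y - 2) ** 2 + 1.1
    value = f1 * f1 + f2 * f2
    grad = np.array([-4 * x * f1 + 2 * f2, 2 * f1 - 4 * (y - 2) * f2])
    # SciPy works in float64; cast in case the values come from a float32 graph
    return float(value), np.asarray(grad, dtype=np.float64)


init_value = np.array([-1.3, 2.0])
# jac=True tells the local minimizer that the function returns (value, gradient)
o = basinhopping(value_and_grad, x0=init_value, T=10.0, niter=200,
                 minimizer_kwargs={"jac": True})
print(o.x, o.fun)
```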