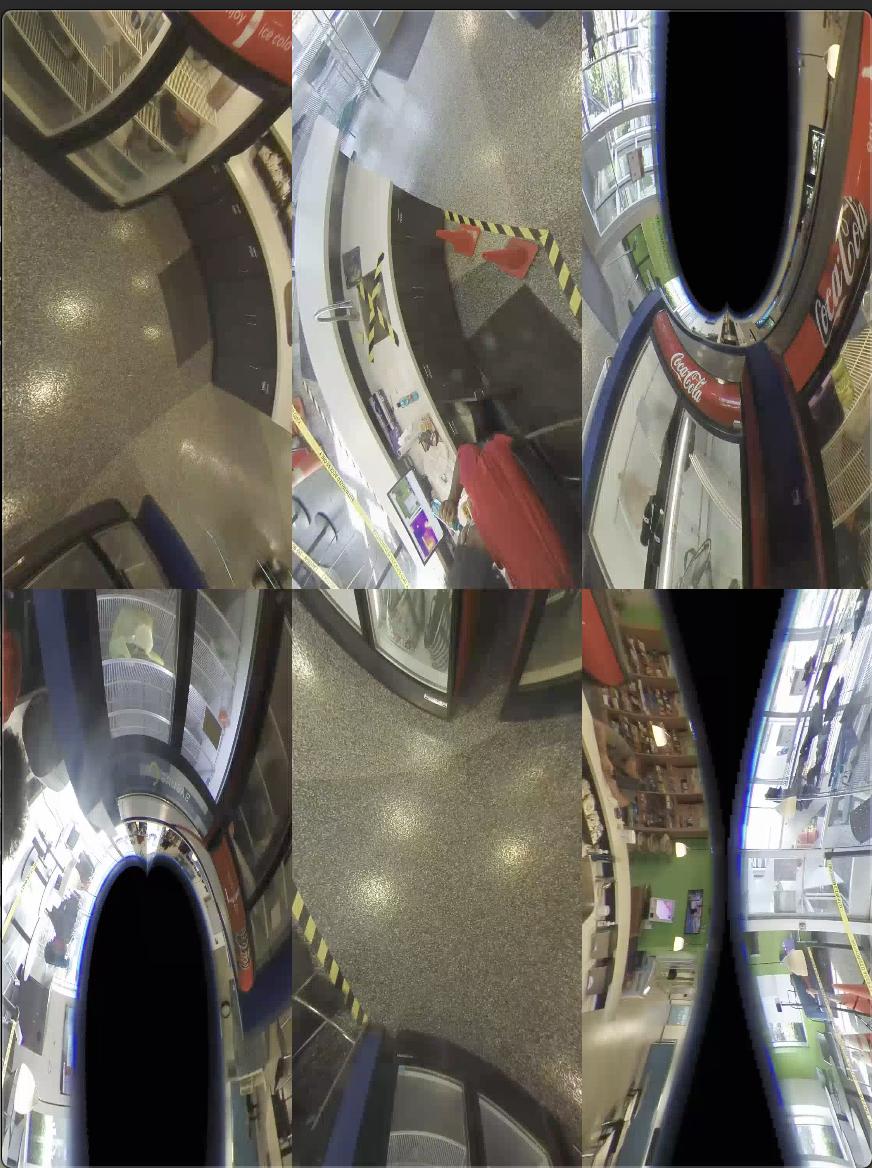

Let's say I have video from an IP-camera that has a 180 degree or 360 degree fisheye lens and I want to dewarp the image in some way. Ideally I would be able to select some rectangular area of the input image and dewarp that into a "normal" looking output video, but it would also be acceptable to dewarp the the video into some sort of Equirectangular or Equi-Angular Cubemap projection. The input video looks like this

I'm aware of two filters that might be used for this

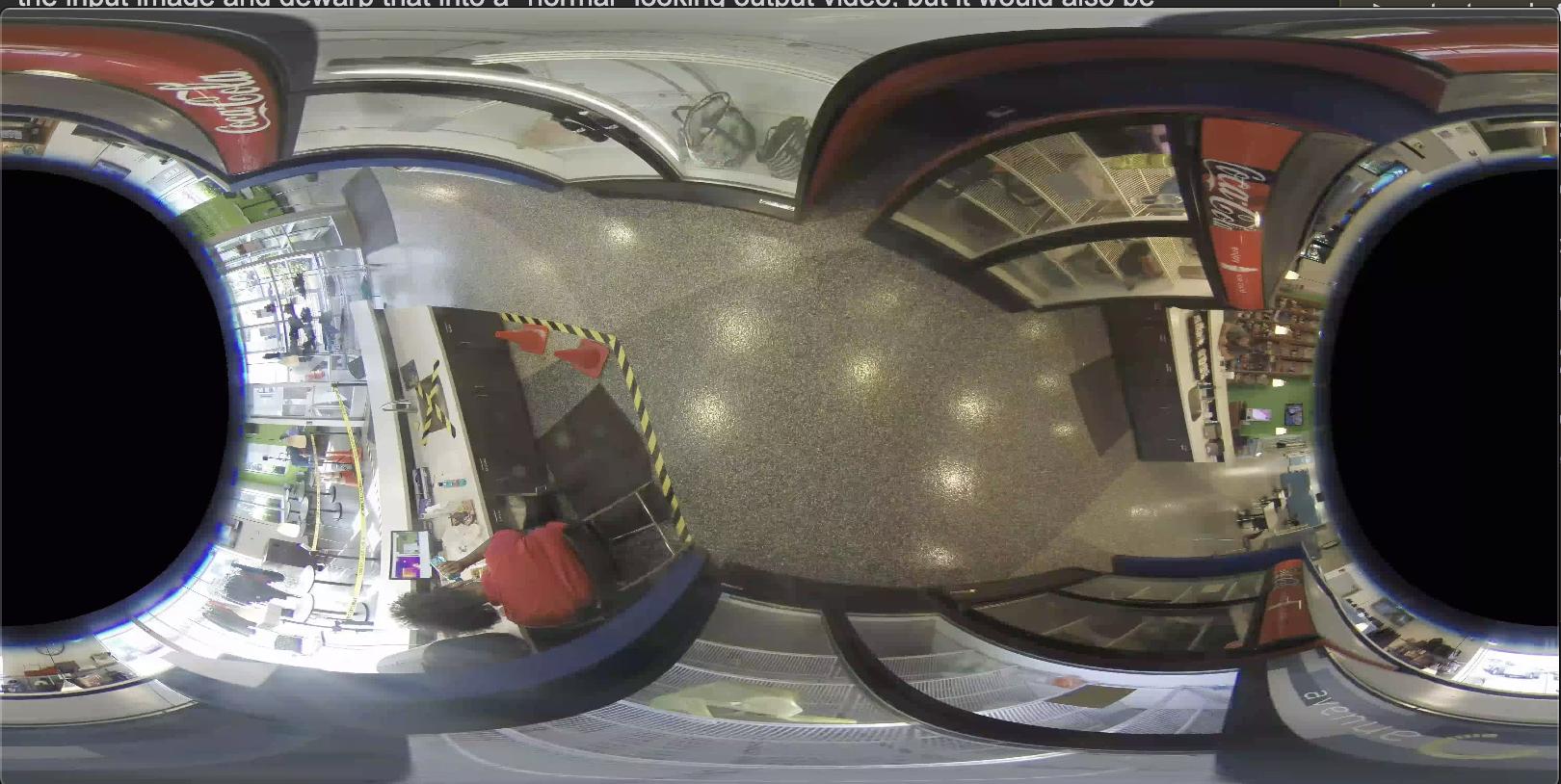

lenscorrectfilter - I think that this is on the right course but all of the example that I can find with this filter are only for "minor" fisheye lenses and I can't seem to get this to work correctly for videos with 360 degree fisheye lenses, it simply doesn't dewarp enough.v360filter. I thought that this must be the correctly filter but it seems that it's intended for 360 videos and not 360 degree fisheye lenses? I didn't know that there was a difference but I can't get it to work. When I try to take my input video and map it through an equirectangular projection I get some odd output like this

I've tried a dozen or so different combinations of parameters, but none of them give me the output that I want, which is a single dewarped image. Can someone help me with the filter graph parameters for this filter?

Is there something that I'm missing? Is either of these filters the correct way forward?

EDIT -

I've been experimenting with the v360 filter, and I think I've gotten closer. What I want to do is map a fisheye input to an equirectangular output, so I've tried this:

    ffmpeg -i input.mp4 -vf v360=fisheye:equirect:id_fov=360 output.mp4

This should mean that my input is a fisheye lens with a diagonal field of view of 360 degrees and that I want my output to be an equirectangular projection, but this is what I get
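For comparison, the same v360 mapping can also be written with named options and with the input field of view given per axis (`ih_fov`, `iv_fov`) instead of as a diagonal (`id_fov`). The sketch below only builds and prints two such command lines, one for an equirectangular output and one for a flat view of a sub-region. The 180-degree input value, the 90-degree output view, the yaw/pitch of 0, and the output file names are all assumptions for illustration, not settings known to fix the output above.

```shell
#!/bin/sh
# Sketch only: the FOV values below are assumptions, not measured from the camera.
IN_FOV=180   # assumed horizontal and vertical FOV of the fisheye input, in degrees

# Variant 1: fisheye -> equirectangular, input FOV given per axis (ih_fov/iv_fov)
EQUIRECT="v360=input=fisheye:output=equirect:ih_fov=${IN_FOV}:iv_fov=${IN_FOV}"

# Variant 2: fisheye -> flat (rectilinear) view of a sub-region;
# yaw/pitch aim the virtual camera, h_fov/v_fov set the output view angle
FLAT="v360=input=fisheye:output=flat:ih_fov=${IN_FOV}:iv_fov=${IN_FOV}:yaw=0:pitch=0:h_fov=90:v_fov=90"

# Print the commands instead of running them, so the filter strings can be checked first
echo "ffmpeg -i input.mp4 -vf \"$EQUIRECT\" output_equirect.mp4"
echo "ffmpeg -i input.mp4 -vf \"$FLAT\" output_flat.mp4"
```

Changing `IN_FOV`, `yaw`, and `pitch` and re-running the printed command is one way to see how each parameter moves the result.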