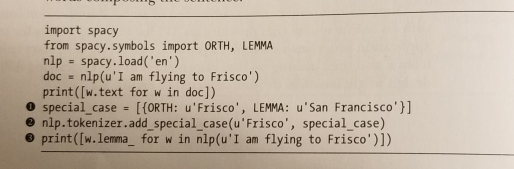

I'm following an example from Chapter #2 in the book: Natural Language Processing with Python and spaCy by Yuli Vasiliev 2020

The example is suppose to produce the lemmatization output:

['I', 'am' , 'flying' , 'to', 'Frisco']

['-PRON-', 'be' , 'fly' , 'to', 'San Francisco']

I get the following error:

nlp.tokenizer.add_special_case(u'Frisco', sf_special_case)

File "spacy\tokenizer.pyx", line 601, in spacy.tokenizer.Tokenizer.add_special_case

File "spacy\tokenizer.pyx", line 589, in spacy.tokenizer.Tokenizer._validate_special_case

ValueError: [E1005] Unable to set attribute 'LEMMA' in tokenizer exception for 'Frisco'. Tokenizer exceptions are only allowed to specify ORTH and NORM.

Could someone please advise for a workaround? I'm not sure if SpaCy version 3.0.3 was changed to no longer allow LEMMA to be part of tokenizer exception? Thanks!

pip install spacy==2.2.4orpip install spacy==2.3.5. – Sausage