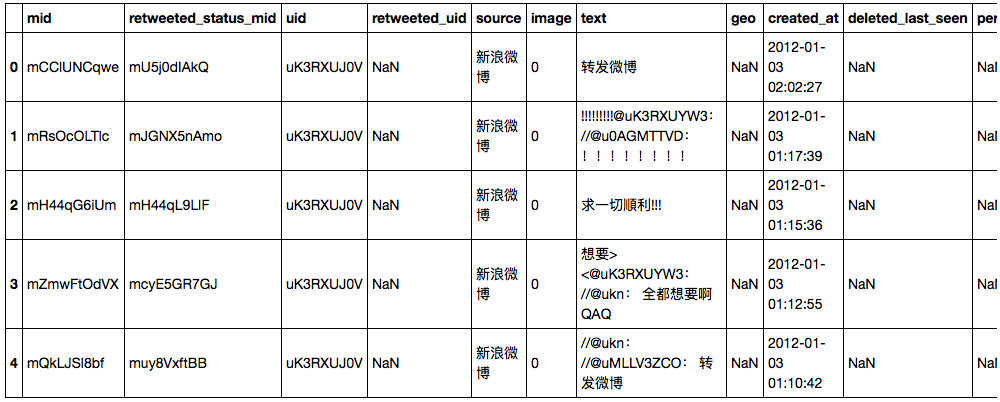

I am trying to load a dataset into pandas and cannot seem to get past step 1. I am new, so please forgive me if this is obvious; I have searched previous topics and not found an answer. The data is mostly in Chinese characters, which may be the issue.

The .csv is very large and can be found here: http://weiboscope.jmsc.hku.hk/datazip/ (I am using week 1).

In my code below, I show the three encodings I tried, along with an attempt to detect which encoding the file actually uses:

    import pandas
    import chardet
    import os

    # this is what I tried to start
    data = pandas.read_csv('week1.csv', encoding="utf-8")
    # spits out error: UnicodeDecodeError: 'utf-8' codec can't decode byte 0x9a in position 69: invalid start byte

    # Code to check encoding -- this spits out ascii
    bytes = min(32, os.path.getsize('week1.csv'))
    raw = open('week1.csv', 'rb').read(bytes)
    chardet.detect(raw)

    # so I tried this! it also fails, which isn't that surprising since I don't know how you'd do Chinese chars in ascii anyway
    data = pandas.read_csv('week1.csv', encoding="ascii")
    # spits out error: UnicodeDecodeError: 'ascii' codec can't decode byte 0xe6 in position 0: ordinal not in range(128)

    # for god knows what reason this allows me to load data into pandas, but definitely not correct encoding because when I print out the first 5 lines it's gibberish instead of Chinese chars
    data = pandas.read_csv('week1.csv', encoding="latin1")
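For future readers, the three results above are probably consistent with each other. Below is a minimal sketch of one plausible reading, using a made-up two-line stand-in for the file. The header names, the row contents, and the position of the stray byte are assumptions for illustration, not taken from week1.csv.

```python
# Hypothetical stand-in for the start of the file: an ASCII-only header row,
# then a data row containing UTF-8 Chinese text followed by one stray byte.
header = b"mid,retweeted_status_mid,uid,text\n"
row = b"1,,42," + "微博".encode("utf-8") + b"\x9a\n"
data = header + row

# 1) A 32-byte sample only reaches into the header, so it is pure ASCII.
#    A detector that sees nothing but these bytes has no reason to guess otherwise.
sample = data[:32]
sample_is_ascii = sample.isascii()

# 2) Strict UTF-8 decoding fails as soon as it meets the stray 0x9a byte.
try:
    data.decode("utf-8")
    utf8_failed = False
except UnicodeDecodeError:
    utf8_failed = True

# 3) latin1 assigns a character to every one of the 256 byte values, so it
#    never raises. Each 3-byte UTF-8 character turns into 3 unrelated characters.
mojibake = "微博".encode("utf-8").decode("latin1")

# The bytes themselves are preserved, so the wrong decoding can be undone.
recovered = mojibake.encode("latin1").decode("utf-8")
```

If this reading is right, the file is mostly UTF-8 with a few invalid bytes, the `ascii` guess comes from sampling too little of it, and `latin1` "works" only in the sense that it cannot fail.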

Any help would be greatly appreciated!

EDIT: The answer provided by @Kristof does in fact work, as does the program a colleague of mine put together yesterday:

    import csv
    import pandas as pd

    def clean_weiboscope(file, nrows=0):
        res = []
        with open(file, 'r', encoding='utf-8', errors='ignore') as f:
            reader = csv.reader(f)
            for i, row in enumerate(f):
                row = row.replace('\n', '')
                if nrows > 0 and i > nrows:
                    break
                if i == 0:
                    headers = row.split(',')
                else:
                    res.append(tuple(row.split(',')))
        df = pd.DataFrame(res)
        return df

    my_df = clean_weiboscope('week1.csv', nrows=0)
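Two details of this program may matter to anyone reusing it: `errors='ignore'` silently drops any bytes that are not valid UTF-8, and splitting on `,` does not respect quoted fields. The sketch below illustrates both with made-up sample lines; whether the Weiboscope files actually contain quoted commas is an assumption I have not checked.

```python
import csv
import io

# errors="ignore" drops the invalid byte; errors="replace" keeps a visible
# U+FFFD marker in its place so the damage can be found later.
raw = b"1,ab\x9acd\n"
ignored = raw.decode("utf-8", errors="ignore")
replaced = raw.decode("utf-8", errors="replace")

# Hypothetical row whose middle field is quoted and contains a comma.
line = '7,"hello, world",2012-01-01\n'

# Plain str.split treats the comma inside the quotes as a separator.
naive_fields = line.replace("\n", "").split(",")

# csv.reader parses the quoted field as a single value.
csv_fields = next(csv.reader(io.StringIO(line)))
```

Here `naive_fields` has four entries while `csv_fields` has three, so iterating over `reader` rather than over `f` would be the safer choice if the data uses quoting.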

I also wanted to add for future searchers that this is the Weiboscope open data for 2012.

pd.read_csv(...., converters={'text': your_func}) – Coplin
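To make the suggestion above concrete, here is a minimal sketch of the `converters` argument. The `text` column name and `your_func` come from the comment; the sample data and the body of `your_func` are hypothetical.

```python
import io

import pandas as pd


def your_func(value):
    # Hypothetical cleanup: pandas passes each cell of the column as a string.
    return value.strip()


# Made-up two-row sample standing in for the real file.
sample = io.StringIO("mid,text\n1,  微博  \n2, hello\n")

# converters maps a column label to a function applied to every cell in it.
df = pd.read_csv(sample, converters={"text": your_func})
texts = df["text"].tolist()
```

This only helps once the file can be decoded at all, since converters run on already-decoded strings.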