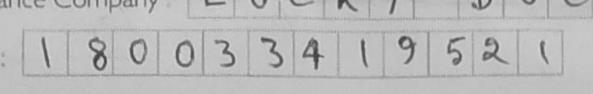

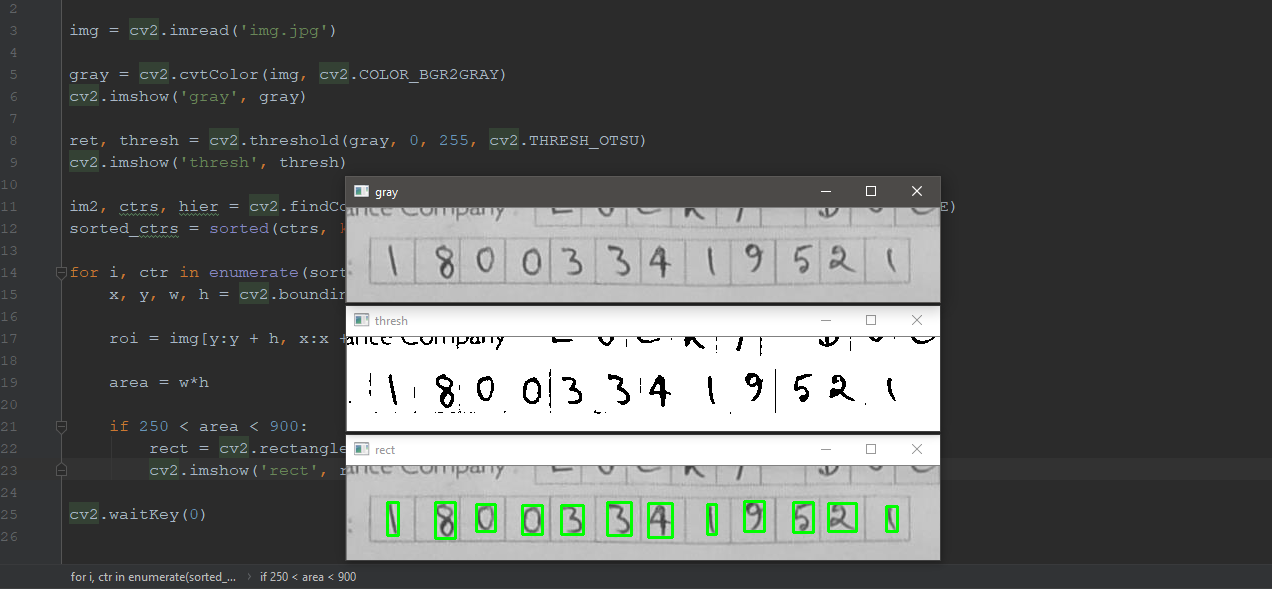

I have images like these, from which I only want to extract the characters.

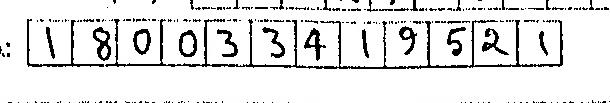

I binarize the image as follows, which gives the result below:

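```python
import cv2

img = cv2.imread('the_image.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
# Adaptive mean threshold: 11x11 neighbourhood, constant 9 subtracted from the local mean
thresh = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_MEAN_C,
                               cv2.THRESH_BINARY, 11, 9)
```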
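Because the photos come from different phones, a fixed `blockSize` of 11 covers a different fraction of a character in each image. A minimal sketch, assuming you want the threshold neighbourhood to scale with image width: the helper `scaled_block_size` and its `fraction=0.01` default are hypothetical tuning choices, not values from the question. `cv2.adaptiveThreshold` requires an odd `blockSize` greater than 1, which the helper enforces.

```python
def scaled_block_size(image_width, fraction=0.01, minimum=3):
    """Return an odd adaptiveThreshold blockSize proportional to image width.

    Hypothetical helper: `fraction` and `minimum` are assumed tuning values.
    """
    size = max(minimum, int(round(image_width * fraction)))
    # adaptiveThreshold needs an odd blockSize > 1, so bump even sizes up by one
    if size % 2 == 0:
        size += 1
    return size


# Usage with the pipeline above:
# block = scaled_block_size(gray.shape[1])
# thresh = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_MEAN_C,
#                                cv2.THRESH_BINARY, block, 9)
```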

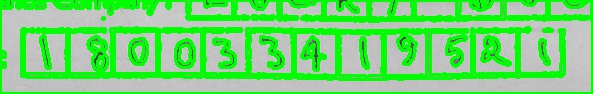

Then I find contours on this image:

```python
# findContours returns (image, contours, hierarchy) in OpenCV 3.x but
# (contours, hierarchy) in 4.x; [-2] picks the contours in both
cnts = cv2.findContours(thresh.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)[-2]
cnts = sorted(cnts, key=cv2.contourArea, reverse=True)

for contour in cnts[:2000]:
    x, y, w, h = cv2.boundingRect(contour)
    aspect_ratio = h / w
    area = cv2.contourArea(contour)
    cv2.drawContours(img, [contour], -1, (0, 255, 0), 2)
```

This gives:

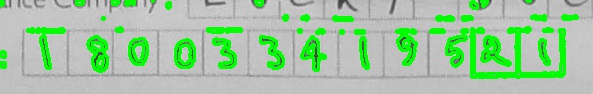

I need a way to filter the contours so that only the characters are selected, so that I can find their bounding boxes and extract the ROIs.
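For the ROI-extraction step, it usually helps to put the character boxes in reading order before cropping. A minimal sketch, assuming boxes are `(x, y, w, h)` tuples as returned by `cv2.boundingRect`; `reading_order` and its `row_tolerance` value are hypothetical. Rows are grouped by a fraction of the median box height rather than a pixel offset, so the grouping does not depend on image resolution.

```python
def reading_order(boxes, row_tolerance=0.5):
    """Sort (x, y, w, h) boxes top-to-bottom, then left-to-right.

    Boxes whose top edges differ by less than row_tolerance * median height
    are treated as one text row. row_tolerance is an assumed value.
    """
    if not boxes:
        return []
    heights = sorted(h for _, _, _, h in boxes)
    median_h = heights[len(heights) // 2]
    rows = []
    for box in sorted(boxes, key=lambda b: b[1]):
        # Join the current row if this box's top edge is close to the row's
        # first box; otherwise start a new row
        if rows and abs(box[1] - rows[-1][0][1]) < row_tolerance * median_h:
            rows[-1].append(box)
        else:
            rows.append([box])
    # Within each row, order boxes left-to-right by x
    return [b for row in rows for b in sorted(row, key=lambda b: b[0])]
```

Each ROI can then be cut out with NumPy slicing on the OpenCV image (rows first): `rois = [img[y:y + h, x:x + w] for x, y, w, h in reading_order(boxes)]`.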

I could filter the contours by area, but the resolution of the source images is not consistent: they are taken with mobile phone cameras.
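One way to make a size filter independent of pixel resolution is to express the limits as fractions of the image size instead of absolute pixel areas. A minimal sketch, assuming `(x, y, w, h)` boxes and the image height in pixels; `filter_char_boxes` and every threshold default are hypothetical values to tune. This removes the dependence on resolution only; it still assumes the form fills a roughly similar portion of each photo.

```python
def filter_char_boxes(boxes, image_height, min_rel_h=0.02, max_rel_h=0.2,
                      min_aspect=0.4, max_aspect=4.0):
    """Keep (x, y, w, h) boxes that plausibly contain a single character.

    Heights are compared as fractions of the image height, so the same
    thresholds apply to a 640-px and a 4000-px photo of the same form.
    All defaults are assumptions.
    """
    kept = []
    for x, y, w, h in boxes:
        if w == 0:
            continue  # degenerate box; avoids division by zero
        rel_h = h / image_height  # box height as a fraction of image height
        aspect = h / w            # same h/w ratio as in the question
        if min_rel_h <= rel_h <= max_rel_h and min_aspect <= aspect <= max_aspect:
            kept.append((x, y, w, h))
    return kept
```

Scaling both the boxes and the image height by the same factor leaves the result unchanged, which is the property a fixed pixel-area threshold lacks.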

Also, because the borders of the boxes are disconnected, I can't detect the boxes accurately.

Edit:

If I discard boxes with an aspect ratio (h/w) below 0.4, it works to some extent, but I don't know whether it will still work for images at different resolutions.

```python
for contour in cnts[:2000]:
    x, y, w, h = cv2.boundingRect(contour)
    aspect_ratio = h / w
    area = cv2.contourArea(contour)
    # Skip wide, flat contours (e.g. box borders) whose height is under 0.4 * width
    if aspect_ratio < 0.4:
        continue
    print(aspect_ratio)
    cv2.drawContours(img, [contour], -1, (0, 255, 0), 2)
```
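On the resolution question: the h/w ratio does not change when the whole image is resized, whereas area grows with the square of the resize factor. The short check below uses plain arithmetic on a hypothetical 20x40 bounding box (no OpenCV needed) to illustrate this; at very low resolutions, pixel rounding of the contour can still shift the ratio slightly.

```python
def box_stats(w, h):
    """Return (aspect_ratio, area) for a w x h bounding box."""
    return h / w, w * h


base_w, base_h = 20, 40  # hypothetical character box at the original resolution
for scale in (0.5, 1, 2, 4):
    ratio, area = box_stats(base_w * scale, base_h * scale)
    # aspect_ratio stays 2.00 at every scale; area goes 200, 800, 3200, 12800
    print(f"scale={scale}: aspect_ratio={ratio:.2f}, area={area:.0f}")
```

So the 0.4 aspect-ratio cutoff should transfer across resolutions, while an absolute area threshold would have to be scaled (for example, expressed relative to the image area).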

You could use `cv2.RETR_EXTERNAL` in findContours to remove the contours that are outside; however, you get a contour surrounding the whole image, and some numbers touch the boxes (or at least it looks like it), so it may not work. You can try `cv2.RETR_TREE` and go down some hierarchy levels until you get only the numbers. – Murphy
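To follow the suggestion of going down the hierarchy levels: with `cv2.RETR_TREE`, `findContours` also returns a hierarchy in which each row is `[next, previous, first_child, parent]`, with `-1` meaning "none". A minimal sketch of computing each contour's nesting depth from those rows and keeping one level; the helper names are hypothetical, and the depth at which the digits sit (e.g. page, then box, then digit) is an assumption to check on your own images. OpenCV returns the hierarchy as a `(1, N, 4)` array, so `hierarchy[0].tolist()` gives the plain rows used here.

```python
def contour_depths(hierarchy_rows):
    """Nesting depth of each contour: 0 for top-level, 1 for its children, ...

    hierarchy_rows[i] is [next, previous, first_child, parent], as produced by
    cv2.findContours with cv2.RETR_TREE (converted via hierarchy[0].tolist()).
    """
    depths = [None] * len(hierarchy_rows)

    def depth(i):
        # Memoized walk up the parent chain until a top-level contour (parent -1)
        if depths[i] is None:
            parent = hierarchy_rows[i][3]
            depths[i] = 0 if parent < 0 else depth(parent) + 1
        return depths[i]

    return [depth(i) for i in range(len(hierarchy_rows))]


def contours_at_depth(contours, hierarchy_rows, level):
    """Return the contours whose nesting depth equals `level`."""
    depths = contour_depths(hierarchy_rows)
    return [c for c, d in zip(contours, depths) if d == level]


# Usage (level=2 is a guess to verify on real images):
# contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE,
#                                        cv2.CHAIN_APPROX_SIMPLE)[-2:]
# digits = contours_at_depth(contours, hierarchy[0].tolist(), level=2)
```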