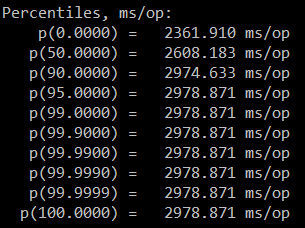

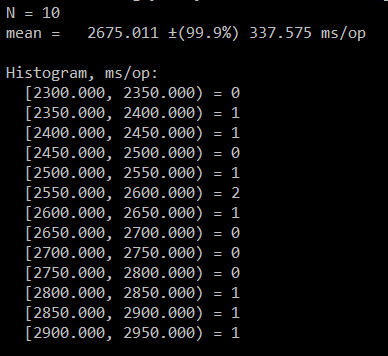

I'm using JMH to benchmark a DOM parser. I got really weird results: the first iteration actually runs faster than the later iterations.

Can anyone explain why this happens? Also, what do the percentiles and the other figures mean, and why do the results only become stable after the third iteration? Does one iteration mean one complete run of the benchmark method? Below is the method I'm running:

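```java
import java.io.File;
import java.util.concurrent.TimeUnit;

import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;

import org.openjdk.jmh.annotations.Benchmark;
import org.openjdk.jmh.annotations.BenchmarkMode;
import org.openjdk.jmh.annotations.Mode;
import org.openjdk.jmh.annotations.OutputTimeUnit;
import org.openjdk.jmh.annotations.Warmup;
import org.w3c.dom.Document;

public class DomParserBenchmark { // class name not shown in the original post

    @Benchmark
    // SingleShotTime: each warmup/measurement iteration times one call of this method.
    @BenchmarkMode(Mode.SingleShotTime)
    @OutputTimeUnit(TimeUnit.MILLISECONDS)
    @Warmup(iterations = 13, time = 1, timeUnit = TimeUnit.MILLISECONDS)
    public void testMethod_no_attr() {
        try {
            // A new factory and builder are created on every call, then the file is parsed.
            File fXmlFile = new File("500000-6.xml");
            DocumentBuilderFactory dbFactory = DocumentBuilderFactory.newInstance();
            DocumentBuilder dBuilder = dbFactory.newDocumentBuilder();
            Document doc = dBuilder.parse(fXmlFile); // result is not used afterwards
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```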
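For reference, here is a rough plain-Java sketch of what a single-shot measurement records: with the default batch size, each iteration times exactly one run of the parsing steps above, and percentile figures such as p(50) summarize the distribution of those per-iteration times. It is only an approximation under stated assumptions: it does not use JMH (no forking, no Blackhole, no warmup control), it parses a hypothetical in-memory document built by `syntheticXml` instead of `500000-6.xml`, and it uses a simple nearest-rank percentile, which may differ from the estimator JMH uses. All class and method names here are made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;

public class SingleShotSketch {

    // Hypothetical stand-in for 500000-6.xml: a flat document of n <item> elements.
    static byte[] syntheticXml(int n) {
        StringBuilder sb = new StringBuilder("<root>");
        for (int i = 0; i < n; i++) {
            sb.append("<item>").append(i).append("</item>");
        }
        return sb.append("</root>").toString().getBytes(StandardCharsets.UTF_8);
    }

    // Same steps as testMethod_no_attr: new factory, new builder, one parse.
    static Document parseOnce(byte[] xml) throws Exception {
        DocumentBuilderFactory dbFactory = DocumentBuilderFactory.newInstance();
        DocumentBuilder dBuilder = dbFactory.newDocumentBuilder();
        return dBuilder.parse(new ByteArrayInputStream(xml));
    }

    // One timed call per iteration, roughly what Mode.SingleShotTime records
    // (without JMH's forking, blackholes or other safeguards).
    static double[] timeIterations(byte[] xml, int iterations) throws Exception {
        double[] millis = new double[iterations];
        for (int i = 0; i < iterations; i++) {
            long start = System.nanoTime();
            Document doc = parseOnce(xml);
            millis[i] = (System.nanoTime() - start) / 1_000_000.0;
            // Touch the result so the parse is not trivially dead code.
            if (doc.getDocumentElement() == null) {
                throw new IllegalStateException("empty document");
            }
        }
        return millis;
    }

    // Nearest-rank percentile over the per-iteration samples.
    // JMH's own percentile estimator may interpolate differently.
    static double percentile(double[] samples, double p) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        double[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length); // 1-based rank
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) throws Exception {
        double[] millis = timeIterations(syntheticXml(100_000), 10);
        for (int i = 0; i < millis.length; i++) {
            System.out.printf("Iteration %d: %.2f ms%n", i + 1, millis[i]);
        }
        for (double p : new double[] {0, 50, 90, 100}) {
            System.out.printf("p(%.1f) = %.2f ms%n", p, percentile(millis, p));
        }
    }
}
```

Running `main` prints one line per iteration followed by a few percentiles; the actual numbers depend entirely on the JVM, JIT state and machine, so the early iterations may look quite different from the later ones.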