I'm adding some batch normalization to my model in order to improve the training time, following some tutorials. This is my model:

model = Sequential()

model.add(Conv2D(16, kernel_size=(3, 3), activation='relu', input_shape=(64,64,3)))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(256, kernel_size=(3, 3), activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())

model.add(Dense(512, activation='relu'))

model.add(Dropout(0.5))

#NB: adding more parameters increases the probability of overfitting!! Try to cut instead of adding neurons!!

model.add(Dense(units=512, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(units=20, activation='softmax'))

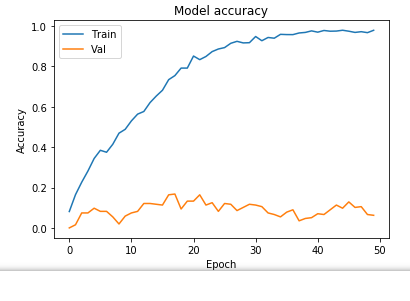

Without batch normalization, i get around 50% accuracy on my data. Adding batch normalization destroys my performance, with a validation accuracy reduced to 10%.

Why is this happening?