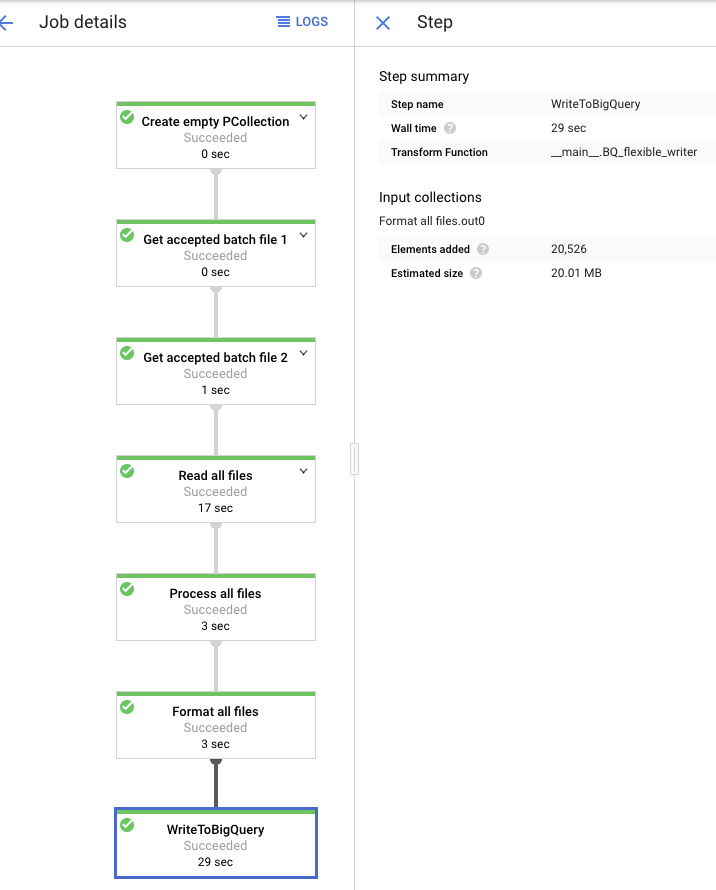

EDIT: I got this to work using beam.io.WriteToBigQuery with the sink experimental option turned on. I actually had it on but my issue was I was trying to "build" the full table reference from two variables (dataset + table) wrapped in str(). This was taking the whole value provider arguments data as a string instead of calling the get() method to get just the value.

OP

I am trying to generate a Dataflow template to then call from a GCP Cloud Function.(For reference, my dataflow job is supposed to read a file with a bunch of filenames in it and then reads all of those from GCS and writes the to BQ). Because of this I need to write it in such a way so that I can use runtime value providers to pass the BigQuery dataset/table.

At the bottom of my post is my code currently, omitting some stuff that's not relevant to the question from it. Pay attention to the BQ_flexible_writer(beam.DoFn) specifically - that's where I am trying to "customise" beam.io.WriteToBigQuery so that it accepts the runtime value providers.

My template generates fine and when I test run the pipeline without supplying runtime variables (relying on the defaults) it succeeds and I see the rows added when looking at the job in the console. However, when checking BigQuery there's no data (tripple checked the dataset/table name is correct in the logs). Not sure where it goes or what logging I can add to understand what's happening to the elements?

Any ideas what's happening here? Or suggestions on how I can write to BigQuery using runtime variables? Can I even call beam.io.WriteToBigQuery the way I've included it in my DoFn or do I have to take the actual code behind beam.io.WriteToBigQuery and work with that?

#=========================================================

class BQ_flexible_writer(beam.DoFn):

def __init__(self, dataset, table):

self.dataset = dataset

self.table = table

def process(self, element):

dataset_res = self.dataset.get()

table_res = self.table.get()

logging.info('Writing to table: {}.{}'.format(dataset_res,table_res))

beam.io.WriteToBigQuery(

#dataset= runtime_options.dataset,

table = str(dataset_res) + '.' + str(table_res),

schema = SCHEMA_ADFImpression,

project = str(PROJECT_ID), #options.display_data()['project'],

create_disposition = beam.io.BigQueryDisposition.CREATE_IF_NEEDED, #'CREATE_IF_NEEDED',#create if does not exist.

write_disposition = beam.io.BigQueryDisposition.WRITE_APPEND #'WRITE_APPEND' #add to existing rows,partitoning

)

# https://cloud.google.com/dataflow/docs/guides/templates/creating-templates#valueprovider

class FileIterator(beam.DoFn):

def __init__(self, files_bucket):

self.files_bucket = files_bucket

def process(self, element):

files = pd.read_csv(str(element), header=None).values[0].tolist()

bucket = self.files_bucket.get()

files = [str(bucket) + '/' + file for file in files]

logging.info('Files list is: {}'.format(files))

return files

# https://mcmap.net/q/1914928/-ways-of-using-value-provider-parameter-in-python-apache-beam

class OutputValueProviderFn(beam.DoFn):

def __init__(self, vp):

self.vp = vp

def process(self, unused_elm):

yield self.vp.get()

class RuntimeOptions(PipelineOptions):

@classmethod

def _add_argparse_args(cls, parser):

parser.add_value_provider_argument(

'--dataset',

default='EDITED FOR PRIVACY',

help='BQ dataset to write to',

type=str)

parser.add_value_provider_argument(

'--table',

default='EDITED FOR PRIVACY',

required=False,

help='BQ table to write to',

type=str)

parser.add_value_provider_argument(

'--filename',

default='EDITED FOR PRIVACY',

help='Filename of batch file',

type=str)

parser.add_value_provider_argument(

'--batch_bucket',

default='EDITED FOR PRIVACY',

help='Bucket for batch file',

type=str)

#parser.add_value_provider_argument(

# '--bq_schema',

#default='gs://dataflow-samples/shakespeare/kinglear.txt',

# help='Schema to specify for BQ')

#parser.add_value_provider_argument(

# '--schema_list',

#default='gs://dataflow-samples/shakespeare/kinglear.txt',

# help='Schema in list for processing')

parser.add_value_provider_argument(

'--files_bucket',

default='EDITED FOR PRIVACY',

help='Bucket where the raw files are',

type=str)

parser.add_value_provider_argument(

'--complete_batch',

default='EDITED FOR PRIVACY',

help='Bucket where the raw files are',

type=str)

#=========================================================

def run():

#====================================

# TODO PUT AS PARAMETERS

#====================================

JOB_NAME_READING = 'adf-reading'

JOB_NAME_PROCESSING = 'adf-'

job_name = '{}-batch--{}'.format(JOB_NAME_PROCESSING,_millis())

pipeline_options_batch = PipelineOptions()

runtime_options = pipeline_options_batch.view_as(RuntimeOptions)

setup_options = pipeline_options_batch.view_as(SetupOptions)

setup_options.setup_file = './setup.py'

google_cloud_options = pipeline_options_batch.view_as(GoogleCloudOptions)

google_cloud_options.project = PROJECT_ID

google_cloud_options.job_name = job_name

google_cloud_options.region = 'europe-west1'

google_cloud_options.staging_location = GCS_STAGING_LOCATION

google_cloud_options.temp_location = GCS_TMP_LOCATION

#pipeline_options_batch.view_as(StandardOptions).runner = 'DirectRunner'

# # If datflow runner [BEGIN]

pipeline_options_batch.view_as(StandardOptions).runner = 'DataflowRunner'

pipeline_options_batch.view_as(WorkerOptions).autoscaling_algorithm = 'THROUGHPUT_BASED'

#pipeline_options_batch.view_as(WorkerOptions).machine_type = 'n1-standard-96' #'n1-highmem-32' #'

pipeline_options_batch.view_as(WorkerOptions).max_num_workers = 10

# [END]

pipeline_options_batch.view_as(SetupOptions).save_main_session = True

#Needed this in order to pass table to BQ at runtime

pipeline_options_batch.view_as(DebugOptions).experiments = ['use_beam_bq_sink']

with beam.Pipeline(options=pipeline_options_batch) as pipeline_2:

try:

final_data = (

pipeline_2

|'Create empty PCollection' >> beam.Create([None])

|'Get accepted batch file 1/2:{}'.format(OutputValueProviderFn(runtime_options.complete_batch)) >> beam.ParDo(OutputValueProviderFn(runtime_options.complete_batch))

|'Get accepted batch file 2/2:{}'.format(OutputValueProviderFn(runtime_options.complete_batch)) >> beam.ParDo(FileIterator(runtime_options.files_bucket))

|'Read all files' >> beam.io.ReadAllFromText(skip_header_lines=1)

|'Process all files' >> beam.ParDo(ProcessCSV(),COLUMNS_SCHEMA_0)

|'Format all files' >> beam.ParDo(AdfDict())

#|'WriteToBigQuery_{}'.format('test'+str(_millis())) >> beam.io.WriteToBigQuery(

# #dataset= runtime_options.dataset,

# table = str(runtime_options.dataset) + '.' + str(runtime_options.table),

# schema = SCHEMA_ADFImpression,

# project = pipeline_options_batch.view_as(GoogleCloudOptions).project, #options.display_data()['project'],

# create_disposition = beam.io.BigQueryDisposition.CREATE_IF_NEEDED, #'CREATE_IF_NEEDED',#create if does not exist.

# write_disposition = beam.io.BigQueryDisposition.WRITE_APPEND #'WRITE_APPEND' #add to existing rows,partitoning

# )

|'WriteToBigQuery' >> beam.ParDo(BQ_flexible_writer(runtime_options.dataset,runtime_options.table))

)

except Exception as exception:

logging.error(exception)

pass