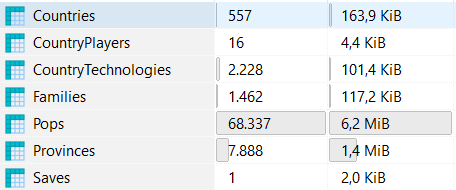

I have a Save object which has several collections associated. Total size of the objects is as follows:

The relations between the objects can be inferred from the mapping below. They appear to be represented correctly in the database, and querying works fine.

```csharp
modelBuilder.Entity<Save>().HasKey(c => c.SaveId).HasAnnotation("DatabaseGenerated", DatabaseGeneratedOption.Identity);
modelBuilder.Entity<Save>().HasMany(c => c.Families).WithOne(x => x.Save).HasForeignKey(x => x.SaveId);
modelBuilder.Entity<Save>().HasMany(c => c.Countries).WithOne(x => x.Save).HasForeignKey(x => x.SaveId);
modelBuilder.Entity<Save>().HasMany(c => c.Provinces).WithOne(x => x.Save).HasForeignKey(x => x.SaveId);
modelBuilder.Entity<Save>().HasMany(c => c.Pops).WithOne(x => x.Save).HasForeignKey(x => x.SaveId);

modelBuilder.Entity<Country>().HasOne(c => c.Save);
modelBuilder.Entity<Country>().HasMany(c => c.Technologies).WithOne(x => x.Country).HasForeignKey(x => new { x.SaveId, x.CountryId });
modelBuilder.Entity<Country>().HasMany(c => c.Players).WithOne(x => x.Country).HasForeignKey(x => new { x.SaveId, x.CountryId });
modelBuilder.Entity<Country>().HasMany(c => c.Families).WithOne(x => x.Country).HasForeignKey(x => new { x.SaveId, x.OwnerId });
modelBuilder.Entity<Country>().HasMany(c => c.Provinces).WithOne(x => x.Owner);
modelBuilder.Entity<Country>().HasKey(c => new { c.SaveId, c.CountryId });

modelBuilder.Entity<Family>().HasKey(c => new { c.SaveId, c.FamilyId });
modelBuilder.Entity<Family>().HasOne(c => c.Save);

modelBuilder.Entity<CountryPlayer>().HasKey(c => new { c.SaveId, c.CountryId, c.PlayerName });
modelBuilder.Entity<CountryPlayer>().HasOne(c => c.Country);
modelBuilder.Entity<CountryPlayer>().Property(c => c.PlayerName).HasMaxLength(100);

modelBuilder.Entity<CountryTechnology>().HasKey(c => new { c.SaveId, c.CountryId, c.Type });
modelBuilder.Entity<CountryTechnology>().HasOne(c => c.Country);

modelBuilder.Entity<Province>().HasKey(c => new { c.SaveId, c.ProvinceId });
modelBuilder.Entity<Province>().HasMany(c => c.Pops).WithOne(x => x.Province);
modelBuilder.Entity<Province>().HasOne(c => c.Save);

modelBuilder.Entity<Population>().HasKey(c => new { c.SaveId, c.PopId });
modelBuilder.Entity<Population>().HasOne(c => c.Province);
modelBuilder.Entity<Population>().HasOne(c => c.Save);
```

I parse the entire save from a file, so I can't add the collections one by one. After parsing, I have a `Save` with all its associated collections, adding up to 80k objects, none of which are present in the database yet.
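Since everything hangs off a single `Save` root, one way to work with it per entity type (for example, to write each type in its own batch instead of handing the whole graph to `Add` at once) is to flatten the graph first. The sketch below is stdlib-only C# under stated assumptions: the entity classes are trimmed-down stand-ins for the real model, and `FlatSave`, `SaveGraph`, `Flatten` and `AssignSaveId` are hypothetical names. The lists are ordered so that principals come before their dependents, following the foreign keys in the mapping above.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the question's entities, trimmed to the members used here.
public class Save
{
    public int SaveId { get; set; }
    public List<Country> Countries { get; set; } = new();
    public List<Family> Families { get; set; } = new();
    public List<Province> Provinces { get; set; } = new();
    public List<Population> Pops { get; set; } = new();
}

public class Country
{
    public int SaveId { get; set; }
    public int CountryId { get; set; }
    public List<CountryTechnology> Technologies { get; set; } = new();
    public List<CountryPlayer> Players { get; set; } = new();
}

public class CountryTechnology { public int SaveId { get; set; } public string Type { get; set; } = ""; }
public class CountryPlayer { public int SaveId { get; set; } public string PlayerName { get; set; } = ""; }
public class Family { public int SaveId { get; set; } public int FamilyId { get; set; } }
public class Province { public int SaveId { get; set; } public int ProvinceId { get; set; } }
public class Population { public int SaveId { get; set; } public int PopId { get; set; } }

// One flat list per entity type, ordered so principals precede dependents.
public sealed record FlatSave(
    IReadOnlyList<Save> Saves,
    IReadOnlyList<Country> Countries,
    IReadOnlyList<CountryTechnology> Technologies,
    IReadOnlyList<CountryPlayer> Players,
    IReadOnlyList<Family> Families,
    IReadOnlyList<Province> Provinces,
    IReadOnlyList<Population> Pops)
{
    public int Total =>
        Saves.Count + Countries.Count + Technologies.Count + Players.Count
        + Families.Count + Provinces.Count + Pops.Count;
}

public static class SaveGraph
{
    public static FlatSave Flatten(Save save) => new(
        new[] { save },
        save.Countries,
        // Technologies and players are only reachable through their country.
        save.Countries.SelectMany(c => c.Technologies).ToList(),
        save.Countries.SelectMany(c => c.Players).ToList(),
        save.Families,
        save.Provinces,
        save.Pops);

    // Copies a key (e.g. one generated by the database for the Save row)
    // onto every dependent row, since they all carry SaveId in their keys.
    public static void AssignSaveId(FlatSave flat, int saveId)
    {
        foreach (var c in flat.Countries) c.SaveId = saveId;
        foreach (var t in flat.Technologies) t.SaveId = saveId;
        foreach (var p in flat.Players) p.SaveId = saveId;
        foreach (var f in flat.Families) f.SaveId = saveId;
        foreach (var p in flat.Provinces) p.SaveId = saveId;
        foreach (var p in flat.Pops) p.SaveId = saveId;
    }
}
```

Assuming `SaveId` is generated by the database (as the mapping intends), the dependents' `SaveId` values would need to be assigned after the `Save` row is inserted and before the remaining lists are written. How each list is then written (for example `AddRange` plus `SaveChanges` per list, or a bulk-insert library) is left open here.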

When I then call `dbContext.Add(save)`, it takes around 44 seconds, and RAM usage goes up from 100 MB to around 700 MB.

Then, when I call `dbContext.SaveChanges()`, it takes an additional 60 seconds, and RAM usage goes up to 1.3 GB. (I also tried the `BulkSaveChanges()` method from EF Extensions, with no significant difference.)
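To separate the cost of `Add` from the cost of `SaveChanges` in a repeatable way, a small stdlib-only helper can time each phase and sample memory before and after it. This is a sketch: `PhaseResult` and `PhaseProfiler` are hypothetical names, and `GC.GetTotalMemory` covers only the managed heap, so its figure will not match a Task Manager reading exactly; `Environment.WorkingSet` is the closer process-level number.

```csharp
using System;
using System.Diagnostics;

// Result of timing one phase; the type is illustrative, not from any library.
public sealed record PhaseResult(string Name, TimeSpan Elapsed, long ManagedBytesDelta, long WorkingSetDelta);

public static class PhaseProfiler
{
    // Times a single phase and samples memory before and after it.
    public static PhaseResult Measure(string name, Action phase)
    {
        // Force a collection first so garbage from earlier work does not
        // inflate the baseline.
        long managedBefore = GC.GetTotalMemory(forceFullCollection: true);
        long workingSetBefore = Environment.WorkingSet;

        var stopwatch = Stopwatch.StartNew();
        phase();
        stopwatch.Stop();

        // No forced collection here: the figure includes both objects still
        // referenced after the phase and garbage not yet collected.
        long managedAfter = GC.GetTotalMemory(forceFullCollection: false);
        long workingSetAfter = Environment.WorkingSet;

        return new PhaseResult(name, stopwatch.Elapsed,
            managedAfter - managedBefore, workingSetAfter - workingSetBefore);
    }
}
```

In the controller this would wrap each call separately, e.g. `PhaseProfiler.Measure("Add", () => _db.Saves.Add(save))` followed by `PhaseProfiler.Measure("SaveChanges", () => _db.SaveChanges())`.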

What is going on here? Why does it take so long and use so much memory? The actual upload to the database only takes about the last 5 seconds.

PS: I also tried disabling change detection with no effect.

PS2: Actual usage and full code as requested in comments:

```csharp
public class HomeController : Controller
{
    private readonly ImperatorContext _db;

    public HomeController(ImperatorContext db)
    {
        _db = db;
    }

    [HttpPost]
    [RequestSizeLimit(200000000)]
    public async Task<IActionResult> UploadSave(List<IFormFile> files)
    {
        // [...]

        await using (var stream = new FileStream(filePath, FileMode.Open))
        {
            var save = ParadoxParser.Parse(stream, new SaveParser());

            if (_db.Saves.Any(s => s.SaveKey == save.SaveKey))
            {
                response = "The save you uploaded already exists in the database.";
            }
            else
            {
                _db.Saves.Add(save);
            }

            _db.BulkSaveChanges();
        }

        // [...]
    }
}
```

`BulkSaveChanges()` – Cancellate

`save` object, I can't loop through it, as there is only one object at the top. – Painful