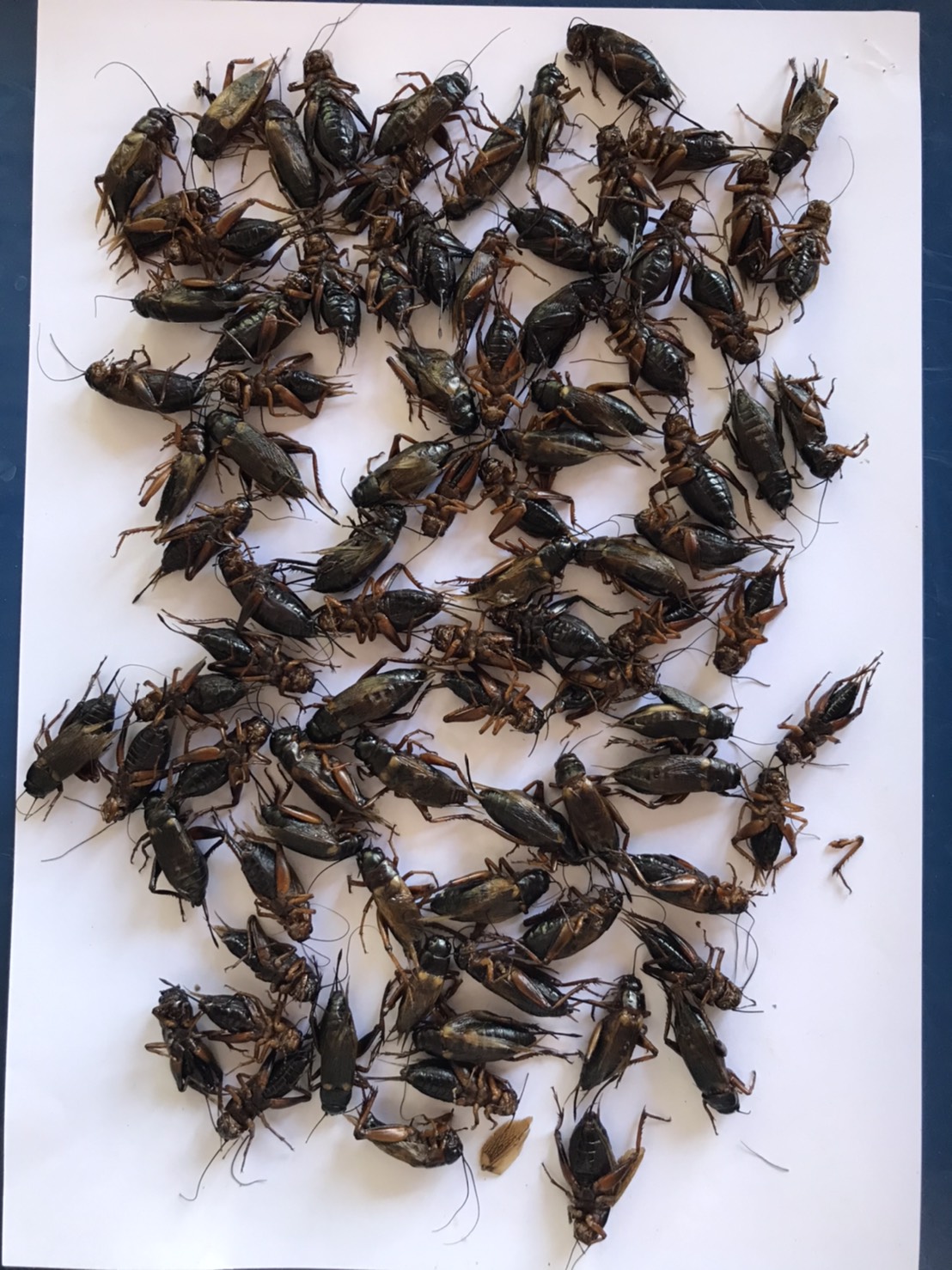

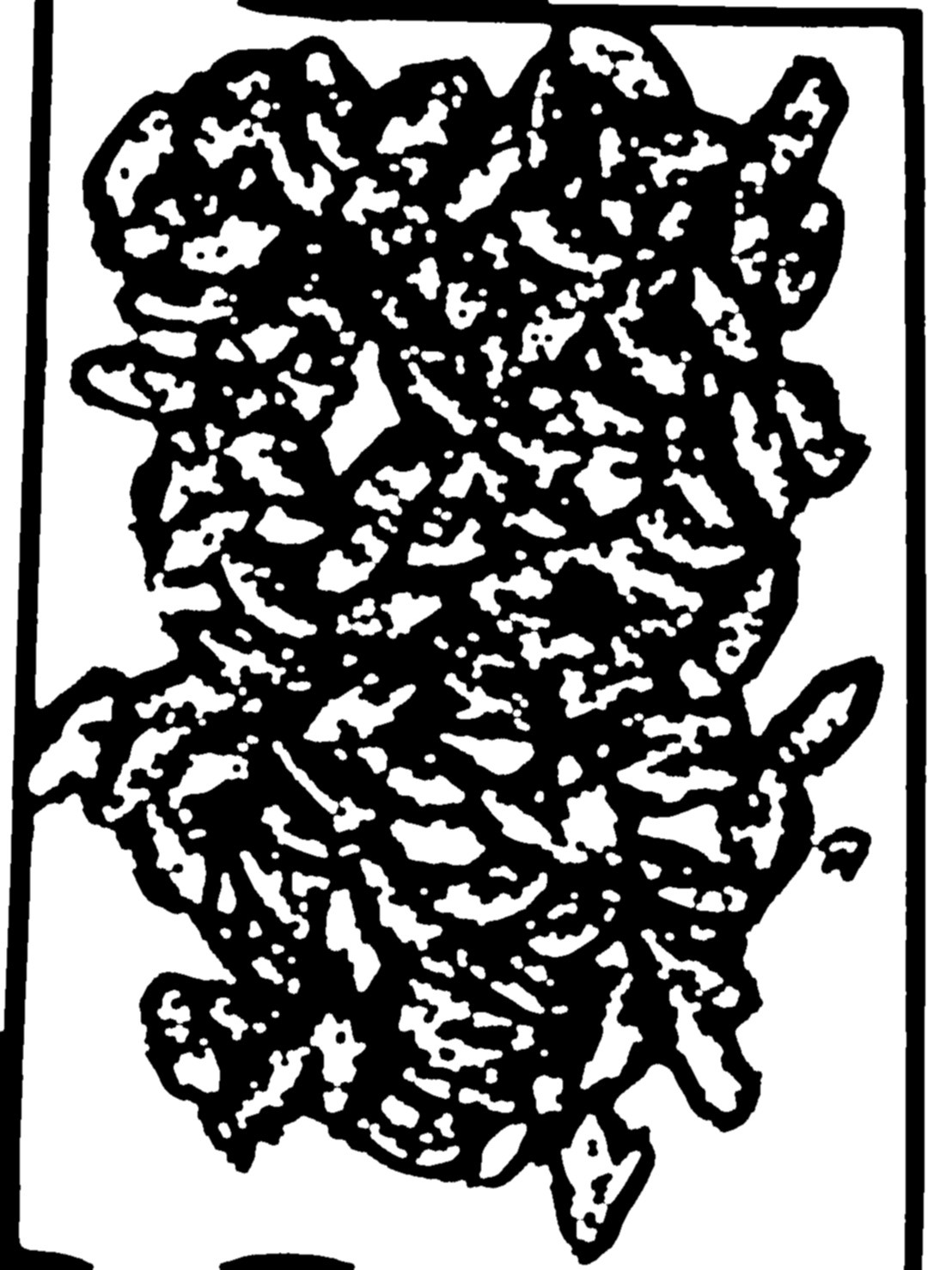

I'm trying to count the crickets (insects) in a group using image processing with the OpenCV library. An accurate count would help farmers when they sell their crickets. The photo was taken with a smartphone. Unfortunately, the results were not as expected: most of the crickets overlap one another, so my code can't separate them into individuals, and the count comes out wrong.

Which method should I apply to this problem? Is there something wrong with my code?


And here is my code.

    import cv2
    import numpy as np

    img = cv2.imread("c1.jpg", 1)
    roi = img[0:1500, 0:1100]

    # Grayscale, blur, then inverse adaptive threshold
    gray = cv2.cvtColor(roi, cv2.COLOR_BGR2GRAY)
    gray_blur = cv2.GaussianBlur(gray, (15, 15), 0)
    thresh = cv2.adaptiveThreshold(gray_blur, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                   cv2.THRESH_BINARY_INV, 11, 1)

    # Morphological closing
    kernel = np.ones((1, 1), np.uint8)
    closing = cv2.morphologyEx(thresh, cv2.MORPH_CLOSE, kernel, iterations=10)

    # Find the outer contours of the closed image
    result_img = closing.copy()
    contours, hierarchy = cv2.findContours(result_img, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    # Count every contour that is large enough and draw a fitted ellipse on it
    counter = 0
    for cnt in contours:
        area = cv2.contourArea(cnt)
        if area < 150:
        #if area < 300:
            continue
        counter += 1
        ellipse = cv2.fitEllipse(cnt)
        cv2.ellipse(roi, ellipse, (0, 255, 0), 1)

    # Show the count on the image and in the console
    cv2.putText(roi, "Crickets=" + str(counter), (100, 70), cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 0, 0), 1, cv2.LINE_AA)
    cv2.imshow('ImageOfCrickets', roi)
    #cv2.imshow('ImageOfGray', gray)
    #cv2.imshow('ImageOfGray_blur', gray_blur)
    #cv2.imshow('ImageOfThreshold', thresh)
    #cv2.imshow('ImageOfMorphology', closing)
    print('Crickets = ' + str(counter))

    cv2.waitKey(0)
    cv2.destroyAllWindows()

At the moment I'm using morphological closing, then finding the external contours and fitting an ellipse to each one.