We've built an MS Bot Framework bot that consumes our existing, internal, on-premises APIs during conversations. We'd like to release this bot by dropping a Web Chat Component into the DOM of our existing, internally-facing, on-premises application.

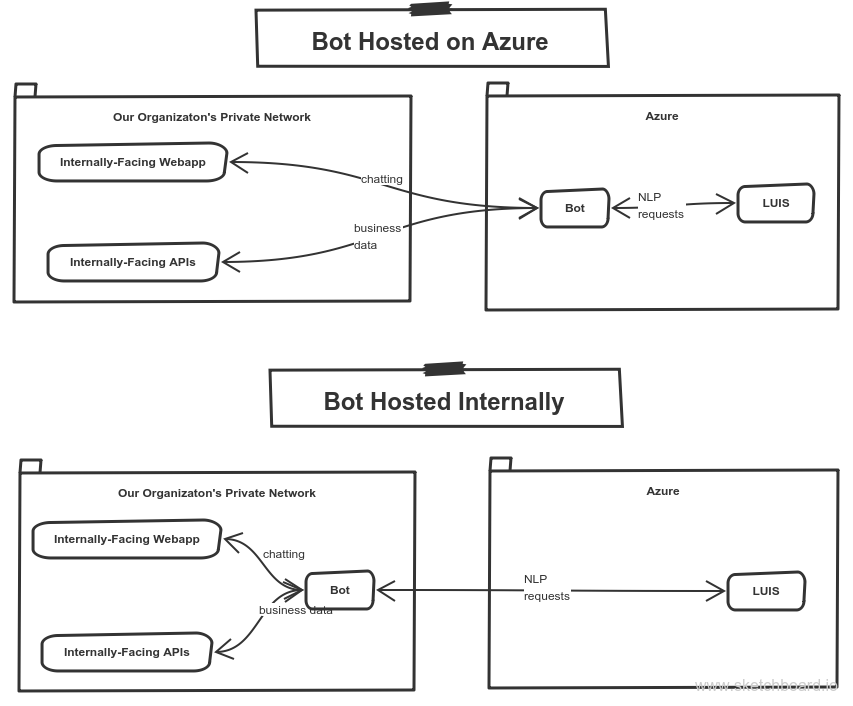

Given our existing architecture, we naturally want to host this bot internally as well, so that we can leverage all of our existing configuration and deployment processes. We understand that the bot will have to communicate with LUIS regardless, which is fine by us: that does not require the more complex setup (larger attack surface, less buy-in from central IT) of Azure connecting directly to our internal business data API.
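To make that constraint concrete: the only outbound hop we are willing to accept is the bot calling LUIS. Below is a minimal sketch of that policy as an egress check, written in TypeScript since the bot is a Node app. The `.corp.example` suffix, the `westus` LUIS region host, and the `hostOf`/`classifyEgress` names are placeholders for illustration, not our actual configuration.

```typescript
// Hypothetical outbound-traffic policy for the on-premises bot host.
// Assumptions: internal services live under ".corp.example" (placeholder suffix),
// and LUIS is reached through a regional endpoint such as westus.api.cognitive.microsoft.com.
const INTERNAL_SUFFIX = ".corp.example";
const EXTERNAL_ALLOWLIST: ReadonlySet<string> = new Set([
  "westus.api.cognitive.microsoft.com", // LUIS regional endpoint; adjust to your region
]);

type Egress = "internal" | "external-allowed" | "blocked";

// Extract the hostname from an absolute URL, skipping optional "user:pass@" userinfo.
// Sketch only: bracketed IPv6 hosts are not handled.
function hostOf(rawUrl: string): string {
  const match = /^[a-z][a-z0-9+.-]*:\/\/(?:[^/?#@]*@)?([^/:?#]+)/i.exec(rawUrl);
  if (match === null) throw new Error(`Not an absolute URL: ${rawUrl}`);
  return match[1].toLowerCase();
}

// Classify a request the bot is about to make.
function classifyEgress(rawUrl: string): Egress {
  const host = hostOf(rawUrl);
  if (host.endsWith(INTERNAL_SUFFIX)) return "internal"; // requires the leading dot
  if (EXTERNAL_ALLOWLIST.has(host)) return "external-allowed";
  return "blocked";
}
```

Under this policy, Web Chat's default Direct Line endpoint (`directline.botframework.com`) lands in `blocked`, which is exactly the hop EDIT 1 asks about.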

I think the diagram above makes this clearer.

Can we achieve what's depicted in the bottom hosting configuration?

EDIT 1: Can we also host Direct Line, or a similar connector, on-premises without writing a custom connector? Additionally, can we chat with our bot over such a connector without writing a custom chat component/widget for the DOM? (The Web Chat component would work just fine as long as it's pointed at our channel.)
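For context on what "a similar connector" would have to do: as far as we understand, Web Chat talks to the bot through the Direct Line 3.0 API, which starts a conversation, posts activities, and reads replies by polling with a watermark (or over a WebSocket stream). The toy, in-memory sketch below models only that bookkeeping. There is no HTTP, authentication, token handling, or streaming, and the class and method names are hypothetical rather than part of any Bot Framework package.

```typescript
// Toy model of the Direct Line 3.0 operations a Web Chat client relies on:
//   POST /v3/directline/conversations                              -> startConversation()
//   POST /v3/directline/conversations/{id}/activities              -> postActivity()
//   GET  /v3/directline/conversations/{id}/activities?watermark=N  -> getActivities()
interface Activity {
  type: "message";
  from: { id: string };
  text: string;
  id?: string;
}

interface ActivitySet {
  activities: Activity[];
  watermark: string; // opaque string to the client; here, the log length
}

// Hypothetical stand-in for the bot: receives one activity, returns its replies.
type BotHandler = (incoming: Activity) => Activity[];

class InMemoryConnector {
  private readonly bot: BotHandler;
  private readonly conversations = new Map<string, Activity[]>();
  private nextConversation = 0;

  constructor(bot: BotHandler) {
    this.bot = bot;
  }

  startConversation(): { conversationId: string } {
    const conversationId = `conv-${this.nextConversation++}`;
    this.conversations.set(conversationId, []);
    return { conversationId };
  }

  postActivity(conversationId: string, activity: Activity): { id: string } {
    const log = this.requireConversation(conversationId);
    const id = `${conversationId}|${log.length}`;
    log.push({ ...activity, id });
    // Relay to the bot synchronously and append its replies to the same ordered log.
    for (const reply of this.bot(activity)) {
      log.push({ ...reply, id: `${conversationId}|${log.length}` });
    }
    return { id };
  }

  // Return everything after the watermark, plus a new watermark for the next poll.
  getActivities(conversationId: string, watermark?: string): ActivitySet {
    const log = this.requireConversation(conversationId);
    const start = watermark === undefined ? 0 : Number(watermark);
    return { activities: log.slice(start), watermark: String(log.length) };
  }

  private requireConversation(conversationId: string): Activity[] {
    const log = this.conversations.get(conversationId);
    if (log === undefined) throw new Error(`Unknown conversation ${conversationId}`);
    return log;
  }
}
```

A client would call `startConversation()` once, then alternate `postActivity()` with `getActivities(id, lastWatermark)`; the watermark keeps each poll from re-reading earlier messages.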

The end goal here is to keep all of our chat traffic on-premises, because this is a data-driven chatbot serving sensitive numbers. It will take less time to redevelop this in another framework that can run wholly on-premises than to get approval from our central IT.

Side Note: I'm aware of the Azure Stack Preview. The minimum hardware requirements (and probably subscription costs too) are extreme overkill. (We're talking about a single Node app, after all.)

This is not a duplicate of the other question, because this one also addresses the key element of hosting the Direct Line connector on-premises, whereas the other question assumed that the connector would still run on Azure.