I'm trying to test ARMA models and am working through the examples provided here:

http://www.statsmodels.org/dev/examples/notebooks/generated/tsa_arma_0.html

I can't tell whether there is a straightforward way to fit a model on a training dataset and then test it on a separate test dataset. As far as I can tell, you have to fit the model on the entire dataset. You can then make in-sample predictions, which use the same data the model was fitted on, or out-of-sample predictions, but those have to start at the end of the training data. What I would like to do instead is fit the model on a training dataset, then run the fitted model over an entirely different dataset that was not used for fitting and get a series of one-step-ahead predictions.

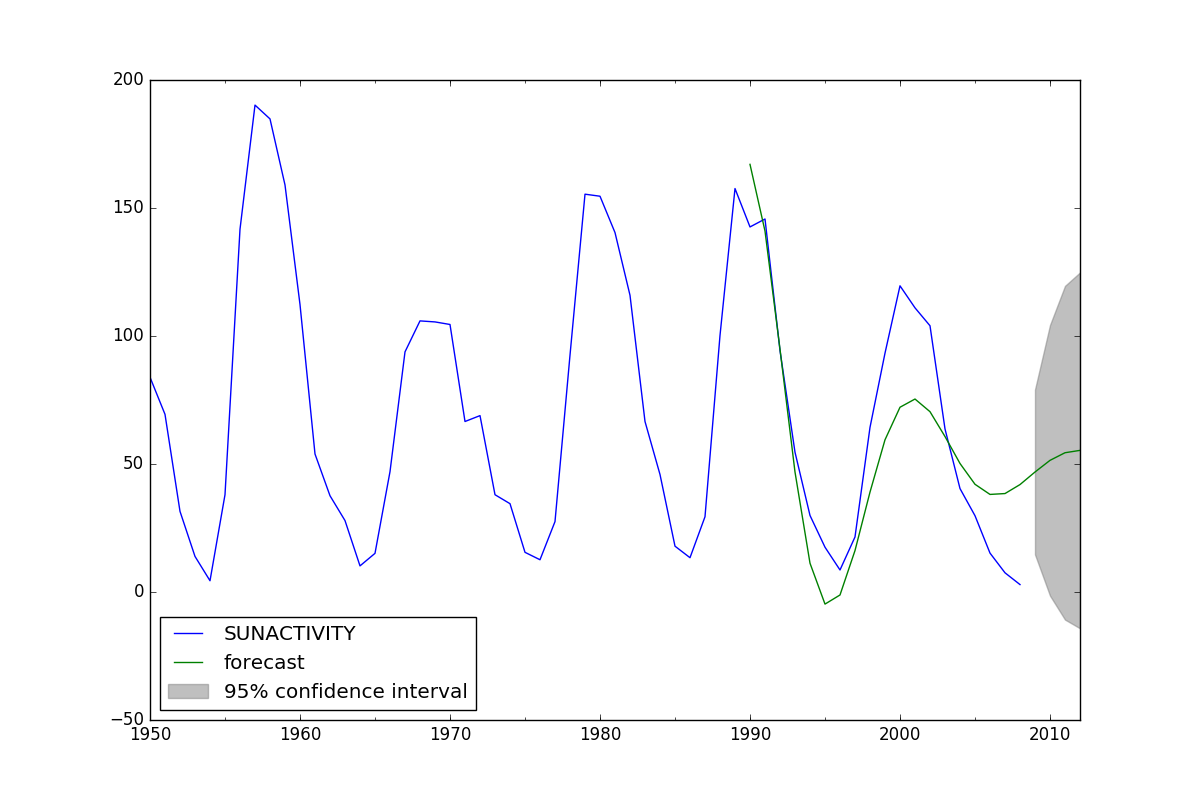

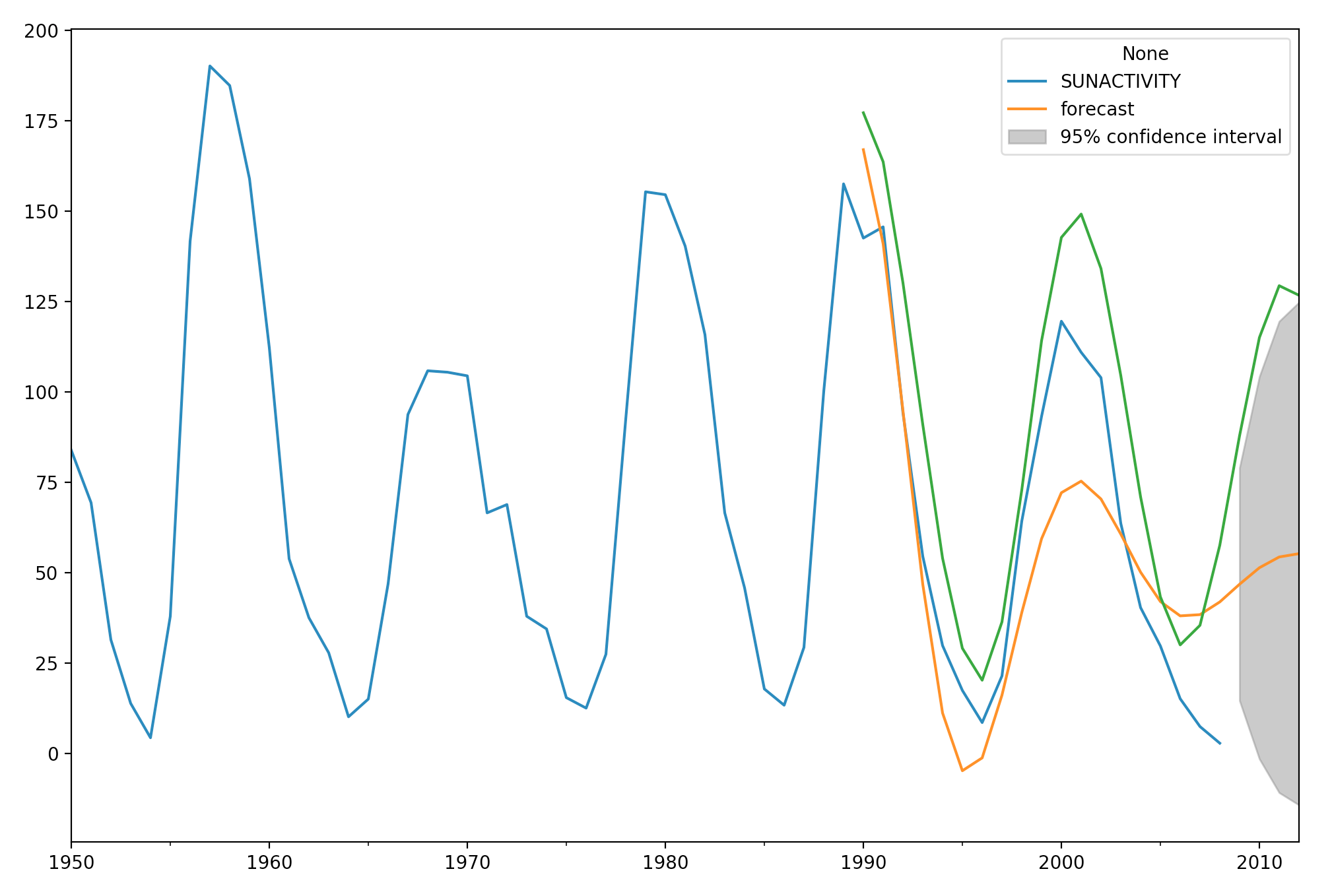

To illustrate the issue, here is abbreviated code from the link above. The model is fitted on data for 1700-2008 and then predicts 1990-2012. The problem is that 1990-2008 was already part of the data used to fit the model, so I think I'm predicting on the same data I trained on. I want a series of one-step-ahead predictions that have no look-ahead bias.

```python
import numpy as np
import pandas
import matplotlib.pyplot as plt
import statsmodels.api as sm

# Annual sunspot counts, indexed by year (1700-2008)
dta = sm.datasets.sunspots.load_pandas().data
dta.index = pandas.Index(sm.tsa.datetools.dates_from_range('1700', '2008'))
dta = dta.drop('YEAR', axis=1)

# Fit an AR(3) model on the full series, as in the linked notebook
arma_mod30 = sm.tsa.ARMA(dta, (3, 0)).fit(disp=False)

# Predict 1990-2012; note that 1990-2008 overlaps the data used for fitting
predict_sunspots = arma_mod30.predict('1990', '2012', dynamic=True)

fig, ax = plt.subplots(figsize=(12, 8))
ax = dta.loc['1950':].plot(ax=ax)
fig = arma_mod30.plot_predict('1990', '2012', dynamic=True, ax=ax, plot_insample=False)
plt.show()
```
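To make the goal concrete, here is a rough sketch of the behaviour I'm after, written by hand in plain NumPy rather than with statsmodels. It is only an illustration under some assumptions: it handles the pure AR(p) case (no MA terms), estimates the coefficients by ordinary least squares instead of maximum likelihood, and uses a synthetic series. The helpers `fit_ar` and `one_step_ahead` are hypothetical names of my own, not part of any library.

```python
import numpy as np


def fit_ar(train, p):
    """Hypothetical helper: least-squares fit of
    y[t] = c + phi_1 * y[t-1] + ... + phi_p * y[t-p] on the training data only."""
    train = np.asarray(train, dtype=float)
    n = len(train)
    # Each row of X is [1, y[t-1], ..., y[t-p]] for t = p .. n-1
    X = np.column_stack(
        [np.ones(n - p)] + [train[p - 1 - i:n - 1 - i] for i in range(p)]
    )
    y = train[p:]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef  # [c, phi_1, ..., phi_p]


def one_step_ahead(coef, history, test):
    """Hypothetical helper: predict every test point from the p actual
    observations just before it, keeping the coefficients frozen."""
    p = len(coef) - 1
    history = np.asarray(history, dtype=float)
    test = np.asarray(test, dtype=float)
    # The last p training values seed the lags for the first test point
    series = np.concatenate([history[-p:], test])
    preds = np.empty(len(test))
    for k in range(len(test)):
        lags = series[k:k + p][::-1]  # most recent observation first
        preds[k] = coef[0] + coef[1:] @ lags
    return preds


# Synthetic AR(3) series (assumed data, standing in for the sunspot counts)
rng = np.random.default_rng(0)
y = np.zeros(400)
for t in range(3, 400):
    y[t] = 0.5 * y[t - 1] - 0.3 * y[t - 2] + 0.1 * y[t - 3] + rng.normal()

train, test = y[:300], y[300:]
coef = fit_ar(train, p=3)                  # parameters come from train only
preds = one_step_ahead(coef, train, test)  # one prediction per test point
```

Each prediction for the test period uses only observations that precede it, and the parameters never see the test data, which is the "no look-ahead bias" property I'm looking for. What I can't work out is how to get the same thing from a fitted statsmodels ARMA model.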