Theory

There is a blog post about the Lucene term index and lookup performance. It explains in detail the complexity of looking up a document by id. The post is quite old, but nothing has changed since then.

Here are some highlights related to your question:

- Lucene is a search engine where the minimum element of retrieval is a text term, which means that binary, numeric and string fields are all represented as strings in the BlockTree terms dictionary.

- In general, the complexity of a lookup depends on the term length: Lucene uses an in-memory prefix-trie index structure to perform a term lookup. Because of the restrictions of real-world hardware and software (avoiding superfluous disk reads and memory overflow for extremely large tries), Lucene uses a BlockTree structure. This means it stores the prefix trie in small chunks on disk and loads only one chunk at a time. This is why it's so important to generate keys in an easy-to-read order. So let's arrange the factors according to the degree of their influence:

- term length: a longer term means more chunks to load

- term pattern: a predictable pattern avoids superfluous reads

- term count: fewer terms mean fewer chunks
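As a rough illustration of the "in-memory index plus one chunk per lookup" idea, here is a deliberately simplified Python model. It is not Lucene's actual BlockTree implementation: the fixed `PREFIX_LEN`, the `TermDictionary` class and the `block_reads` counter are all assumptions made up for this sketch (the real structure chooses prefixes adaptively).

```python
from collections import defaultdict

PREFIX_LEN = 2  # hypothetical fixed prefix length, chosen only for this sketch


class TermDictionary:
    """Toy model: terms grouped into 'on-disk' blocks by shared prefix."""

    def __init__(self, terms):
        blocks = defaultdict(list)
        for term in sorted(terms):
            blocks[term[:PREFIX_LEN]].append(term)
        self.blocks = dict(blocks)      # stands in for chunks stored on disk
        self.index = set(self.blocks)   # stands in for the in-memory prefix index
        self.block_reads = 0            # how many chunks were "loaded"

    def contains(self, term):
        prefix = term[:PREFIX_LEN]
        if prefix not in self.index:
            return False                # rejected by the index, no chunk read
        self.block_reads += 1           # exactly one chunk is loaded
        return term in self.blocks[prefix]


terms = TermDictionary(["id0001", "id0002", "ix0001", "ab0001"])
print(terms.contains("id0002"), terms.block_reads)
print(terms.contains("zz9999"), terms.block_reads)
```

In this model a lookup for a key whose prefix is unknown never touches a chunk, which is the sense in which a predictable key pattern avoids superfluous reads.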

Algorithms and Complexity

Let a term be a single string and let a term dictionary be a large set of terms. If we have a term dictionary and need to know whether a single term is in it, the trie (and the minimal deterministic acyclic finite state automaton (DAFSA) as a subclass) is the data structure that can help us. As for your question, “Why use tries if a hash lookup can do the same?”, here are a few reasons:

- A trie can find a string in O(L) time (where L is the length of the term). This is somewhat faster than a hash table in the worst case (a hash table needs a linear scan when hashes collide, plus a sophisticated hashing algorithm such as MurmurHash3), and comparable to a hash table in the ideal case.

- A hash table can only find dictionary terms that exactly match the term we are looking for, whereas a trie also lets us find terms that differ by a single character, share a prefix, are missing a character, etc.

- A trie keeps its entries ordered by key, so we can enumerate all terms in alphabetical order.

- A trie (and especially a DAFSA) provides a very compact representation of terms with deduplication.
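The points above can be sketched with a minimal in-memory trie in Python. This is only an illustration of the data structure, not how Lucene implements it; the names `Trie`, `contains` and `with_prefix` are invented for the example.

```python
class TrieNode:
    def __init__(self):
        self.children = {}     # char -> TrieNode
        self.is_term = False   # True if a term ends at this node


class Trie:
    def __init__(self, terms=()):
        self.root = TrieNode()
        for term in terms:
            self.insert(term)

    def insert(self, term):
        node = self.root
        for ch in term:
            node = node.children.setdefault(ch, TrieNode())
        node.is_term = True

    def _walk(self, prefix):
        """Follow prefix one character at a time; return the node or None."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return None
        return node

    def contains(self, term):
        node = self._walk(term)
        return node is not None and node.is_term

    def with_prefix(self, prefix=""):
        """Yield every term starting with prefix, in alphabetical order."""
        start = self._walk(prefix)
        if start is None:
            return
        stack = [(start, prefix)]
        while stack:
            node, text = stack.pop()
            if node.is_term:
                yield text
            # Push children in reverse so the smallest character pops first.
            for ch in sorted(node.children, reverse=True):
                stack.append((node.children[ch], text + ch))


trie = Trie(["bath", "bat", "batch", "bar", "cat"])
print(trie.contains("bat"))           # exact match, like a hash lookup
print(list(trie.with_prefix("bat")))  # prefix query, not possible with a plain hash
print(list(trie.with_prefix()))       # all terms in alphabetical order
```

A plain hash table supports only the first of these three calls without scanning every key.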

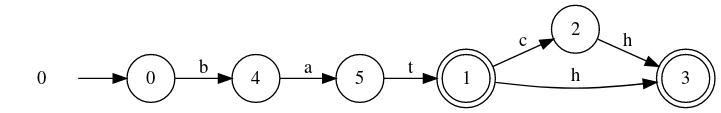

Here is an example of a DAFSA for three terms: bath, bat and batch:

![Example of DAFSA Data Structure]()

For a key lookup, notice that descending a single level in the automaton (or trie) takes constant time, and each time the algorithm descends a level, one character is consumed from the term. We can therefore conclude that finding a term in an automaton (or trie) takes O(L) time.
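To make the O(L) argument concrete, here is a small Python sketch of a hand-built DAFSA for bat, bath and batch. The state numbers are arbitrary labels chosen for this example and may not match the figure exactly; the `steps` counter is added only to show that one transition is followed per character.

```python
# Hand-built minimal DAFSA for "bat", "bath" and "batch".
# "bath" and "batch" share the same final state (5), which is the
# deduplication a plain trie would not give us.
TRANSITIONS = {
    0: {"b": 1},
    1: {"a": 2},
    2: {"t": 3},
    3: {"h": 5, "c": 4},
    4: {"h": 5},
    5: {},
}
ACCEPTING = {3, 5}  # states where a complete term ends


def lookup(term):
    """Return (found, steps), where steps is the number of transitions taken."""
    state, steps = 0, 0
    for ch in term:
        nxt = TRANSITIONS[state].get(ch)  # constant-time step down one level
        if nxt is None:
            return False, steps
        state = nxt
        steps += 1
    return state in ACCEPTING, steps


for term in ["bat", "bath", "batch", "ba", "bathe"]:
    print(term, lookup(term))
```

The number of steps never exceeds the length of the term, regardless of how many terms the automaton holds.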
