I have followed the TensorFlow MNIST Estimator tutorial and I have trained my MNIST model.

It seems to work fine, but when I visualize it in TensorBoard I see something odd: the input shape that the model requires is 100 x 784.

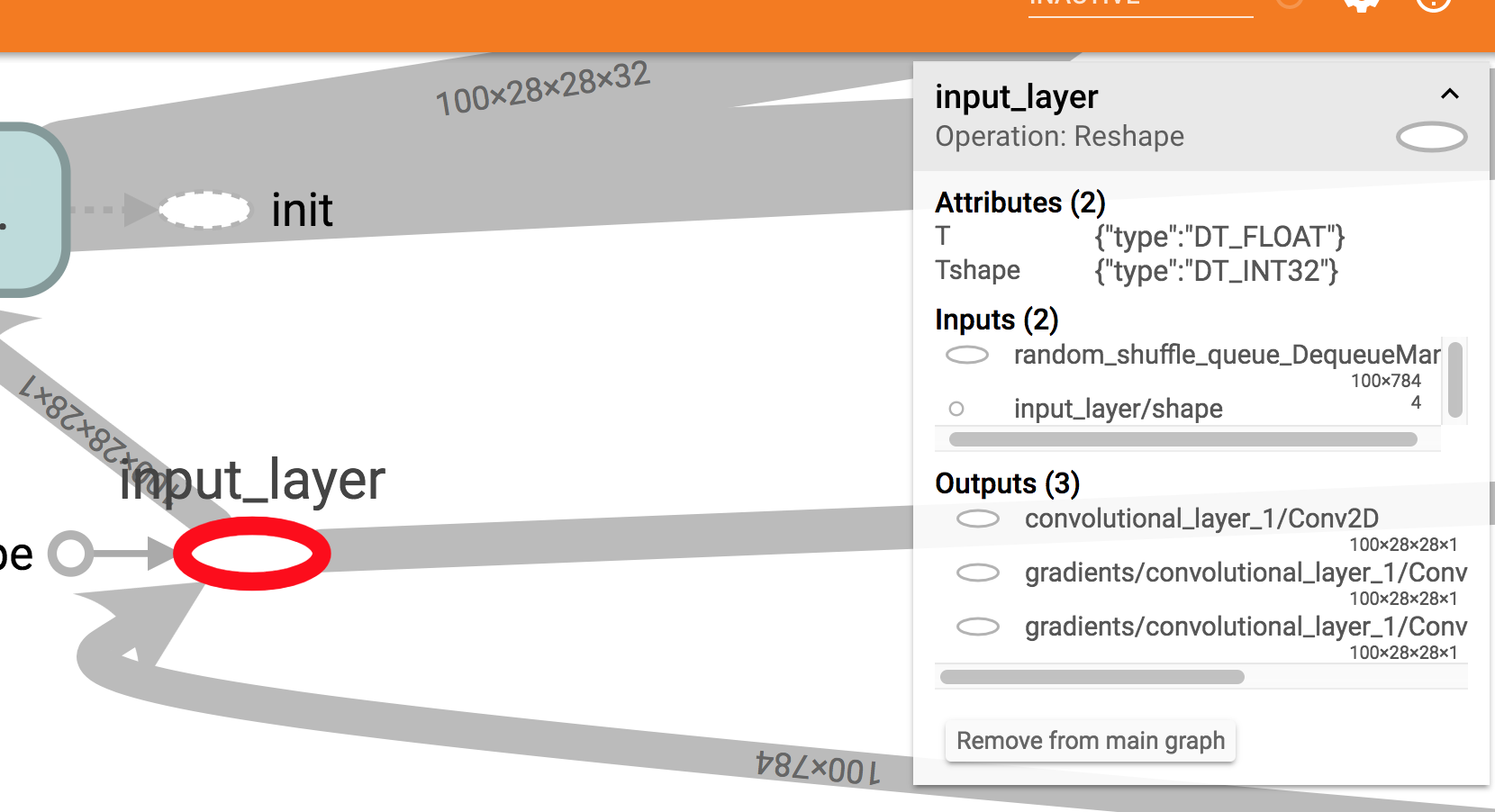

As you can see in the box on the right of the screenshot, the expected input size is 100x784.

I thought I would see ?x784 there.

Now, I did use 100 as the batch size in training, but in the Estimator model function I also specified that the number of input samples is variable, so I expected ? x 784 to be shown in TensorBoard.

    input_layer = tf.reshape(features["x"], [-1, 28, 28, 1], name="input_layer")
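For reference, here is a minimal pure-Python sketch of how a `-1` dimension can be resolved during static shape inference. It is a simplified model, not TensorFlow's actual implementation: `infer_reshape` is a hypothetical helper and `None` stands for the `?` shown in TensorBoard. It suggests why `-1` does not by itself produce a variable batch dimension when the incoming tensor already has a fully known shape.

```python
from math import prod


def infer_reshape(input_shape, target_shape):
    """Resolve a single -1 in target_shape (hypothetical helper).

    None marks a dimension whose size is unknown at graph-construction time.
    """
    if -1 not in target_shape:
        return tuple(target_shape)
    # Product of the explicitly requested dimensions.
    known = prod(d for d in target_shape if d != -1)
    if any(d is None for d in input_shape):
        # The input size is not fully known, so -1 stays unknown.
        missing = None
    else:
        # The input size is fully known, so -1 is pinned to a concrete value.
        missing = prod(input_shape) // known
    return tuple(missing if d == -1 else d for d in target_shape)


print(infer_reshape((100, 784), (-1, 28, 28, 1)))   # (100, 28, 28, 1)
print(infer_reshape((None, 784), (-1, 28, 28, 1)))  # (None, 28, 28, 1)
```

Under this model, the batch dimension is only reported as unknown when the tensor being reshaped has an unknown batch dimension itself.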

I tried the Estimator.train and Estimator.evaluate methods on the same model with different batch sizes (e.g. 50), and the Estimator.predict method passing a single sample at a time. In these cases, everything seemed to work fine.

However, I do get problems if I try to use the model without going through the Estimator interface. For example, if I freeze my model, load it into a GraphDef, and run it in a session, like this:

    import tensorflow as tf

    # Load the frozen graph definition from disk.
    with tf.gfile.GFile("/path/to/my/frozen/model.pb", "rb") as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())

    # Import it into a fresh graph under the "prefix" name scope.
    with tf.Graph().as_default() as graph:
        tf.import_graph_def(graph_def, name="prefix")
        x = graph.get_tensor_by_name('prefix/input_layer:0')
        y = graph.get_tensor_by_name('prefix/softmax_tensor:0')

    # image_28x28 is a single image with shape (1, 28, 28, 1).
    with tf.Session(graph=graph) as sess:
        y_out = sess.run(y, feed_dict={x: image_28x28})

I will get the following error:

    ValueError: Cannot feed value of shape (1, 28, 28, 1) for Tensor 'prefix/input_layer:0', which has shape '(100, 28, 28, 1)'
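The message is consistent with a per-dimension compatibility check between the tensor's static shape and the shape of the fed value. Below is a minimal sketch of such a check. `is_feed_compatible` is a hypothetical helper, not TensorFlow's own code, and `None` again stands for `?`.

```python
def is_feed_compatible(static_shape, value_shape):
    """Return True if value_shape can be fed into a tensor with static_shape.

    A None entry in static_shape accepts any size in that position.
    """
    if len(static_shape) != len(value_shape):
        return False
    return all(s is None or s == v for s, v in zip(static_shape, value_shape))


# Static batch size of 100: a single image is rejected.
print(is_feed_compatible((100, 28, 28, 1), (1, 28, 28, 1)))   # False
# Unknown batch size: the same single image is accepted.
print(is_feed_compatible((None, 28, 28, 1), (1, 28, 28, 1)))  # True
```

In other words, the feed would only be accepted if the first dimension of the tensor were recorded as unknown rather than as 100.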

This worries me a lot, because in production I do need to freeze, optimize and convert my models to run them on TensorFlow Lite. So I won't be using the Estimator interface.

What am I missing?

    batch = features["x"]  # shape (100, 784)
    reshaped = tf.reshape(batch, (-1, 28, 28, 1))  # shape (100, 28, 28, 1)
    reshape_layer = tf.placeholder_with_default(reshaped, (None, 28, 28, 1), name="reshape_layer")  # shape (?, 28, 28, 1)

– Luker