I'm trying to fine-tune the Facebook BART model to classify text using my own dataset, following this article.

I'm using the Trainer object to train:

    from transformers import BartForSequenceClassification, Trainer, TrainingArguments

    training_args = TrainingArguments(
        output_dir=model_directory,      # output directory
        num_train_epochs=1,              # total number of training epochs - 3
        per_device_train_batch_size=4,   # batch size per device during training - 16
        per_device_eval_batch_size=16,   # batch size for evaluation - 64
        warmup_steps=50,                 # number of warmup steps for learning rate scheduler - 500
        weight_decay=0.01,               # strength of weight decay
        logging_dir=model_logs,          # directory for storing logs
        logging_steps=10,
    )

    model = BartForSequenceClassification.from_pretrained("facebook/bart-large-mnli")  # bart-large-mnli

    trainer = Trainer(
        model=model,                          # the instantiated 🤗 Transformers model to be trained
        args=training_args,                   # training arguments, defined above
        compute_metrics=new_compute_metrics,  # a function to compute the metrics
        train_dataset=train_dataset,          # training dataset
        eval_dataset=val_dataset,             # evaluation dataset
    )

This is the tokenizer I used:

    from transformers import BartTokenizerFast

    tokenizer = BartTokenizerFast.from_pretrained('facebook/bart-large-mnli')

But when I call trainer.train(), it prints the following:

    ***** Running training *****
      Num examples = 172
      Num Epochs = 1
      Instantaneous batch size per device = 4
      Total train batch size (w. parallel, distributed & accumulation) = 16
      Gradient Accumulation steps = 1
      Total optimization steps = 11
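
As a sanity check on these numbers, the arithmetic below reproduces the logged totals. It is a sketch that assumes all 4 GPUs of one worker on the first cluster are used by a single process; the log itself only shows the totals, so `num_gpus` is an assumption.

```python
import math

num_examples = 172          # "Num examples" from the log
per_device_batch_size = 4   # per_device_train_batch_size
num_gpus = 4                # assumption: all 4 GPUs of one worker are used
grad_accum_steps = 1        # "Gradient Accumulation steps" from the log

# Examples consumed per optimizer step across all devices.
total_batch_size = per_device_batch_size * num_gpus * grad_accum_steps

# The last, partially filled batch still counts as a step.
steps_per_epoch = math.ceil(num_examples / total_batch_size)

print(total_batch_size)  # 16
print(steps_per_epoch)   # 11
```

Both values match the log, which suggests the model is being replicated across the 4 GPUs (consistent with "replica 1 on device 1" in the error).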

Followed by this error:

    RuntimeError: Caught RuntimeError in replica 1 on device 1.
    Original Traceback (most recent call last):
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/parallel/parallel_apply.py", line 61, in _worker
        output = module(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
        return forward_call(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/transformers/models/bart/modeling_bart.py", line 1496, in forward
        outputs = self.model(
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
        return forward_call(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/transformers/models/bart/modeling_bart.py", line 1222, in forward
        encoder_outputs = self.encoder(
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
        return forward_call(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/transformers/models/bart/modeling_bart.py", line 846, in forward
        layer_outputs = encoder_layer(
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
        return forward_call(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/transformers/models/bart/modeling_bart.py", line 323, in forward
        hidden_states, attn_weights, _ = self.self_attn(
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
        return forward_call(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/transformers/models/bart/modeling_bart.py", line 191, in forward
        query_states = self.q_proj(hidden_states) * self.scaling
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
        return forward_call(*input, **kwargs)
      File "/databricks/python/lib/python3.9/site-packages/torch/nn/modules/linear.py", line 114, in forward
        return F.linear(input, self.weight, self.bias)
    RuntimeError: CUDA error: CUBLAS_STATUS_NOT_INITIALIZED when calling `cublasCreate(handle)`

I've searched this site, GitHub, and the Hugging Face forum, but I haven't found anything that fixes this. I tried adding more memory, lowering the batch size and warmup steps, restarting, specifying CPU or GPU, and more, but nothing worked.
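
For reference, one common way to force a CPU-only run or to get more precise CUDA error locations is through environment variables. This is a minimal sketch, assuming it runs in a fresh Python process before `torch` is imported; it is not necessarily how the runs described here were configured. A CUDA error like the one above can mask a simpler Python-side error, and a CPU-only run tends to surface that error directly.

```python
import os

# Hide all GPUs so PyTorch falls back to the CPU. This must be set before
# CUDA is initialized (simplest: before importing torch).
os.environ["CUDA_VISIBLE_DEVICES"] = ""

# Alternative when staying on the GPU: make kernel launches synchronous so
# the traceback points at the call that actually failed.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"
```

In a notebook where `torch` has already initialized CUDA, these settings may have no effect until the Python process is restarted.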

Databricks Clusters:

- Runtime: 12.2 LTS ML (includes Apache Spark 3.3.2, GPU, Scala 2.12). Worker type: Standard_NC24s_v3 with 4 GPUs, 2 to 10 workers; I think 16 GB of GPU memory and 448 GB of memory for the host.

- Runtime: 12.1 ML (includes Apache Spark 3.3.1, Scala 2.12). Worker type: Standard_L8s (memory optimized), 2 to 10 workers, 64 GB of memory with 8 cores.

Update: With the second cluster, depending on the flag combination, I sometimes get the error IndexError: Target {i} is out of bounds, where i changes from run to run.

If you need any other information, leave a comment and I'll add it ASAP.

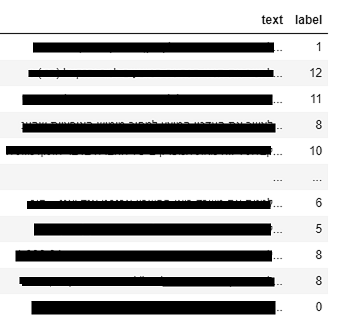

My dataset is holding private information but here is an image of how it's built:

Updates:

I also tried setting the following flags:

- fp16=True
- gradient_checkpointing=True
- gradient_accumulation_steps=4

I still got the same error, both when setting each flag separately and when setting them all together.
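
A sketch of how these three flags would be combined with the arguments shown earlier, assuming they are passed as keyword arguments to `TrainingArguments`. The dictionary names and the placeholder `output_dir` value are illustrative, not part of the original code.

```python
# Arguments from the original TrainingArguments call.
base_args = {
    "output_dir": "model_directory",  # placeholder for the real path
    "num_train_epochs": 1,
    "per_device_train_batch_size": 4,
    "per_device_eval_batch_size": 16,
    "warmup_steps": 50,
    "weight_decay": 0.01,
    "logging_steps": 10,
}

# The extra flags that were tried, separately and together.
extra_flags = {
    "fp16": True,                      # mixed-precision training
    "gradient_checkpointing": True,    # recompute activations to save memory
    "gradient_accumulation_steps": 4,  # accumulate 4 batches per optimizer step
}

combined = {**base_args, **extra_flags}

# With transformers installed, the arguments object would be built as:
# from transformers import TrainingArguments
# training_args = TrainingArguments(**combined)
```

All three flags affect memory use or the effective batch size; none of them changes the size of the model's classification head.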

Second cluster error (I get this error only sometimes, depending on the flag combination):

    IndexError Traceback (most recent call last)
    File <command-2692616476221798>:1
    ----> 1 trainer.train()

    File /databricks/python/lib/python3.9/site-packages/transformers/trainer.py:1527, in Trainer.train(self, resume_from_checkpoint, trial, ignore_keys_for_eval, **kwargs)
       1522 self.model_wrapped = self.model
       1524 inner_training_loop = find_executable_batch_size(
       1525 self._inner_training_loop, self._train_batch_size, args.auto_find_batch_size
       1526 )
    -> 1527 return inner_training_loop(
       1528 args=args,
       1529 resume_from_checkpoint=resume_from_checkpoint,
       1530 trial=trial,
       1531 ignore_keys_for_eval=ignore_keys_for_eval,
       1532 )

    File /databricks/python/lib/python3.9/site-packages/transformers/trainer.py:1775, in Trainer._inner_training_loop(self, batch_size, args, resume_from_checkpoint, trial, ignore_keys_for_eval)
       1773 tr_loss_step = self.training_step(model, inputs)
       1774 else:
    -> 1775 tr_loss_step = self.training_step(model, inputs)
       1777 if (
       1778 args.logging_nan_inf_filter
       1779 and not is_torch_tpu_available()
       1780 and (torch.isnan(tr_loss_step) or torch.isinf(tr_loss_step))
       1781 ):
       1782 # if loss is nan or inf simply add the average of previous logged losses
       1783 tr_loss += tr_loss / (1 + self.state.global_step - self._globalstep_last_logged)

    File /databricks/python/lib/python3.9/site-packages/transformers/trainer.py:2523, in Trainer.training_step(self, model, inputs)
       2520 return loss_mb.reduce_mean().detach().to(self.args.device)
       2522 with self.compute_loss_context_manager():
    -> 2523 loss = self.compute_loss(model, inputs)
       2525 if self.args.n_gpu > 1:
       2526 loss = loss.mean() # mean() to average on multi-gpu parallel training

    File /databricks/python/lib/python3.9/site-packages/transformers/trainer.py:2555, in Trainer.compute_loss(self, model, inputs, return_outputs)
       2553 else:
       2554 labels = None
    -> 2555 outputs = model(**inputs)
       2556 # Save past state if it exists
       2557 # TODO: this needs to be fixed and made cleaner later.
       2558 if self.args.past_index >= 0:

    File /databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py:1190, in Module._call_impl(self, *input, **kwargs)
       1186 # If we don't have any hooks, we want to skip the rest of the logic in
       1187 # this function, and just call forward.
       1188 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
       1189 or _global_forward_hooks or _global_forward_pre_hooks):
    -> 1190 return forward_call(*input, **kwargs)
       1191 # Do not call functions when jit is used
       1192 full_backward_hooks, non_full_backward_hooks = [], []

    File /databricks/python/lib/python3.9/site-packages/transformers/models/bart/modeling_bart.py:1561, in BartForSequenceClassification.forward(self, input_ids, attention_mask, decoder_input_ids, decoder_attention_mask, head_mask, decoder_head_mask, cross_attn_head_mask, encoder_outputs, inputs_embeds, decoder_inputs_embeds, labels, use_cache, output_attentions, output_hidden_states, return_dict)
       1559 elif self.config.problem_type == "single_label_classification":
       1560 loss_fct = CrossEntropyLoss()
    -> 1561 loss = loss_fct(logits.view(-1, self.config.num_labels), labels.view(-1))
       1562 elif self.config.problem_type == "multi_label_classification":
       1563 loss_fct = BCEWithLogitsLoss()

    File /databricks/python/lib/python3.9/site-packages/torch/nn/modules/module.py:1190, in Module._call_impl(self, *input, **kwargs)
       1186 # If we don't have any hooks, we want to skip the rest of the logic in
       1187 # this function, and just call forward.
       1188 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
       1189 or _global_forward_hooks or _global_forward_pre_hooks):
    -> 1190 return forward_call(*input, **kwargs)
       1191 # Do not call functions when jit is used
       1192 full_backward_hooks, non_full_backward_hooks = [], []

    File /databricks/python/lib/python3.9/site-packages/torch/nn/modules/loss.py:1174, in CrossEntropyLoss.forward(self, input, target)
       1173 def forward(self, input: Tensor, target: Tensor) -> Tensor:
    -> 1174 return F.cross_entropy(input, target, weight=self.weight,
       1175 ignore_index=self.ignore_index, reduction=self.reduction,
       1176 label_smoothing=self.label_smoothing)

    File /databricks/python/lib/python3.9/site-packages/torch/nn/functional.py:3026, in cross_entropy(input, target, weight, size_average, ignore_index, reduce, reduction, label_smoothing)
       3024 if size_average is not None or reduce is not None:
       3025 reduction = _Reduction.legacy_get_string(size_average, reduce)
    -> 3026 return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)

    IndexError: Target 11 is out of bounds.

The number 11 changes from time to time.

11 as a target. Load your model with: model = BartForSequenceClassification.from_pretrained("facebook/bart-large-mnli", ignore_mismatched_sizes=True, num_labels=NUM_UNIQUE_LABELS) and try it again. – Rehearing
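
The suggestion in this comment can be sketched as follows. The helper names `build_label_mapping` and `load_classifier` and the sample labels are hypothetical; only the `from_pretrained` arguments come from the comment. The `facebook/bart-large-mnli` checkpoint ships with a 3-class head, so a target such as 11 falls outside the range the cross-entropy loss accepts unless the head is resized.

```python
def build_label_mapping(raw_labels):
    """Map arbitrary label values to contiguous ids 0..N-1."""
    unique = sorted(set(raw_labels))
    return {label: idx for idx, label in enumerate(unique)}


def load_classifier(num_labels):
    """Load BART with a classification head of the given size (downloads weights)."""
    # Imported lazily so the mapping helper works without transformers installed.
    from transformers import BartForSequenceClassification

    return BartForSequenceClassification.from_pretrained(
        "facebook/bart-large-mnli",
        num_labels=num_labels,
        # Re-initialize the mismatched 3-class head instead of raising an error.
        ignore_mismatched_sizes=True,
    )


# Hypothetical raw labels standing in for the private dataset.
raw_labels = [11, 2, 7, 11, 0, 7]
label2id = build_label_mapping(raw_labels)
encoded = [label2id[label] for label in raw_labels]
NUM_UNIQUE_LABELS = len(label2id)

# Every target must lie in [0, NUM_UNIQUE_LABELS) for the loss to work.
assert all(0 <= y < NUM_UNIQUE_LABELS for y in encoded)

# model = load_classifier(NUM_UNIQUE_LABELS)  # uncomment to download and load
```

Remapping to contiguous ids matters because setting `num_labels` to the number of distinct labels is only enough when the label values themselves run from 0 to N-1.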