I am currently working on a project that requires network communication of different data types between the entities of a distributed system, and I am using ZMQ for it.

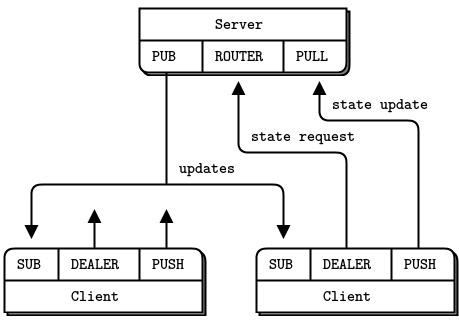

The main goal of the project is to have a central node that serves clients, which can connect at any time. For each connected client, the central node should manage the message exchange between the clients.

For the moment, all communication happens over TCP.

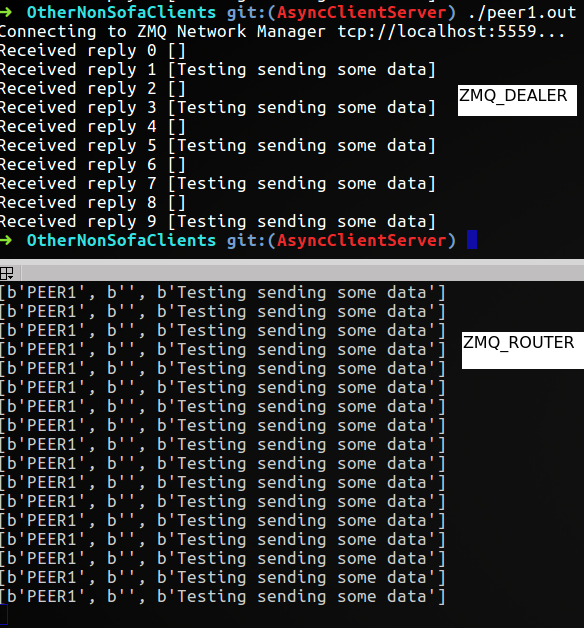

The clients need to send and receive messages at any time, so they use ZMQ_DEALER sockets, and the central node uses a ZMQ_ROUTER socket.
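A minimal pure-Python model of the frames involved may help here (no sockets are created; the helper names `dealer_frames` and `router_view` are hypothetical). It assumes, as the C++ clients in this question do, that each DEALER sends an empty delimiter frame before the payload; the ROUTER then prepends the sender's identity when the application calls `recv_multipart()`.

```python
from typing import List

Frames = List[bytes]


def dealer_frames(payload: bytes) -> Frames:
    """Frames a DEALER client in this setup sends: an empty delimiter, then the data."""
    return [b"", payload]


def router_view(sender_identity: bytes, frames: Frames) -> Frames:
    """Frames the ROUTER application receives: the sender's identity is prepended."""
    return [sender_identity, *frames]
```

For example, `router_view(b"PEER1", dealer_frames(b"Testing sending some data"))` models the list that the Python server prints for each message from the first client.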

Initially, the goal is that a message sent by one client arrives at all the other clients, so that every client sees the same data.

I am using the Asynchronous Client/Server pattern because I want several clients to talk to each other collaboratively, possibly through a server acting as a broker or middleware.

I have a ZMQ_DEALER client socket that connects to a ZMQ_ROUTER server socket:

```cpp
#include <iostream>
#include <string>

#include <zmq.hpp>

#include "zhelpers.hpp"

using namespace std;

int main(int argc, char *argv[])
{
    zmq::context_t context(1);
    zmq::socket_t client(context, ZMQ_DEALER);

    const string endpoint = "tcp://localhost:5559";

    client.setsockopt(ZMQ_IDENTITY, "PEER1", 5);

    cout << "Connecting to ZMQ Network Manager " << endpoint << "..." << endl;
    client.connect(endpoint);

    for (int request = 0; request < 10; request++)
    {
        // Empty delimiter frame, then the payload
        s_sendmore(client, "");
        s_send(client, "Testing sending some data");

        // The reply comes back with the same envelope: skip the empty
        // delimiter frame first, then read the payload
        s_recv(client);
        string reply = s_recv(client);

        cout << "Received reply " << request
             << " [" << reply << "]" << endl;
    }

    return 0;
}
```

On the server side, a ZMQ_ROUTER socket bound to a well-known port receives and manages the messages. The server is written in Python:

```python
import zmq

context = zmq.Context()

frontend = context.socket(zmq.ROUTER)
frontend.bind("tcp://*:5559")

# Initialize a poll set
poller = zmq.Poller()
poller.register(frontend, zmq.POLLIN)

print("Creating Server Network Manager Router")

while True:
    socks = dict(poller.poll())

    if socks.get(frontend) == zmq.POLLIN:
        # Frames: [sender identity, b"", payload]
        message = frontend.recv_multipart()
        print(message)
        # Sending the frames back unchanged routes them to the sender (frame 0)
        frontend.send_multipart(message)
```

My other peer/client has the following code:

```cpp
#include <iostream>
#include <string>

#include <zmq.hpp>

#include "zhelpers.hpp"

using namespace std;

int main(int argc, char *argv[])
{
    zmq::context_t context(1);
    zmq::socket_t peer2(context, ZMQ_DEALER);

    const string endpoint = "tcp://localhost:5559";

    peer2.setsockopt(ZMQ_IDENTITY, "PEER2", 5);

    cout << "Connecting to ZMQ Network Manager " << endpoint << "..." << endl;
    peer2.connect(endpoint);

    //s_sendmore(peer2, "");
    //s_send(peer2, "Probando");
    //std::string string = s_recv(peer2);
    //std::cout << "Received reply " << " [" << string << "]" << std::endl;

    for (int request = 0; request < 10; request++)
    {
        // Empty delimiter frame, then the payload ("Probando" means "Testing")
        s_sendmore(peer2, "");
        s_send(peer2, "Probando");

        // Skip the empty delimiter frame of the reply, then read the payload
        s_recv(peer2);
        string reply = s_recv(peer2);

        cout << "Received reply " << request
             << " [" << reply << "]" << endl;
    }

    return 0;
}
```

UPDATE

However, whenever I run the clients, the messages sent by one client do not arrive at the other peer client.

The messages arrive at the ZMQ_ROUTER and are returned to the ZMQ_DEALER that sent them.

This is because the ROUTER prepends the sender's identity frame when it receives a message. When the message is sent back through the ROUTER, it removes that identity frame and uses its value to route the message to the corresponding DEALER, as described in the ZMQ_ROUTER section near the end of the page here.

And this is logical: my DEALER's identity reaches the ROUTER, and since the server sends the frames back unchanged, the ROUTER uses that identity frame to return the message to my DEALER.
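The echo can be reproduced in a single process with pyzmq over the `inproc` transport. This is a sketch: `echo_demo` and the endpoint name are made up for illustration, and pyzmq must be installed. Two DEALERs connect, PEER1 sends one message, and the ROUTER sends the received frames back unchanged:

```python
import zmq


def echo_demo(endpoint: str = "inproc://echo-demo"):
    """Two DEALERs, one ROUTER that sends back exactly what it received."""
    ctx = zmq.Context()
    try:
        router = ctx.socket(zmq.ROUTER)
        router.setsockopt(zmq.RCVTIMEO, 1000)  # fail instead of hanging forever
        router.bind(endpoint)

        dealers = {}
        for name in (b"PEER1", b"PEER2"):
            sock = ctx.socket(zmq.DEALER)
            sock.setsockopt(zmq.IDENTITY, name)  # same role as ZMQ_IDENTITY in the C++ clients
            sock.setsockopt(zmq.RCVTIMEO, 1000)
            sock.connect(endpoint)
            dealers[name] = sock

        dealers[b"PEER1"].send_multipart([b"", b"hello"])

        received = router.recv_multipart()  # [b"PEER1", b"", b"hello"]
        router.send_multipart(received)     # frame 0 is PEER1, so it goes back to PEER1

        echoed = dealers[b"PEER1"].recv_multipart()
        peer2_events = dealers[b"PEER2"].poll(100)  # 0 means nothing arrived for PEER2
        return received, echoed, peer2_events
    finally:
        ctx.destroy(linger=0)  # closes every socket created from this context
```

Because frame 0 of the returned message is still `PEER1`, only PEER1 receives it and PEER2's `poll()` returns 0.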

As a first step in my implementation, I need every message sent by any DEALER to be seen by every other DEALER, no matter how many DEALERs (one or many) are connected to the ZMQ_ROUTER. In that case, is it necessary to know the identity frame of the other DEALER or DEALERs?

If I have DEALER A, DEALER B, DEALER C, and a ROUTER,

then:

DEALER A sends a message...

and I want that message from DEALER A to arrive at DEALER B, DEALER C, and any other DEALERs that may join my conversation session...
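In frame terms, this scenario means the ROUTER has to emit one copy per recipient, each with a different first frame. A minimal sketch of that transformation, assuming the ROUTER application already has the set of peer identities (`fan_out` is a hypothetical helper; how the identities are learned is a separate question):

```python
from typing import Iterable, List

Frames = List[bytes]


def fan_out(received: Frames, peers: Iterable[bytes]) -> List[Frames]:
    """Build one outgoing message per peer other than the sender.

    `received` is what the ROUTER's recv_multipart() returned:
    [sender_identity, b"", payload, ...]. Each result keeps the same body but
    starts with the destination identity, which the ROUTER strips and uses
    to choose the connection.
    """
    sender, *body = received
    return [[peer, *body] for peer in sorted(peers) if peer != sender]
```

With the echo server above, the single `send_multipart(message)` call would be replaced by sending every list that `fan_out(message, known_peers)` returns, where `known_peers` is a hypothetical set maintained by the server.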

Following this idea, does DEALER A need to know the identity frames of DEALER B and DEALER C beforehand so that the message reaches them?

How can I know the identity frame of each DEALER in my implementation? Is this done on the ROUTER side? This is not clear to me.
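One possible approach, sketched under assumptions: the ROUTER application learns each identity from frame 0 of the messages it receives, so DEALER A never has to know B's or C's identity. Since the application only sees an identity once that peer has sent something, the sketch assumes a made-up convention in which each client sends a `JOIN` payload right after connecting. `BroadcastRouter`, `JOIN`, and `serve` are hypothetical names, and pyzmq is required.

```python
import zmq

JOIN = b"JOIN"  # hypothetical "register me" payload; not a ZeroMQ feature


class BroadcastRouter:
    """Relay each DEALER's message to every other DEALER the ROUTER has heard from."""

    def __init__(self, router: zmq.Socket):
        self.router = router
        self.peers = set()  # identities learned from frame 0 of incoming messages

    def handle_one(self) -> int:
        """Receive one message, remember its sender, forward it; return copies sent."""
        sender, *body = self.router.recv_multipart()
        self.peers.add(sender)
        if body[-1:] == [JOIN]:
            return 0  # registration only, nothing to forward
        targets = sorted(self.peers - {sender})
        for peer in targets:
            # The ROUTER strips this first frame and uses it to pick the connection.
            self.router.send_multipart([peer, *body])
        return len(targets)


def serve(endpoint: str = "tcp://*:5559") -> None:
    """Run the relay forever on the port used in this question."""
    ctx = zmq.Context()
    router = ctx.socket(zmq.ROUTER)
    router.bind(endpoint)
    hub = BroadcastRouter(router)
    while True:
        hub.handle_one()
```

With the C++ clients above, each peer would send the `JOIN` payload once after `connect()`. The identity set never shrinks in this sketch; by default a ROUTER silently drops messages addressed to an identity that is no longer connected (setting `ZMQ_ROUTER_MANDATORY` turns that into an error instead).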

`ROUTER` and `PUBLISHER` on the server side... really? Could this be implemented by polling (`zmq.POLLIN`) both sockets (`ROUTER` and `PUBLISHER`) together? – Alienist
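On the comment's question: a PUB socket never receives messages, so in a ROUTER + PUB design only the ROUTER needs to be registered for `zmq.POLLIN`. A minimal pyzmq sketch under that assumption (`make_poller` and `relay_once` are hypothetical names): whatever arrives on the ROUTER is republished on the PUB socket with the sender's identity as the first frame, which SUB sockets can use as a topic. Each client would then need a SUB socket in addition to its DEALER.

```python
import zmq


def make_poller(router: zmq.Socket) -> zmq.Poller:
    """Poll set for the hub. A PUB socket cannot receive, so only the ROUTER is registered."""
    poller = zmq.Poller()
    poller.register(router, zmq.POLLIN)
    return poller


def relay_once(poller: zmq.Poller, router: zmq.Socket, pub: zmq.Socket,
               timeout_ms: int = 1000) -> bool:
    """Wait up to timeout_ms for one ROUTER message and republish it on the PUB socket.

    Incoming frames are assumed to be [sender_identity, b"", payload, ...], as sent
    by the DEALER clients in this question. The published message starts with the
    sender identity, so SUB sockets can filter on it as a topic prefix.
    """
    events = dict(poller.poll(timeout_ms))
    if events.get(router) != zmq.POLLIN:
        return False
    sender, _delimiter, *payload = router.recv_multipart()
    pub.send_multipart([sender, *payload])
    return True
```

A serving loop would call `relay_once` repeatedly. A client subscribed to `b""` also receives its own messages back, so it can drop those whose first frame equals its own identity; and a new subscriber only sees messages published after its subscription has reached the PUB socket.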