Herb Sutter definitely knows his stuff, but I generally do not like it if one argues about possible "reorderings", because such arguments usually don't provide the full details why a reordering is actually possible. The C++ standard says nothing about if or when instructions can be reordered. It only defines a "happens-before" relation, and any reorderings that may be applied are ultimately a result of applying the rules for the happens-before relation (and of course the ubiquitous "as-if"-rule).

Why is this so important? Because the happens-before relation is also the basis for the definition of a data race:

Two expression evaluations conflict if one of them modifies a memory location (4.4) and the other one reads or modifies the same memory location. [..]

The execution of a program contains a data race if it contains two potentially concurrent conflicting actions, at least one of which is not atomic, and neither happens before the other [..]

And as we all know, data races result in UB, so any argument about correctness of multi-threaded code using atomics has to be based on happens-before. That is, which happens-before relations are required, and how are they established.

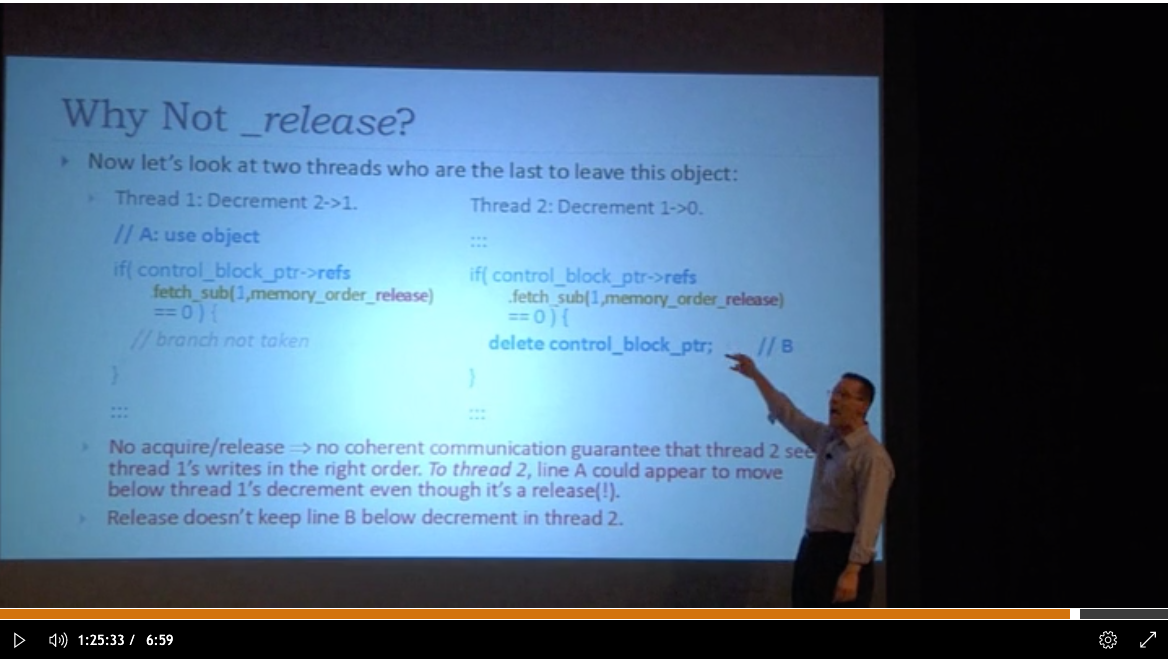

So let's take a look at the example:

Thread 1:

... // use object

if (control_block_ptr->refs.fetch_sub(1, std::memory_order_release) == 1) {

// branch not taken

}

Thread 2:

if (control_block_ptr->refs.fetch_sub(1, std::memory_order_release) == 1) {

delete control_block_ptr;

}

Your requirement here is that the delete must happen-after the use.

Suppose the object contains a std::string and thread 1 assigns a value to that string, i.e., we have a write operation. When thread 2 deletes the object, we also need to delete string member, which obviously involves at least a read operation. This is a textbook example of conflicting operations, so we have to ensure that the assignment in thread 1 happens-before the destruction, because otherwise we would have a data race! Using only memory_order_release, the fetch_sub operation cannot synchronize-with anything (a release-operation by itself is practically pointless), so no happens-before relation exists.

How can we fix it? By making sure that the operations on refs synchronize-with each other. If we use memory_order_acq_rel, then the fetch_sub in thread 2 synchronizes-with the fetch_sub in thread 1, thereby establishing a happens-before relation. "use object" is sequenced-before the fetch_sub in thread 1, and the fetch_sub in thread 2 is sequenced-before the delete. Thus we have:

t1.use -sb-> t1.fetch_sub -sw-> t2.fetch_sub -sb-> t2.delete

where -sb-> denotes "sequenced-before" and -sw-> "synchronizes-with". And because happens-before is transitive, this implies that t1.use happens-before t2.delete.

Another solution would be to add an additional refs.load(memory_order_acquire) right before the delete, then we could stick with memory_order_release for the fetch_sub, because then the t2.load would synchronize-with the t1.fetch_sub.

Update

This update is due to the questions by @plasmacel in the comments.

The standard states the following (see [intro.races]):

If an operation A that modifies an atomic object M happens before an operation B that modifies M, then A shall be earlier than B in the modification order of M.

If a value computation A of an atomic object M happens before a value computation B of M, and A takes its value from a side effect X on M, then the value computed by B shall either be the value stored by X or the value stored by a side effect Y on M, where Y follows X in the modification order of M.

If a value computation A of an atomic object M happens before an operation B that modifies M, then A shall take its value from a side effect X on M, where X precedes B in the modification order of M.

If a side effect X on an atomic object M happens before a value computation B of M, then the evaluation B shall take its value from X or from a side effect Y that follows X in the modification order of M.

So this should make clear that the happens-before relation is not a property of the observable behavior, but rather defines the behavior (and therefore what is observable).

Without a happens-before relation, the only guarantee we have is that writes will become visible eventually, but not when or in which order. Without such guarantees, cross-thread communication is very limited.

However, above all there is the ubiquitous "as-if" rule, which allows the compiler to perform any and all optimizations as long as they do not affect the observable behavior. With respect to the happens-before relation this means the compiler is free to reorder operations (and therefore invalidate the happens-before relation), iff such a change cannot be observed by anyone.

Suppose we have the following code:

int x, y;

// Thread 1

x = 1;

y = 2;

Clearly, x = 1 is sequenced-before y = 2 and therefore x = 1 should happen before y = 2, so one might assume that these operations cannot be reordered. However, reordering these assignments does not affect observable behavior from the perspective of Thread 1, so according to the as-if rule the compiler is free to do so. If another thread would read both variables, it could theoretically be able to observe such a change. But since the variables are not atomic, this would be a data race and therefore undefined behavior. So the compiler can safely assume that no other thread will read these variables while we are performing these writes.

Now let's make the variables atomic to avoid the data race so another thread can actually read the values:

std::atomic<int> x, y;

// Thread 1

x.store(1, std::memory_order_relaxed);

y.store(2, std::memory_order_relaxed);

// Thread 2

int a = y.load(std::memory_order_relaxed);

int b = x.load(std::memory_order_relaxed);

Thread 1 first writes to x and then to y, while Thread 2 first loads y and then x, so one might assume that when Thread 2 reads y == 2, that it is guaranteed that it also reads x == 1. However, since all operations are relaxed, there are no guarantees in which order operations on different objects become visible. For example, there are systems (ARM, Power) where, due to the quirky memory subsystem architecture, the second write can become visible before the first. So even though x.store is sequenced before y.store, the write to y can become visible first. But then the compiler can just as well reorder the two stores, because we would not be able to distinguish such a reorderings from the case were writes become visible in different order. And following the same argument, we could also reorder the two load operations.

Now let's try with a release:

std::atomic<int> x, y;

// Thread 1

x.store(1, std::memory_order_relaxed);

y.store(2, std::memory_order_release);

// Thread 2

int a = y.load(std::memory_order_relaxed);

int b = x.load(std::memory_order_relaxed);

A release-store synchronizes-with an acquire-store, thereby establishing a happens-before relation. However, it is important to note that we need both sides! A release-operation by itself achieves nothing. In this example, we have a release-store, but no acquire-operation. The loads are both still relaxed, so the compiler could still reorder them, based on the same argument as before. So essentially we still have no guarantees about the values Thread 2 reads! Assuming we have no other code accessing x and y, this basically means the compiler would also be free to reorder the stores, even though one of them uses memory_order_release. If the compiler can prove that a release-operation cannot synchronize with any acquire-operation, then it can never participate in any happens-before relation and therefore cannot provide any visibility guarantees, so essentially it is not stronger than a relaxed operation. I don't think any compiler actually does this (not just because such a proof would be difficult for any non-trivial code), but it would be valid.

So why do release/acquire-operations usually prevent reordering? Because when they do participate in a happens-before relation, some reorderings would indeed change observable behavior.

std::atomic<int> x, y, z;

// Thread 1

x.store(1, std::memory_order_relaxed);

y.store(2, std::memory_order_release);

z.store(3, std::memory_order_relaxed);

// Thread 2

int c = z.load(std::memory_order_relaxed);

int a = y.load(std::memory_order_acquire);

int b = x.load(std::memory_order_relaxed);

Suppose y.load observes the value written by y.store, then the two operations synchronize with each other and thereby establish a happens-before relation. In other words, we now know that y.store happened before y.load, so the observable behavior must reflect that. x.store is sequenced-before y.store and y.load is sequenced before x.load. Due to the transitivity of happens-before, it now follows that x.store happens-before x.load. In other words, x.load must observe the value written by x.store (or some later value). That is the actual reason why x.store cannot be reordered past y.store, and also why x.load cannot be reordered before y.load. However, z.store and z.load can still be freely reordered.

Let's take a last look at the original code in question and see how the absence of a happens-before relation can result in incorrect code:

Thread 1:

... // use object

if (control_block_ptr->refs.fetch_sub(1, std::memory_order_release) == 1) {

// branch not taken

}

Thread 2:

if (control_block_ptr->refs.fetch_sub(1, std::memory_order_release) == 1) {

delete control_block_ptr;

}

Suppose the object in question looks something like this:

struct Object {

~Object() {

if (foo) delete foo;

...

}

Foo* foo;

...

};

Then the code for Thread 2 could look like this:

if (control_block_ptr->refs.fetch_sub(1, std::memory_order_release) == 1) {

// inlined control_block_ptr.~Object()

auto f = control_block_ptr->foo;

if (f) delete f;

...

}

Even though a release-operation usually prevents operations to be reordered past it, it is perfectly legal the reorder operations before it. So the code could be optimized like this:

auto f = control_block_ptr->foo;

if (control_block_ptr->refs.fetch_sub(1, std::memory_order_release) == 1) {

// inlined control_block_ptr.~Object()

if (f) delete f;

...

}

So now we read foo before the fetch_sub, but that means we might read foo at a time when Thread 1 still uses the object (and potentially writes to foo).

Instruction reorderings by the compiler are rather simple ways to demonstrate how/why the memory model is relevant, but it is important to note that there are also other aspect that can affect the order in which code is executed or writes become visible, like out-of-order execution or peculiarities of the memory subsystem. So focusing on instruction reordering alone is not sufficient. Instead, it is important to understand that above all is the concept of happens-before relations.

By focusing on instruction reorderings we miss the bigger picture. If a certain instruction reordering would be problematic, then there must be an implicit requirement of a happens-before relation - and that is what we should focus on!