If I want to switch from a Kaggle notebook to a Colab notebook, I can download the notebook from Kaggle and open it in Google Colab. The problem is that you would normally also need to download the Kaggle dataset and upload it to Colab, which is quite an effort.

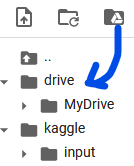

If you have a small dataset, or if you need just one smaller file of a dataset, you can put the files into the same folder structure that the Kaggle notebook expects. To do that, create the structure in Google Colab (for example kaggle/input/...) and upload the files there. That part is not the issue.
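A minimal Python sketch of that staging step, assuming the notebook reads its data from /kaggle/input/<dataset-slug>/ as Kaggle notebooks do. The helper name stage_like_kaggle and the slug in the usage comment are illustrative, not part of any Kaggle or Colab API:

```python
import shutil
from pathlib import Path


def stage_like_kaggle(src_file, dataset_slug, root="/kaggle/input"):
    """Copy src_file into <root>/<dataset_slug>/ so that paths written for a
    Kaggle notebook keep working unchanged in Colab.

    root and dataset_slug are assumptions: point root at /kaggle/input to
    mimic Kaggle, or at a scratch directory for testing.
    """
    target_dir = Path(root) / dataset_slug
    target_dir.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    target = target_dir / Path(src_file).name
    shutil.copy2(src_file, target)  # copy the file, keeping its name and timestamps
    return target


# Usage in Colab after uploading metadata.csv (hypothetical slug):
# stage_like_kaggle("metadata.csv", "CORD-19-research-challenge")
```

Because the copy sits at the same path it would have on Kaggle, the read calls in the downloaded notebook can stay as they are.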

If you have a large dataset, though, you can either:

- mount your Google Drive and use the dataset / file from there

- or download the dataset from Kaggle directly into Colab, following the guide "Easiest way to download kaggle data in Google Colab" (please use the link for more details):

Please follow the steps below to download and use Kaggle data within Google Colab:

Go to your Kaggle account, scroll to the API section and click Expire API Token to remove previous tokens.

Click Create New API Token. This downloads a kaggle.json file to your machine.

Go to your Google Colab project file and run the following commands:

! pip install -q kaggle

Choose the kaggle.json file that you downloaded:

from google.colab import files
files.upload()

Make a directory named .kaggle and copy the kaggle.json file there:

! mkdir -p ~/.kaggle
! cp kaggle.json ~/.kaggle/

Change the permissions of the file:

! chmod 600 ~/.kaggle/kaggle.json

That's all! You can check whether everything is okay by running this command:

! kaggle datasets list

Download the data:

! kaggle competitions download -c 'name-of-competition'

Or if you want to download datasets (taken from a comment):

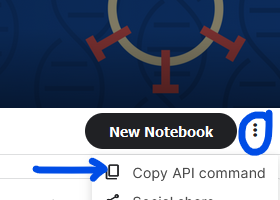

! kaggle datasets download -d USERNAME/DATASET_NAME

If the dataset name is unclear, you can get it from "Copy API command" in the three-dots drop-down menu next to the "New Notebook" button on the Kaggle dataset page.
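The mkdir, cp and chmod steps above can also be done from Python, which avoids mixing shell and Python cells. This is a sketch assuming files.upload() has saved kaggle.json into the current working directory; install_kaggle_token is a hypothetical helper name:

```python
import os
import shutil
import stat
from pathlib import Path


def install_kaggle_token(token_path, config_dir=None):
    """Mirror `mkdir -p ~/.kaggle`, `cp kaggle.json ~/.kaggle/` and
    `chmod 600 ~/.kaggle/kaggle.json`.

    config_dir defaults to ~/.kaggle, the location used in the guide.
    """
    config_dir = Path(config_dir) if config_dir else Path.home() / ".kaggle"
    config_dir.mkdir(parents=True, exist_ok=True)  # like mkdir -p
    target = config_dir / "kaggle.json"
    shutil.copyfile(token_path, target)  # like cp
    # read/write for the owner only, i.e. mode 0o600, same as chmod 600
    os.chmod(target, stat.S_IRUSR | stat.S_IWUSR)
    return target


# Usage in Colab, right after files.upload():
# install_kaggle_token("kaggle.json")
```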

And here comes the issue: this seems to work only for smaller datasets. I have tried it on

kaggle datasets download -d allen-institute-for-ai/CORD-19-research-challenge

and the API cannot find it (404 - Not Found), probably because downloading 40 GB of data is restricted.

In that case, you can only download the file you need and use it from the mounted Google Drive, or you have to use Kaggle instead of Colab.

Is there a way to download only the 800 MB metadata.csv file of the 40 GB CORD-19 Kaggle dataset into Colab? Here is the link to the file's information page:

https://www.kaggle.com/allen-institute-for-ai/CORD-19-research-challenge?select=metadata.csv

I have now uploaded the file to Google Drive, and I wonder whether that is already the best approach. It is quite a lot of effort compared with Kaggle, where the whole dataset is already available without any download and loads quickly.

PS: After you have downloaded the zip file from Kaggle to Colab, it needs to be extracted. Quoting the guide again:

Use the unzip command to unzip the data:

For example, create a directory named train:

! mkdir train

and unzip the training data there:

! unzip train.zip -d train
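In Python, the standard-library zipfile module does the same job as mkdir plus unzip, and it can also extract a single member, which helps when only one file of a large archive is needed. A sketch; extract_zip is a hypothetical helper, and the archive and folder names follow the guide's train example:

```python
import zipfile
from pathlib import Path


def extract_zip(zip_path, dest_dir, members=None):
    """Extract zip_path into dest_dir (like `mkdir train` + `unzip train.zip -d train`).

    members: optional list of archive names to extract; None extracts everything.
    Returns the paths of the extracted files.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        names = zf.namelist() if members is None else list(members)
        zf.extractall(dest, members=names)
    return [dest / name for name in names]


# Usage, following the guide's example:
# extract_zip("train.zip", "train")
```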

Update: I recommend mounting Google Drive

After having tried both ways (mounting Google Drive and downloading directly from Kaggle), I recommend mounting Google Drive if your architecture allows it. The advantage is that the file needs to be uploaded only once, since Google Colab and Google Drive are directly connected. Mounting Google Drive costs you the extra steps of downloading the file from Kaggle, unzipping it and uploading it to Google Drive, plus getting and activating a token in each Python session to mount the drive, but activating the token is quick. With the Kaggle approach, you instead have to download the file from Kaggle into Google Colab in every session, which takes more time and traffic.
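A sketch of the Drive-based setup, assuming the file was uploaded once to a Drive folder. The CORD-19 folder under MyDrive and the Kaggle input path in the usage comment are assumptions to adjust; the hypothetical helper first_existing lets the same notebook pick up the file both on Kaggle and on Colab:

```python
from pathlib import Path


def mount_drive(mountpoint="/content/drive"):
    """Mount Google Drive in Colab; return False when not running in Colab."""
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        return False
    drive.mount(mountpoint)  # asks for authorization in each new session
    return True


def first_existing(candidates):
    """Return the first candidate path that exists, or None if none does."""
    for candidate in candidates:
        path = Path(candidate)
        if path.exists():
            return path
    return None


# Usage (paths are assumptions, adjust to your own layout):
# mount_drive()
# csv_path = first_existing([
#     "/kaggle/input/CORD-19-research-challenge/metadata.csv",  # on Kaggle
#     "/content/drive/MyDrive/CORD-19/metadata.csv",            # on Colab via Drive
# ])
```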

! kaggle datasets download allen-institute-for-ai/CORD-19-research-challenge -f metadata.csv

It works. :) Please answer. – Genip
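The owner/dataset slug and the -f argument in that command can be read straight off the dataset URL from the question. A sketch, assuming the URL has the form https://www.kaggle.com/<owner>/<dataset>?select=<file> (or the /datasets/<owner>/<dataset> form); kaggle_file_download_args is a hypothetical helper that only builds the argument list for the kaggle CLI's datasets download command with its -f (file) and -p (path) options:

```python
from urllib.parse import parse_qs, urlparse


def kaggle_file_download_args(dataset_url, dest="."):
    """Build the kaggle CLI argument list for downloading one dataset file."""
    parsed = urlparse(dataset_url)
    parts = [p for p in parsed.path.split("/") if p]
    if parts and parts[0] == "datasets":  # /datasets/<owner>/<dataset> form
        parts = parts[1:]
    if len(parts) < 2:
        raise ValueError(f"no <owner>/<dataset> in {dataset_url!r}")
    args = ["kaggle", "datasets", "download", f"{parts[0]}/{parts[1]}"]
    selected = parse_qs(parsed.query).get("select")
    if selected:
        args += ["-f", selected[0]]  # only this file instead of the whole dataset
    args += ["-p", dest]  # directory to save the download into
    return args


# Usage in Colab (needs the kaggle.json token set up first):
# import subprocess
# url = "https://www.kaggle.com/allen-institute-for-ai/CORD-19-research-challenge?select=metadata.csv"
# subprocess.run(kaggle_file_download_args(url, dest="/content"), check=True)
```

Depending on its size, the single file may arrive compressed (for example as metadata.csv.zip), in which case it needs to be extracted as described in the PS above.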