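You will need to use FFmpeg directly. If you enable B-frames in the vp9_vaapi encoder, you may also need to add the vp9_raw_reorder and vp9_superframe bitstream filters to the same command line: the encoder outputs frames in encode order, vp9_raw_reorder restores display order, and vp9_superframe merges the hidden frames back into superframes so that the stream is usable by all decoders.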

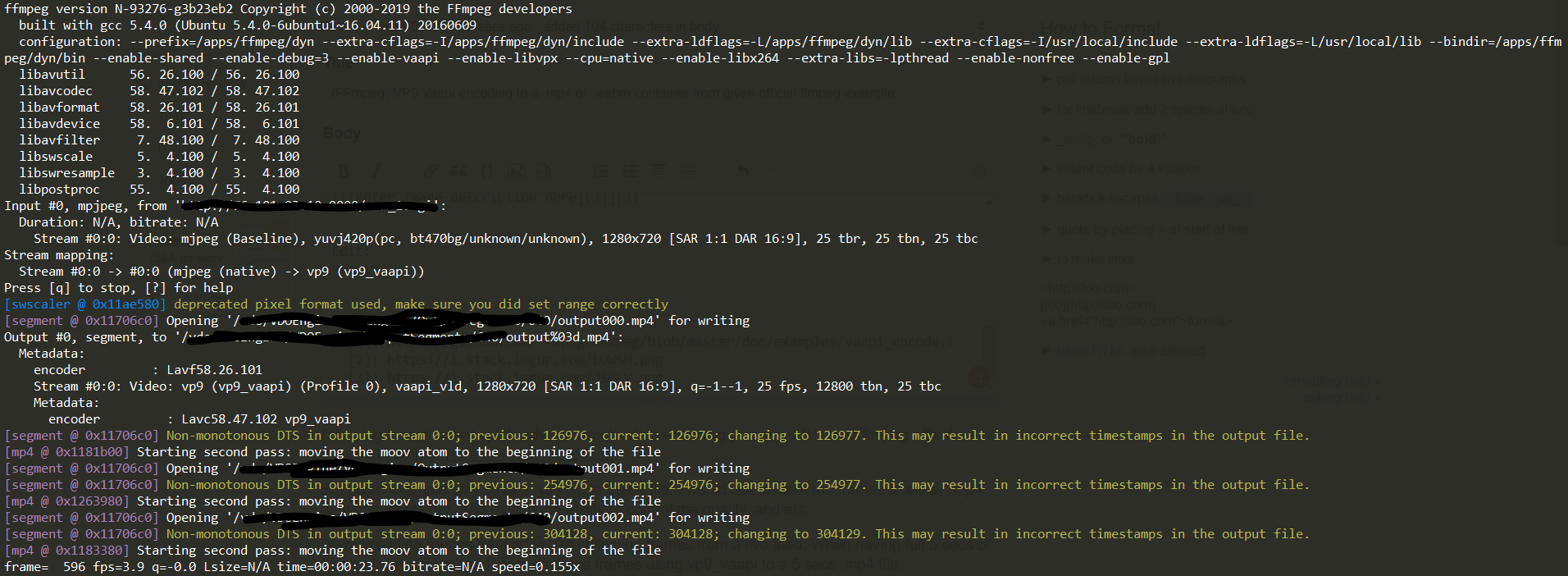

Example:

```shell
ffmpeg -threads 4 -vaapi_device /dev/dri/renderD128 \
-hwaccel vaapi -hwaccel_output_format vaapi \
-i http://server:port \
-c:v vp9_vaapi -global_quality 50 -bf 1 \
-bsf:v vp9_raw_reorder,vp9_superframe \
-f segment -segment_time 5 -segment_format_options movflags=+faststart output%03d.mp4
```

Adjust your input and output paths/urls as needed.

What this command does:

It creates 5-second MP4 segments via the segment muxer.

Note how movflags=+faststart is passed as a format option to the underlying MP4 muxer via the -segment_format_options flag above.

The segments may not be exactly 5 seconds long, because each segment must begin with a keyframe: the muxer can only cut on a keyframe once the 5-second mark has been reached.
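To see why, here is a simplified model of the cut logic (a sketch, not the segment muxer's actual code). It assumes a constant frame rate and a fixed keyframe interval (for example the -g 50 at 25 fps used in the snippets below), and treats each cut as landing on the first keyframe whose timestamp reaches the next multiple of segment_time. The function name segment_cuts is hypothetical.

```shell
# Simplified model of segment muxer cuts, assuming CFR input and a fixed GOP.
# Arguments: fps, GOP length in frames, segment_time (s), total duration (s).
# Prints one "start duration" line (in seconds) per segment.
segment_cuts() {
  awk -v fps="$1" -v gop="$2" -v seg="$3" -v total="$4" 'BEGIN {
    start = 0; count = 0
    # Walk the keyframes at frame gop, 2*gop, ... until the end of the input.
    for (f = gop; f / fps < total; f += gop) {
      t = f / fps                     # keyframe timestamp in seconds
      if (t >= seg * (count + 1)) {   # next segment boundary reached: cut here
        printf "%g %g\n", start, t - start
        start = t; count++
      }
    }
    printf "%g %g\n", start, total - start   # final segment
  }'
}
```

With `segment_cuts 25 50 5 20`, keyframes fall every 2 seconds, so in this model the cuts land at 6, 10 and 16 seconds and the segments alternate between 6 and 4 seconds. A GOP that divides the segment length evenly (such as -g 125 at 25 fps) yields uniform 5-second segments.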

However, I would not recommend enabling B-frames in that encoder: these bitstream filters have other undesired effects, such as interfering with the encoder's rate control and triggering bugs like this one, which is not acceptable in a production environment. This is why the snippets below leave B-frames disabled and instead set an explicit rate control mode directly in the encoder options (-rc_mode 2, which selects CBR in FFmpeg's VAAPI encoders).

If you need to take advantage of 1:N encoding with VAAPI, use these snippets:

- If you need to deinterlace, call up the deinterlace_vaapi filter:

```shell
ffmpeg -loglevel debug -threads 4 \
-init_hw_device vaapi=va:/dev/dri/renderD128 -hwaccel vaapi \
-hwaccel_device va -filter_hw_device va \
-hwaccel_output_format vaapi \
-i 'http://server:port' \
-filter_complex "[0:v]deinterlace_vaapi,split=3[n0][n1][n2]; \
[n0]scale_vaapi=1152:648[v0]; \
[n1]scale_vaapi=848:480[v1]; \
[n2]scale_vaapi=640:360[v2]" \
-b:v:0 2250k -maxrate:v:0 2250k -bufsize:v:0 360k -c:v:0 vp9_vaapi -g:v:0 50 -r:v:0 25 -rc_mode:v:0 2 \
-b:v:1 1750k -maxrate:v:1 1750k -bufsize:v:1 280k -c:v:1 vp9_vaapi -g:v:1 50 -r:v:1 25 -rc_mode:v:1 2 \
-b:v:2 1000k -maxrate:v:2 1000k -bufsize:v:2 160k -c:v:2 vp9_vaapi -g:v:2 50 -r:v:2 25 -rc_mode:v:2 2 \
-c:a aac -b:a 128k -ar 48000 -ac 2 \
-flags -global_header -f tee -use_fifo 1 \
-map "[v0]" -map "[v1]" -map "[v2]" -map 0:a \
"[select=\'v:0,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path0/output%03d.mp4| \
[select=\'v:1,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path1/output%03d.mp4| \
[select=\'v:2,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path2/output%03d.mp4"
```

- Without deinterlacing:

```shell
ffmpeg -loglevel debug -threads 4 \
-init_hw_device vaapi=va:/dev/dri/renderD128 -hwaccel vaapi \
-hwaccel_device va -filter_hw_device va -hwaccel_output_format vaapi \
-i 'http://server:port' \
-filter_complex "[0:v]split=3[n0][n1][n2]; \
[n0]scale_vaapi=1152:648[v0]; \
[n1]scale_vaapi=848:480[v1]; \
[n2]scale_vaapi=640:360[v2]" \
-b:v:0 2250k -maxrate:v:0 2250k -bufsize:v:0 2250k -c:v:0 vp9_vaapi -g:v:0 50 -r:v:0 25 -rc_mode:v:0 2 \
-b:v:1 1750k -maxrate:v:1 1750k -bufsize:v:1 1750k -c:v:1 vp9_vaapi -g:v:1 50 -r:v:1 25 -rc_mode:v:1 2 \
-b:v:2 1000k -maxrate:v:2 1000k -bufsize:v:2 1000k -c:v:2 vp9_vaapi -g:v:2 50 -r:v:2 25 -rc_mode:v:2 2 \
-c:a aac -b:a 128k -ar 48000 -ac 2 \
-flags -global_header -f tee -use_fifo 1 \
-map "[v0]" -map "[v1]" -map "[v2]" -map 0:a \
"[select=\'v:0,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path0/output%03d.mp4| \
[select=\'v:1,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path1/output%03d.mp4| \
[select=\'v:2,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path2/output%03d.mp4"
```
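Since the tee slave specifications differ only in the stream index and the output path, they can be generated instead of copy-pasted. A minimal Bash sketch; build_tee_outputs is a hypothetical helper that reproduces the quoting used above, including the backslash-escaped single quotes around the select value.

```shell
# Build the tee muxer output spec for N renditions.
# Arguments: segment length in seconds, then one output directory per rendition.
build_tee_outputs() {
  local seg_time=$1; shift
  local spec="" i=0 dir
  for dir in "$@"; do
    # Separate slaves with "|".
    if [ -n "$spec" ]; then spec="$spec|"; fi
    # Slave i selects video stream i plus all audio and writes MP4 segments.
    spec="${spec}[select=\\'v:$i,a\\':f=segment:segment_time=$seg_time:segment_format_options=movflags=+faststart]$dir/output%03d.mp4"
    i=$((i + 1))
  done
  printf '%s\n' "$spec"
}
```

The result would be passed, quoted, as the last argument after `-f tee -use_fifo 1` and the `-map` options, e.g. `"$(build_tee_outputs 5 "$output_path0" "$output_path1" "$output_path2")"`.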

- Using Intel's QuickSync (on supported platforms):

On Intel Icelake and above, you can use the vp9_qsv encoder wrapper with the following known limitations (for now):

(a) You must enable low_power mode, because the iHD driver currently exposes only the VDENC (low-power) encode path.

(b) Coding option1 and extra_data are not supported by MSDK.

(c) MSDK inserts an IVF header by default, but FFmpeg does not need it, so it remains disabled by default.
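Before trying the vp9_qsv path, it can help to confirm that the driver actually exposes VP9 low-power encoding. A minimal sketch, assuming the iHD driver reports it as a VAProfileVP9Profile0 / VAEntrypointEncSliceLP pair in vainfo output (the i965 output further down lists only VAEntrypointEncSlice for VP9). The helper name has_vp9_lp_encode is hypothetical; it reads vainfo output on stdin.

```shell
# Succeeds if the vainfo output on stdin lists a low-power (VDENC)
# VP9 Profile 0 encode entrypoint; fails otherwise.
has_vp9_lp_encode() {
  grep -Eq '^[[:space:]]*VAProfileVP9Profile0[[:space:]]*:[[:space:]]*VAEntrypointEncSliceLP'
}
```

For example: `LIBVA_DRIVER_NAME=iHD vainfo --display drm --device /dev/dri/renderD128 | has_vp9_lp_encode && echo "VP9 low-power encode available"`.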

See the examples below:

- If you need to deinterlace, call up the vpp_qsv filter:

```shell
ffmpeg -nostdin -y -fflags +genpts \
-init_hw_device vaapi=va:/dev/dri/renderD128,driver=iHD \
-filter_hw_device va -hwaccel vaapi -hwaccel_output_format vaapi \
-threads 4 -vsync 1 -async 1 \
-i 'http://server:port' \
-filter_complex "[0:v]hwmap=derive_device=qsv,format=qsv,vpp_qsv=deinterlace=2:async_depth=4,split=3[n0][n1][n2]; \
[n0]vpp_qsv=w=1152:h=648:async_depth=4[v0]; \
[n1]vpp_qsv=w=848:h=480:async_depth=4[v1]; \
[n2]vpp_qsv=w=640:h=360:async_depth=4[v2]" \
-b:v:0 2250k -maxrate:v:0 2250k -bufsize:v:0 360k -c:v:0 vp9_qsv -g:v:0 50 -r:v:0 25 -low_power:v:0 1 \
-b:v:1 1750k -maxrate:v:1 1750k -bufsize:v:1 280k -c:v:1 vp9_qsv -g:v:1 50 -r:v:1 25 -low_power:v:1 1 \
-b:v:2 1000k -maxrate:v:2 1000k -bufsize:v:2 160k -c:v:2 vp9_qsv -g:v:2 50 -r:v:2 25 -low_power:v:2 1 \
-c:a aac -b:a 128k -ar 48000 -ac 2 \
-flags -global_header -f tee -use_fifo 1 \
-map "[v0]" -map "[v1]" -map "[v2]" -map 0:a \
"[select=\'v:0,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path0/output%03d.mp4| \
[select=\'v:1,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path1/output%03d.mp4| \
[select=\'v:2,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path2/output%03d.mp4"
```

- Without deinterlacing:

```shell
ffmpeg -nostdin -y -fflags +genpts \
-init_hw_device vaapi=va:/dev/dri/renderD128,driver=iHD \
-filter_hw_device va -hwaccel vaapi -hwaccel_output_format vaapi \
-threads 4 -vsync 1 -async 1 \
-i 'http://server:port' \
-filter_complex "[0:v]hwmap=derive_device=qsv,format=qsv,split=3[n0][n1][n2]; \
[n0]vpp_qsv=w=1152:h=648:async_depth=4[v0]; \
[n1]vpp_qsv=w=848:h=480:async_depth=4[v1]; \
[n2]vpp_qsv=w=640:h=360:async_depth=4[v2]" \
-b:v:0 2250k -maxrate:v:0 2250k -bufsize:v:0 2250k -c:v:0 vp9_qsv -g:v:0 50 -r:v:0 25 -low_power:v:0 1 \
-b:v:1 1750k -maxrate:v:1 1750k -bufsize:v:1 1750k -c:v:1 vp9_qsv -g:v:1 50 -r:v:1 25 -low_power:v:1 1 \
-b:v:2 1000k -maxrate:v:2 1000k -bufsize:v:2 1000k -c:v:2 vp9_qsv -g:v:2 50 -r:v:2 25 -low_power:v:2 1 \
-c:a aac -b:a 128k -ar 48000 -ac 2 \
-flags -global_header -f tee -use_fifo 1 \
-map "[v0]" -map "[v1]" -map "[v2]" -map 0:a \
"[select=\'v:0,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path0/output%03d.mp4| \
[select=\'v:1,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path1/output%03d.mp4| \
[select=\'v:2,a\':f=segment:segment_time=5:segment_format_options=movflags=+faststart]$output_path2/output%03d.mp4"
```

Note that we use the vpp_qsv filter with the async_depth option (its internal parallelization depth; higher values add latency) set to 4. This greatly improves transcode performance compared to using scale_qsv and deinterlace_qsv. See this commit on FFmpeg's git.

Note: on the QuickSync path, MFE (Multi-Frame Encoding) is enabled by default if the Media SDK library on your system supports it.

Formulae used to derive the snippet above:

Optimal bufsize:v = target bitrate (-b:v value)

Set GOP size as: 2 * fps (GOP interval set to 2 seconds).
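The two formulae can be turned into a small helper that emits the per-rendition rate-control arguments. This is a sketch under a few assumptions: the frame rate is an integer (Bash arithmetic has no fractions, so 29.97 fps would need different handling), maxrate is set equal to the target bitrate as in the snippets, and the caller appends -rc_mode or -low_power since those differ between the VAAPI and QSV snippets. rung_args is a hypothetical name.

```shell
# Emit rate-control arguments for one output video stream:
# maxrate = bufsize = target bitrate, GOP = 2 * fps (2-second keyframe interval).
# Arguments: output stream index, bitrate in kbit/s, integer fps, encoder name.
rung_args() {
  local idx=$1 kbps=$2 fps=$3 enc=$4
  local gop=$((2 * fps))
  printf -- '-b:v:%s %sk -maxrate:v:%s %sk -bufsize:v:%s %sk -c:v:%s %s -g:v:%s %s -r:v:%s %s\n' \
    "$idx" "$kbps" "$idx" "$kbps" "$idx" "$kbps" "$idx" "$enc" "$idx" "$gop" "$idx" "$fps"
}
```

`rung_args 0 2250 25 vp9_vaapi` prints the rate-control portion of the first rendition line in the non-deinterlacing VAAPI snippet; leaving the command substitution unquoted lets the shell split it into separate arguments.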

We limit the thread counts for the video encoder(s) via -threads:v to prevent VBV overflows.

Resolution ladder used: 648p, 480p and 360p in 16:9 (1152x648, 848x480 and 640x360), see this link.

Adjust this as needed.
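When adjusting the ladder, the widths can be derived from the heights. A sketch that assumes the widths are rounded down to a multiple of 16; this rounding rule is an assumption that happens to reproduce the sizes above (an exact 16:9 width for 480p would be about 853.3, and 848 is the nearest multiple of 16 below it). width_16x9 is a hypothetical helper.

```shell
# Derive a 16:9 width for a given height, rounded down to a multiple of 16.
width_16x9() {
  local h=$1
  local w=$(( h * 16 / 9 ))   # 16:9 width, truncated to an integer
  echo $(( w / 16 * 16 ))     # round down to a multiple of 16
}
```

`width_16x9 480` prints 848, while 648 and 360 give 1152 and 640 respectively.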

Substitute the variables above ($output_path{0-2}, the input URL, etc.) as needed.

Test and report back.

Current observations:

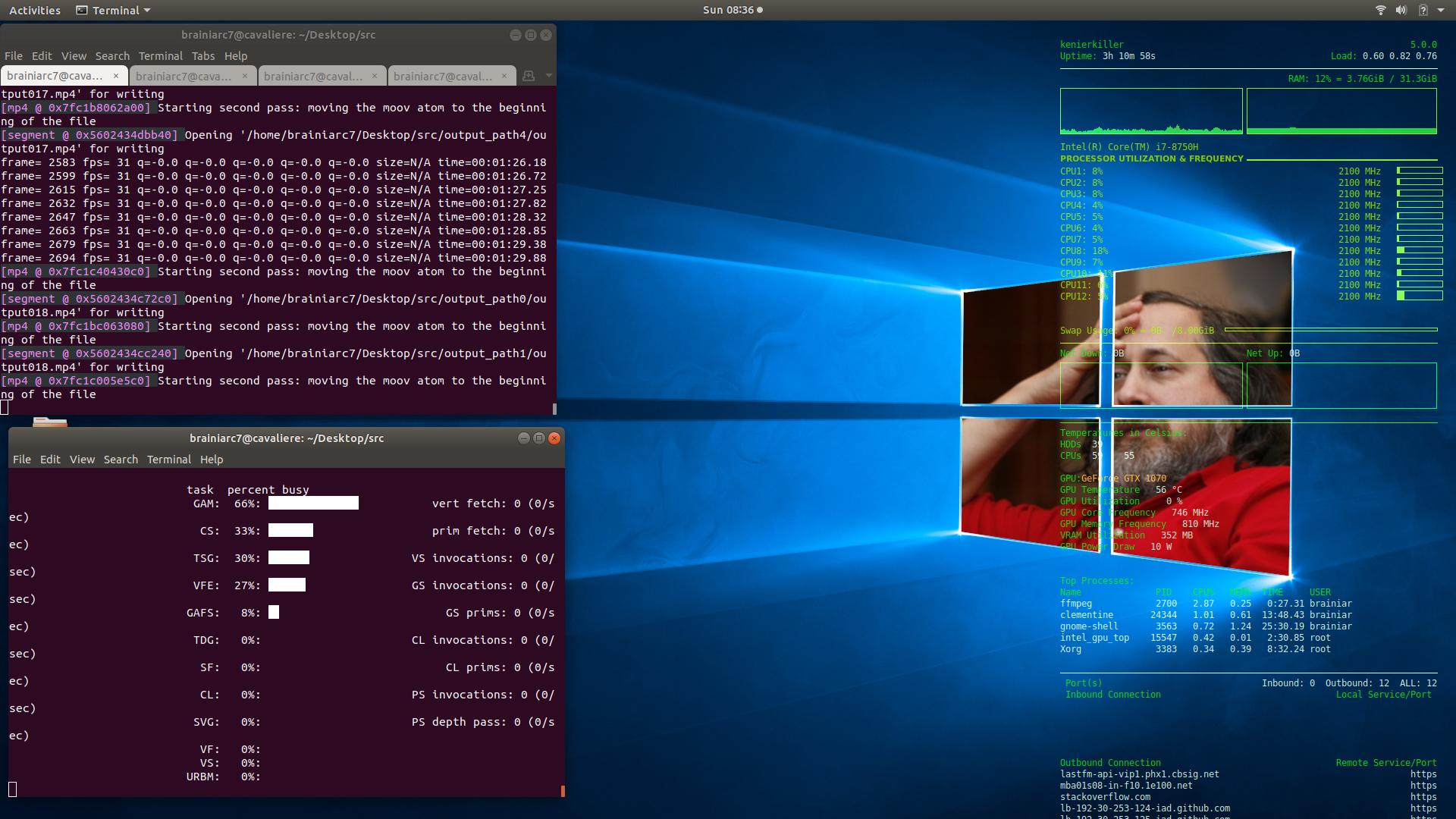

On my system, I'm able to encode up to 5 streams in real time with VP9, using Apple's recommended resolutions and bitrates for HEVC encoding in HLS as a benchmark. See the picture below for system load, etc.

![system load and stats with 5 simultaneous VAAPI encodes in progress]()

Platform details:

I'm on a Coffee Lake system, using the i965 driver for this workflow:

```text
libva info: VA-API version 1.5.0
libva info: va_getDriverName() returns 0
libva info: User requested driver 'i965'
libva info: Trying to open /usr/lib/x86_64-linux-gnu/dri/i965_drv_video.so
libva info: Found init function __vaDriverInit_1_5
libva info: va_openDriver() returns 0
vainfo: VA-API version: 1.5 (libva 2.4.0.pre1)
vainfo: Driver version: Intel i965 driver for Intel(R) Coffee Lake - 2.4.0.pre1 (2.3.0-11-g881e67a)
vainfo: Supported profile and entrypoints
VAProfileMPEG2Simple : VAEntrypointVLD
VAProfileMPEG2Simple : VAEntrypointEncSlice
VAProfileMPEG2Main : VAEntrypointVLD
VAProfileMPEG2Main : VAEntrypointEncSlice
VAProfileH264ConstrainedBaseline: VAEntrypointVLD
VAProfileH264ConstrainedBaseline: VAEntrypointEncSlice
VAProfileH264ConstrainedBaseline: VAEntrypointEncSliceLP
VAProfileH264Main : VAEntrypointVLD
VAProfileH264Main : VAEntrypointEncSlice
VAProfileH264Main : VAEntrypointEncSliceLP
VAProfileH264High : VAEntrypointVLD
VAProfileH264High : VAEntrypointEncSlice
VAProfileH264High : VAEntrypointEncSliceLP
VAProfileH264MultiviewHigh : VAEntrypointVLD
VAProfileH264MultiviewHigh : VAEntrypointEncSlice
VAProfileH264StereoHigh : VAEntrypointVLD
VAProfileH264StereoHigh : VAEntrypointEncSlice
VAProfileVC1Simple : VAEntrypointVLD
VAProfileVC1Main : VAEntrypointVLD
VAProfileVC1Advanced : VAEntrypointVLD
VAProfileNone : VAEntrypointVideoProc
VAProfileJPEGBaseline : VAEntrypointVLD
VAProfileJPEGBaseline : VAEntrypointEncPicture
VAProfileVP8Version0_3 : VAEntrypointVLD
VAProfileVP8Version0_3 : VAEntrypointEncSlice
VAProfileHEVCMain : VAEntrypointVLD
VAProfileHEVCMain : VAEntrypointEncSlice
VAProfileHEVCMain10 : VAEntrypointVLD
VAProfileHEVCMain10 : VAEntrypointEncSlice
VAProfileVP9Profile0 : VAEntrypointVLD
VAProfileVP9Profile0 : VAEntrypointEncSlice
VAProfileVP9Profile2 : VAEntrypointVLD
```

My FFmpeg build info:

```shell
ffmpeg -buildconf
```

```text
ffmpeg version N-93308-g1144d5c96d Copyright (c) 2000-2019 the FFmpeg developers
built with gcc 7 (Ubuntu 7.3.0-27ubuntu1~18.04)
configuration: --pkg-config-flags=--static --prefix=/home/brainiarc7/bin --bindir=/home/brainiarc7/bin --extra-cflags=-I/home/brainiarc7/bin/include --extra-ldflags=-L/home/brainiarc7/bin/lib --enable-cuda-nvcc --enable-cuvid --enable-libnpp --extra-cflags=-I/usr/local/cuda/include/ --extra-ldflags=-L/usr/local/cuda/lib64/ --enable-nvenc --extra-cflags=-I/opt/intel/mediasdk/include --extra-ldflags=-L/opt/intel/mediasdk/lib --extra-ldflags=-L/opt/intel/mediasdk/plugins --enable-libmfx --enable-libass --enable-vaapi --disable-debug --enable-libvorbis --enable-libvpx --enable-libdrm --enable-opencl --enable-gpl --cpu=native --enable-opengl --enable-libfdk-aac --enable-libx265 --enable-openssl --extra-libs='-lpthread -lm' --enable-nonfree
libavutil 56. 26.100 / 56. 26.100
libavcodec 58. 47.103 / 58. 47.103
libavformat 58. 26.101 / 58. 26.101
libavdevice 58. 6.101 / 58. 6.101
libavfilter 7. 48.100 / 7. 48.100
libswscale 5. 4.100 / 5. 4.100
libswresample 3. 4.100 / 3. 4.100
libpostproc 55. 4.100 / 55. 4.100

configuration:
--pkg-config-flags=--static
--prefix=/home/brainiarc7/bin
--bindir=/home/brainiarc7/bin
--extra-cflags=-I/home/brainiarc7/bin/include
--extra-ldflags=-L/home/brainiarc7/bin/lib
--enable-cuda-nvcc
--enable-cuvid
--enable-libnpp
--extra-cflags=-I/usr/local/cuda/include/
--extra-ldflags=-L/usr/local/cuda/lib64/
--enable-nvenc
--extra-cflags=-I/opt/intel/mediasdk/include
--extra-ldflags=-L/opt/intel/mediasdk/lib
--extra-ldflags=-L/opt/intel/mediasdk/plugins
--enable-libmfx
--enable-libass
--enable-vaapi
--disable-debug
--enable-libvorbis
--enable-libvpx
--enable-libdrm
--enable-opencl
--enable-gpl
--cpu=native
--enable-opengl
--enable-libfdk-aac
--enable-libx265
--enable-openssl
--extra-libs='-lpthread -lm'
--enable-nonfree
```

And the output from inxi:

```shell
inxi -F
```

```text
System: Host: cavaliere Kernel: 5.0.0 x86_64 bits: 64 Desktop: Gnome 3.28.3 Distro: Ubuntu 18.04.2 LTS
Machine: Device: laptop System: ASUSTeK product: Zephyrus M GM501GS v: 1.0 serial: N/A
Mobo: ASUSTeK model: GM501GS v: 1.0 serial: N/A
UEFI: American Megatrends v: GM501GS.308 date: 10/01/2018
Battery BAT0: charge: 49.3 Wh 100.0% condition: 49.3/55.0 Wh (90%)
CPU: 6 core Intel Core i7-8750H (-MT-MCP-) cache: 9216 KB
clock speeds: max: 4100 MHz 1: 2594 MHz 2: 3197 MHz 3: 3633 MHz 4: 3514 MHz 5: 3582 MHz 6: 3338 MHz
7: 3655 MHz 8: 3684 MHz 9: 1793 MHz 10: 3651 MHz 11: 3710 MHz 12: 3662 MHz
Graphics: Card-1: Intel Device 3e9b
Card-2: NVIDIA GP104M [GeForce GTX 1070 Mobile]
Display Server: x11 (X.Org 1.19.6 ) drivers: modesetting,nvidia (unloaded: fbdev,vesa,nouveau)
OpenGL: renderer: GeForce GTX 1070/PCIe/SSE2 version: 4.6.0 NVIDIA 418.43
Audio: Card-1 Intel Cannon Lake PCH cAVS driver: snd_hda_intel Sound: ALSA v: k5.0.0
Card-2 NVIDIA GP104 High Definition Audio Controller driver: snd_hda_intel
Card-3 Kingston driver: USB Audio
Network: Card: Intel Wireless-AC 9560 [Jefferson Peak] driver: iwlwifi
IF: wlo1 state: up mac: (redacted)
Drives: HDD Total Size: 3050.6GB (94.5% used)
ID-1: /dev/nvme0n1 model: Samsung_SSD_960_EVO_1TB size: 1000.2GB
ID-2: /dev/sda model: Crucial_CT2050MX size: 2050.4GB
Partition: ID-1: / size: 246G used: 217G (94%) fs: ext4 dev: /dev/nvme0n1p5
ID-2: swap-1 size: 8.59GB used: 0.00GB (0%) fs: swap dev: /dev/nvme0n1p6
RAID: No RAID devices: /proc/mdstat, md_mod kernel module present
Sensors: System Temperatures: cpu: 64.0C mobo: N/A gpu: 61C
Fan Speeds (in rpm): cpu: N/A
Info: Processes: 412 Uptime: 3:32 Memory: 4411.3/32015.5MB Client: Shell (bash) inxi: 2.3.56
```

Why that last bit is included:

I'm running the latest Linux kernel as of this writing, version 5.0, and the same applies to the graphics driver stack on Ubuntu 18.04 LTS.

FFmpeg was built as shown above because this laptop has both the NVIDIA and Intel GPUs enabled via Optimus. That way, I can tap into the VAAPI, QuickSync and NVENC hwaccels as needed. Your mileage may vary, even if our hardware is identical.

References:

- See the encoder options, including the supported rate control methods:

```shell
ffmpeg -h encoder=vp9_vaapi
```

- See the deinterlace_vaapi filter usage options:

```shell
ffmpeg -h filter=deinterlace_vaapi
```

- For vpp_qsv filter usage, see:

```shell
ffmpeg -h filter=vpp_qsv
```

For instance, if you want field-rate output rather than frame-rate output from the deinterlacer, you could pass it the rate=field option instead:

```shell
-vf deinterlace_vaapi=rate=field
```

Inside the -filter_complex graphs above, the equivalent is deinterlace_vaapi=rate=field. This feature is tied to encoders that support MBAFF; others, such as the NVENC-based encoders in FFmpeg, do not implement it (as of the time of writing).

Tips on getting ahead with FFmpeg:

Where possible, refer to the built-in docs, as with the examples shown above.

They can uncover potential pitfalls, which you can often avoid by understanding how filter chaining and encoder initialization work, which features are unsupported, and what impact each choice has on performance.

For example, in the snippets above we call up the deinterlacer only once, then split its output via the split filter to separate scalers. This lowers overhead: calling up the deinterlacer once per output would have repeated the same work, which is wasteful.
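The same one-deinterlacer, N-scaler layout can be generated for any number of renditions. A minimal Bash sketch that builds the -filter_complex string used in the VAAPI deinterlacing snippet; build_vaapi_graph is a hypothetical helper, and sizes are passed as WxH.

```shell
# Build a VAAPI filtergraph that deinterlaces once, splits the result N ways
# and gives each branch its own scale_vaapi instance.
# Arguments: one WxH size per rendition, e.g. 1152x648 848x480 640x360.
build_vaapi_graph() {
  local n=$# i=0 size graph splits=""
  # One split output label per rendition: [n0][n1]...
  for size in "$@"; do splits="${splits}[n${i}]"; i=$((i + 1)); done
  graph="[0:v]deinterlace_vaapi,split=${n}${splits}"
  i=0
  for size in "$@"; do
    # ${size%x*} is the width, ${size#*x} is the height.
    graph="${graph};[n${i}]scale_vaapi=${size%x*}:${size#*x}[v${i}]"
    i=$((i + 1))
  done
  printf '%s\n' "$graph"
}
```

`build_vaapi_graph 1152x648 848x480 640x360` reproduces the graph from the deinterlacing VAAPI snippet, minus the whitespace; the resulting [v0], [v1], ... labels are what the -map options refer to.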

Warning:

Note that the SDK requires at least 2 threads to prevent deadlock; see this code block. This is why we set -threads 4 in ffmpeg.