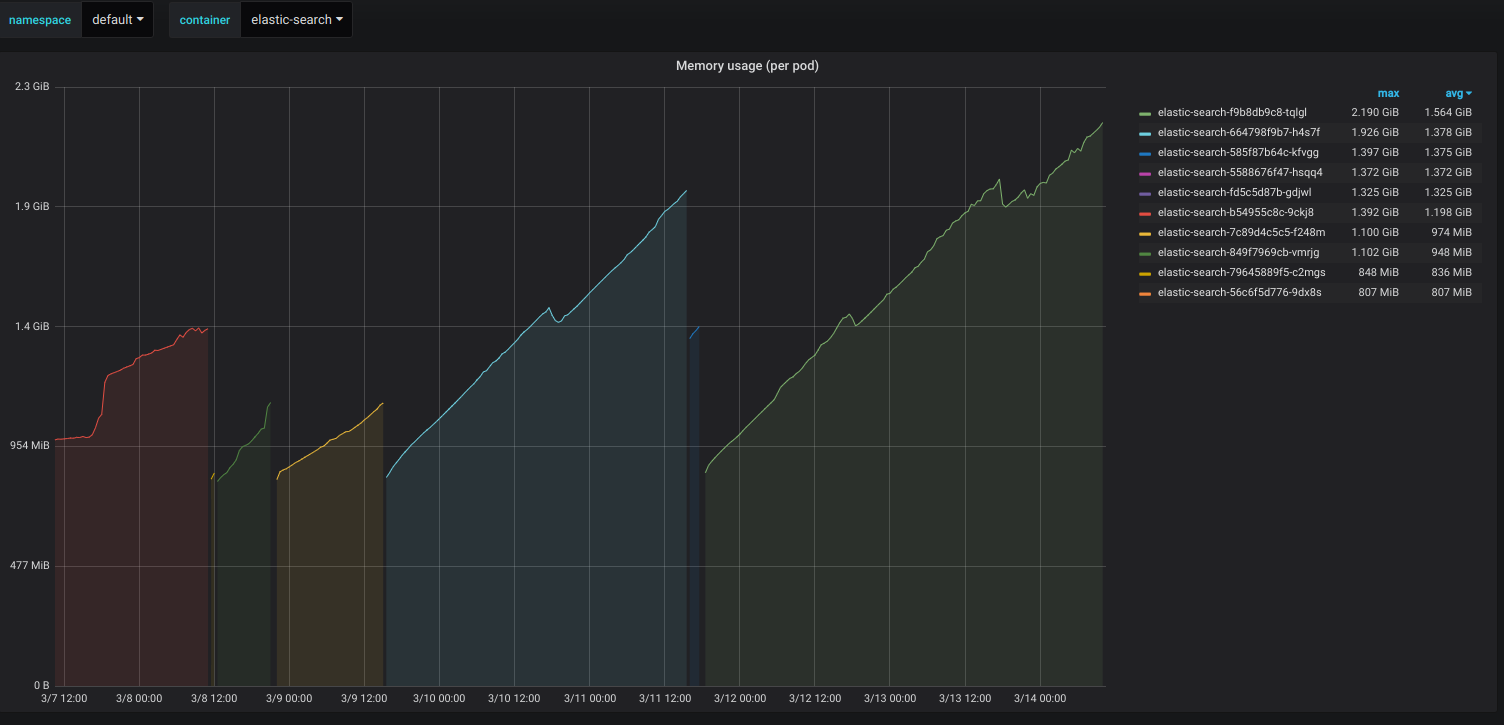

I have deployed an Elasticsearch container on AWS in an EKS Kubernetes cluster. The container's memory usage keeps increasing even though there are only 3 indices and they are not used heavily. I am shipping the cluster's container logs into Elasticsearch using Fluentd; other than that, Elasticsearch is not used for anything. I tried setting the min/max heap size with -Xms512m -Xmx512m. The setting is applied successfully, but the memory usage still almost doubles in 24 hours. I am not sure what other options I can configure. I tried changing the Docker image from elasticsearch:6.5.4 to elasticsearch:6.5.1, but the issue persists. I also tried the -XX:MaxHeapFreeRatio=50 Java option.

See the Kibana screenshot above.

Edit: The following are the logs from Elasticsearch start-up:

[2019-03-18T13:24:03,119][WARN ][o.e.b.JNANatives ] [es-79c977d57-v77gw] Unable to lock JVM Memory: error=12, reason=Cannot allocate memory

[2019-03-18T13:24:03,120][WARN ][o.e.b.JNANatives ] [es-79c977d57-v77gw] This can result in part of the JVM being swapped out.

[2019-03-18T13:24:03,120][WARN ][o.e.b.JNANatives ] [es-79c977d57-v77gw] Increase RLIMIT_MEMLOCK, soft limit: 16777216, hard limit: 16777216

[2019-03-18T13:24:03,120][WARN ][o.e.b.JNANatives ] [es-79c977d57-v77gw] These can be adjusted by modifying /etc/security/limits.conf, for example:

# allow user 'elasticsearch' mlockall

elasticsearch soft memlock unlimited

elasticsearch hard memlock unlimited

[2019-03-18T13:24:03,120][WARN ][o.e.b.JNANatives ] [es-79c977d57-v77gw] If you are logged in interactively, you will have to re-login for the new limits to take effect.

[2019-03-18T13:24:03,397][INFO ][o.e.e.NodeEnvironment ] [es-79c977d57-v77gw] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/xvda1)]], net usable_space [38.6gb], net total_space [96.8gb], types [ext4]

[2019-03-18T13:24:03,397][INFO ][o.e.e.NodeEnvironment ] [es-79c977d57-v77gw] heap size [503.6mb], compressed ordinary object pointers [true]

[2019-03-18T13:24:03,469][INFO ][o.e.n.Node ] [es-79c977d57-v77gw] node name [es-79c977d57-v77gw], node ID [qrCUCaHoQfa3SXuTpLjUUA]

[2019-03-18T13:24:03,469][INFO ][o.e.n.Node ] [es-79c977d57-v77gw] version[6.5.1], pid[1], build[default/tar/8c58350/2018-11-16T02:22:42.182257Z], OS[Linux/4.15.0-1032-aws/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/11.0.1/11.0.1+13]

[2019-03-18T13:24:03,469][INFO ][o.e.n.Node ] [es-79c977d57-v77gw] JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch.oEmM9oSp, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m, -Djava.locale.providers=COMPAT, -XX:UseAVX=2, -Des.cgroups.hierarchy.override=/, -Xms512m, -Xmx512m, -Des.path.home=/usr/share/elasticsearch, -Des.path.conf=/usr/share/elasticsearch/config, -Des.distribution.flavor=default, -Des.distribution.type=tar]

[2019-03-18T13:24:05,082][INFO ][o.e.p.PluginsService ] [es-79c977d57-v77gw] loaded module [aggs-matrix-stats]

[2019-03-18T13:24:05,082][INFO ][o.e.p.PluginsService ] [es-79c977d57-v77gw] loaded module [analysis-common]

[2019-03-18T13:24:05,082][INFO ][o.e.p.PluginsService ] [es-79c977d57-v77gw] loaded module [ingest-common] ....
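The JVM arguments line in the log lists both -Xms1g/-Xmx1g and -Xms512m/-Xmx512m. As far as I understand, HotSpot uses the last occurrence of a repeated flag, which matches the reported heap size [503.6mb]. Below is a minimal Python sketch of how I checked which heap flags win. The helper name `effective_heap_flags` is my own, and the argument list is shortened from the log line; it only inspects the logged text and says nothing about off-heap memory.

```python
import re

# Abbreviated copy of the "JVM arguments" line from the startup log.
JVM_ARGS_LINE = (
    "JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, "
    "-XX:+AlwaysPreTouch, -Xss1m, -Des.cgroups.hierarchy.override=/, "
    "-Xms512m, -Xmx512m, -Des.path.home=/usr/share/elasticsearch]"
)


def effective_heap_flags(line):
    """Return the last -Xms and -Xmx values listed in a 'JVM arguments [...]' line.

    Assumption: the JVM honours the last occurrence of a repeated flag.
    """
    match = re.search(r"JVM arguments \[(.*)\]", line)
    if match is None:
        raise ValueError("no JVM arguments list found")
    flags = {}
    for arg in match.group(1).split(", "):
        heap_flag = re.fullmatch(r"-(Xms|Xmx)(\d+[kKmMgG]?)", arg)
        if heap_flag:
            # Later occurrences overwrite earlier ones.
            flags[heap_flag.group(1)] = heap_flag.group(2)
    return flags


print(effective_heap_flags(JVM_ARGS_LINE))
# {'Xms': '512m', 'Xmx': '512m'}
```

So the 512m heap setting does seem to take effect, and the growth I see is in the container's total memory rather than in the configured heap size.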

ES_JAVA_OPTS. When the container starts, it uses memory according to the specified heap size, but the memory consumption then gradually increases. – Kratz