I have the following table:

id | number_of _trip | start_date | end_date | seconds

1 637hui 2022-03-10 01:20:00 2022-03-10 01:32:00 720

2 384nfj 2022-03-10 02:18:00 2022-03-10 02:42:00 1440

3 102fiu 2022-03-10 02:10:00 2022-03-10 02:23:00 780

4 948pvc 2022-03-10 02:40:00 2022-03-10 03:20:00 2400

5 473mds 2022-03-10 02:45:00 2022-03-10 02:58:00 780

6 103fkd 2022-03-10 03:05:00 2022-03-10 03:28:00 1380

7 905783 2022-03-10 03:12:00 null 0

8 498wsq 2022-03-10 05:30:00 2022-03-10 05:48:00 1080

I want to get the time that is driven for each hour, but if a trip takes the space of two hours, the time must be taken for each hour.

If the end of the trip has not yet finished, the end_date field is null, but it must count the time it is taking in the respective hours from start_date.

I have the following query:

SELECT time_bucket(bucket_width := INTERVAL '1 hour',ts := start_date, "offset" := '0 minutes') AS init_date,

sum(seconds) as seconds

FROM trips

WHERE start_date >= '2022-03-10 01:00:00' AND start_date <= '2022-03-10 06:00:00'

GROUP BY init_date

ORDER BY init_date;

The result is:

| init_date | seconds

2022-03-10 01:00:00 720

2022-03-10 02:00:00 5400

2022-03-10 03:00:00 1380

2022-03-10 05:00:00 1080

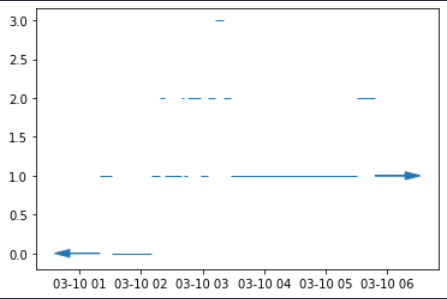

However I expect to receive a result like this:

| init_date | seconds solo como una ayuda visual

2022-03-10 01:00:00 720 id(1:720)

2022-03-10 02:00:00 4200 id(2: 1440 3: 780 4: 1200 5: 780)

2022-03-10 03:00:00 5460 id(4:1200 6:1380 7:2880)

2022-03-10 05:00:00 1080 id(8:1080)

EDIT

If I replace the null the result is still unwanted:

| init_date | seconds

2022-03-10 01:00:00 720

2022-03-10 02:00:00 5400

2022-03-10 03:00:00 1380

2022-03-10 05:00:00 1080

I have been thinking about getting all the data and solving the problem with pandas. I'll try and post if I get the answer. EDIT

My previous result was not entirely correct, since there were hours left of a trip that has not yet finished, the correct result should be:

start_date seconds

0 2022-03-10 01:00:00 720

1 2022-03-10 02:00:00 4200

2 2022-03-10 03:00:00 5460

3 2022-03-10 04:00:00 3600

4 2022-03-10 05:00:00 4680

NEW CODE

def bucket_count(bucket, data):

result = pd.DataFrame()

list_r = []

for row_bucket in bucket.to_dict('records'):

inicio = row_bucket['start_date']

fin = row_bucket['end_date']

df = data[

(inicio <= data['end_date']) & (inicio <= fin) & (data['start_date'] <= fin) & (data['start_date'] <= data['end_date'])

]

df_dict = df.to_dict('records')

for row in df_dict:

seconds = 0

if row['start_date'] >= inicio and fin >= row['end_date']:

seconds = (row['end_date'] - row['start_date']).total_seconds()

elif row['start_date'] <= inicio <= row['end_date'] <= fin:

seconds = (row['end_date'] - inicio).total_seconds()

elif inicio <= row['start_date'] <= fin <= row['end_date']:

seconds = (fin - row['start_date']).total_seconds()

elif row['start_date'] < inicio and fin < row['end_date']:

seconds = (fin - inicio).total_seconds()

row['start_date'] = inicio

row['end_date'] = fin

row['seconds'] = seconds

list_r.append(row)

result = pd.DataFrame(list_r)

return result.groupby(['start_date'])["seconds"].apply(lambda x: x.astype(int).sum()).reset_index()

timescaledb- so it's PostgreSQL. – Riti