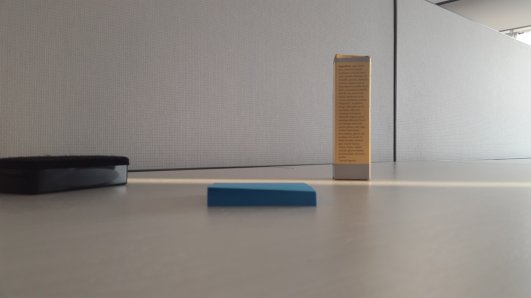

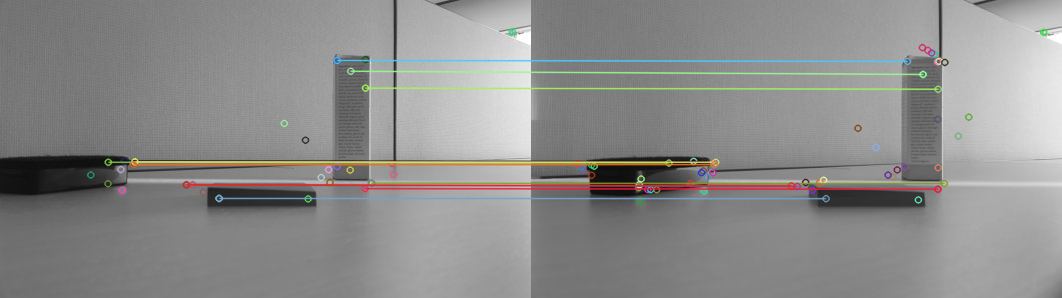

I am trying to use StereoBM to compute a disparity map from two images. The sample code and sample images I tried work fine. However, when I use my own images, the resulting disparity map is very bad and very noisy.

My StereoBM parameters:

    sbm.state->SADWindowSize = 25;
    sbm.state->numberOfDisparities = 128;
    sbm.state->preFilterSize = 5;
    sbm.state->preFilterCap = 61;
    sbm.state->minDisparity = -39;
    sbm.state->textureThreshold = 507;
    sbm.state->uniquenessRatio = 0;
    sbm.state->speckleWindowSize = 0;
    sbm.state->speckleRange = 8;
    sbm.state->disp12MaxDiff = 1;
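To rule out plainly invalid settings, here is a minimal sketch in plain C++ (no OpenCV required) that checks the values above against the ranges StereoBM enforces as far as I understand them: an odd window size within 5..255, a positive number of disparities divisible by 16, an odd pre-filter size within 5..255, a pre-filter cap within 1..63, and non-negative texture threshold and uniqueness ratio. `BMParams` and `checkBMParams` are hypothetical names made up for this check, not part of OpenCV.

```cpp
#include <string>
#include <vector>

// Hypothetical plain struct mirroring the StereoBM state fields that have range limits.
struct BMParams {
    int SADWindowSize;
    int numberOfDisparities;
    int preFilterSize;
    int preFilterCap;
    int textureThreshold;
    int uniquenessRatio;
};

// Returns one message per violated constraint; an empty result means the
// values are inside the ranges assumed here (taken from my reading of OpenCV).
std::vector<std::string> checkBMParams(const BMParams& p) {
    std::vector<std::string> problems;
    if (p.SADWindowSize % 2 == 0 || p.SADWindowSize < 5 || p.SADWindowSize > 255)
        problems.push_back("SADWindowSize must be odd and within 5..255");
    if (p.numberOfDisparities <= 0 || p.numberOfDisparities % 16 != 0)
        problems.push_back("numberOfDisparities must be positive and divisible by 16");
    if (p.preFilterSize % 2 == 0 || p.preFilterSize < 5 || p.preFilterSize > 255)
        problems.push_back("preFilterSize must be odd and within 5..255");
    if (p.preFilterCap < 1 || p.preFilterCap > 63)
        problems.push_back("preFilterCap must be within 1..63");
    if (p.textureThreshold < 0)
        problems.push_back("textureThreshold must be non-negative");
    if (p.uniquenessRatio < 0)
        problems.push_back("uniquenessRatio must be non-negative");
    return problems;
}
```

Calling `checkBMParams({25, 128, 5, 61, 507, 0})` with my values returns no problems, so the parameters are at least inside the accepted ranges. That says nothing about whether they suit my images.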

My questions are:

- Is there anything wrong with my images?

- Is it possible to get a good disparity map without calibrating the camera? Do I need to rectify the images before running StereoBM?

Thanks.

Here is my code for rectifying the images and computing the disparity map:

    Mat img_1 = imread( "image1.jpg", CV_LOAD_IMAGE_GRAYSCALE );
    Mat img_2 = imread( "image2.jpg", CV_LOAD_IMAGE_GRAYSCALE );

    int minHessian = 430;
    SurfFeatureDetector detector( minHessian );
    std::vector<KeyPoint> keypoints_1, keypoints_2;
    detector.detect( img_1, keypoints_1 );
    detector.detect( img_2, keypoints_2 );

    //-- Step 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    Mat descriptors_1, descriptors_2;
    extractor.compute( img_1, keypoints_1, descriptors_1 );
    extractor.compute( img_2, keypoints_2, descriptors_2 );

    //-- Step 3: Matching descriptor vectors with a brute force matcher
    BFMatcher matcher(NORM_L1, true); //BFMatcher matcher(NORM_L2);
    std::vector< DMatch > matches;
    matcher.match( descriptors_1, descriptors_2, matches );

    double max_dist = 0; double min_dist = 100;

    //-- Quick calculation of max and min distances between keypoints
    for( int i = 0; i < matches.size(); i++ )
    {
        double dist = matches[i].distance;
        if( dist < min_dist ) min_dist = dist;
        if( dist > max_dist ) max_dist = dist;
    }

    std::vector< DMatch > good_matches;
    vector<Point2f>imgpts1,imgpts2;
    for( int i = 0; i < matches.size(); i++ )
    {
        if( matches[i].distance <= max(4.5*min_dist, 0.02) ){
            good_matches.push_back( matches[i]);
            imgpts1.push_back(keypoints_1[matches[i].queryIdx].pt);
            imgpts2.push_back(keypoints_2[matches[i].trainIdx].pt);
        }
    }

    std::vector<uchar> status;
    cv::Mat F = cv::findFundamentalMat(imgpts1, imgpts2, cv::FM_8POINT, 3., 0.99, status); //FM_RANSAC

    Mat H1,H2;
    cv::stereoRectifyUncalibrated(imgpts1, imgpts1, F, img_1.size(), H1, H2);

    cv::Mat rectified1(img_1.size(), img_1.type());
    cv::warpPerspective(img_1, rectified1, H1, img_1.size());

    cv::Mat rectified2(img_2.size(), img_2.type());
    cv::warpPerspective(img_2, rectified2, H2, img_2.size());

    StereoBM sbm;
    sbm.state->SADWindowSize = 25;
    sbm.state->numberOfDisparities = 128;
    sbm.state->preFilterSize = 5;
    sbm.state->preFilterCap = 61;
    sbm.state->minDisparity = -39;
    sbm.state->textureThreshold = 507;
    sbm.state->uniquenessRatio = 0;
    sbm.state->speckleWindowSize = 0;
    sbm.state->speckleRange = 8;
    sbm.state->disp12MaxDiff = 1;

    Mat disp,disp8;
    sbm(rectified1, rectified2, disp);
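Since StereoBM searches for matches along image rows, one way to judge the rectification separately from the block matching would be to warp the matched points with the two homographies and see how far apart the rows of corresponding points end up. Below is a minimal sketch of that check in plain C++, assuming the homographies are copied out as row-major 3x3 arrays. `Pt`, `Homography`, `warpPoint` and `meanRowError` are hypothetical helpers written for illustration, not OpenCV functions.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };                // hypothetical stand-in for cv::Point2f
using Homography = std::array<double, 9>;  // row-major 3x3, stand-in for H1 / H2

// Apply a 3x3 homography to a point, including the perspective divide.
Pt warpPoint(const Homography& H, const Pt& p) {
    double w = H[6] * p.x + H[7] * p.y + H[8];
    return { (H[0] * p.x + H[1] * p.y + H[2]) / w,
             (H[3] * p.x + H[4] * p.y + H[5]) / w };
}

// Mean absolute row (y) difference between corresponding points after warping.
// Returns -1.0 if the point lists are empty or differ in length.
double meanRowError(const std::vector<Pt>& pts1, const std::vector<Pt>& pts2,
                    const Homography& H1, const Homography& H2) {
    if (pts1.empty() || pts1.size() != pts2.size()) return -1.0;
    double sum = 0.0;
    for (std::size_t i = 0; i < pts1.size(); ++i)
        sum += std::fabs(warpPoint(H1, pts1[i]).y - warpPoint(H2, pts2[i]).y);
    return sum / static_cast<double>(pts1.size());
}
```

With a good rectification I would expect this value to be close to zero (around a pixel or less). A large value would suggest that the problem lies in the matching or rectification step rather than in the StereoBM parameters.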

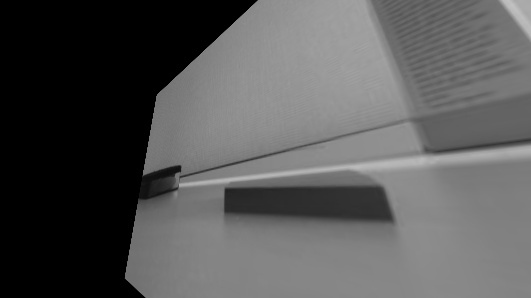

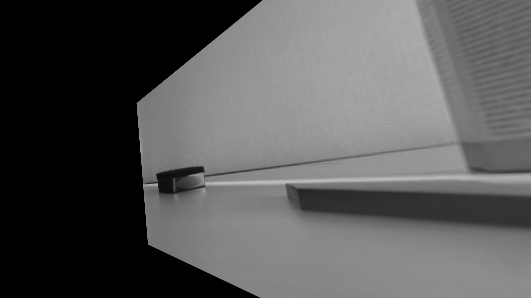

The rectified images and the disparity map are shown above.