We are trying to understand how the Windows CPU scheduler works in order to optimize our applications for the best possible ratio of real work to infrastructure overhead. There are some things in xperf that we don't understand, and we would like to ask the community to shed some light on what's really happening. We started investigating these issues when we got reports that some servers were "slow" or "unresponsive".

Background information

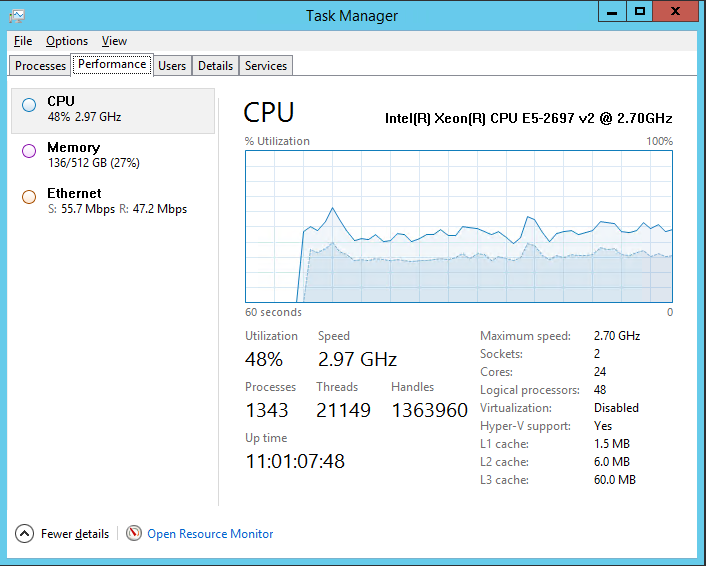

We have a Windows Server 2012 R2 machine that runs our middleware infrastructure with the following specs.

We found it concerning that 30% of CPU time is being spent in the kernel, so we started to dig deeper.

The server above runs ~500 "host" processes (as Windows services). Each "host" process has an inner while loop with a ~250 ms delay (yuck!) and may have ~1..2 "child" processes that execute the actual work.

Although the infinite loop iterates every 250 ms, actual useful work for the "host" application may appear only every 10..15 seconds, so a lot of cycles are wasted on unnecessary looping.
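To put rough numbers on this, here is a back-of-the-envelope sketch in Python. It uses only the approximate figures above (~500 hosts, ~250 ms delay, work every 10..15 s). The function name `polling_overhead` is made up for illustration, and it assumes that every loop iteration costs exactly one thread wake-up and that work arrives at a fixed interval, both of which are simplifications.

```python
def polling_overhead(hosts, delay_ms, work_interval_s):
    """Estimate total wake-ups per second and the share of iterations that find no work.

    Assumption: each loop iteration causes exactly one wake-up, and work
    arrives at a fixed interval. Real behaviour will be noisier.
    """
    wakeups_per_host = 1000.0 / delay_ms              # iterations per second, per host
    total_wakeups = hosts * wakeups_per_host          # iterations per second, all hosts
    iterations_per_work_item = work_interval_s * wakeups_per_host
    idle_fraction = 1.0 - 1.0 / iterations_per_work_item
    return total_wakeups, idle_fraction


for interval in (10, 15):
    total, idle = polling_overhead(hosts=500, delay_ms=250, work_interval_s=interval)
    print(f"work every {interval}s: {total:.0f} wake-ups/s, {idle:.1%} find no work")
```

Under these assumptions the hosts together wake about 2000 times per second, and roughly 97.5% to 98.3% of those wake-ups find nothing to do.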

We are aware that the design of the "host" application is sub-optimal, to say the least, for our scenario. The application is being changed to an event-based model which will not require the loop, and we therefore expect a significant reduction of "kernel" time in the CPU utilization graph.
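To illustrate the difference between the two models, here is a minimal Python sketch. It is not our actual "host" code: the function names, the `stats` dictionary, and the use of `queue.Queue` as the work source are all hypothetical, and the polling delay is scaled down from 250 ms to 10 ms so the demo finishes quickly. The polling variant wakes on a timer whether or not there is work; the event-based variant blocks until something arrives.

```python
import queue
import threading
import time


def polling_host(work, stop, delay_s, stats):
    """Current design (sketch): wake up every delay_s and check for work."""
    while not stop.is_set():
        stats["wakeups"] += 1
        try:
            work.get_nowait()
        except queue.Empty:
            pass                      # woke up for nothing
        else:
            stats["handled"] += 1
        stop.wait(delay_s)            # sleep, but return early on shutdown


def event_host(work, stats):
    """Event-based design (sketch): block until an item arrives; None means shutdown."""
    while True:
        item = work.get()             # blocks without spinning
        stats["wakeups"] += 1
        if item is None:
            break
        stats["handled"] += 1


def run_demo(items=3, delay_s=0.01, run_for_s=0.3):
    """Feed the same number of work items to both variants and count wake-ups."""
    poll_q, poll_stats = queue.Queue(), {"wakeups": 0, "handled": 0}
    stop = threading.Event()
    for i in range(items):
        poll_q.put(i)
    t = threading.Thread(target=polling_host, args=(poll_q, stop, delay_s, poll_stats))
    t.start()
    time.sleep(run_for_s)             # let the polling loop keep spinning for a while
    stop.set()
    t.join()

    event_q, event_stats = queue.Queue(), {"wakeups": 0, "handled": 0}
    for i in range(items):
        event_q.put(i)
    event_q.put(None)                 # shutdown signal
    t = threading.Thread(target=event_host, args=(event_q, event_stats))
    t.start()
    t.join()
    return poll_stats, event_stats


if __name__ == "__main__":
    poll_stats, event_stats = run_demo()
    print("polling:", poll_stats)
    print("event-based:", event_stats)
```

In this sketch the event-based host wakes once per work item plus once for shutdown, while the polling host keeps waking for as long as it runs. Whether the same saving shows up as reduced kernel time on the real server is exactly what we expect, but have not yet measured.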

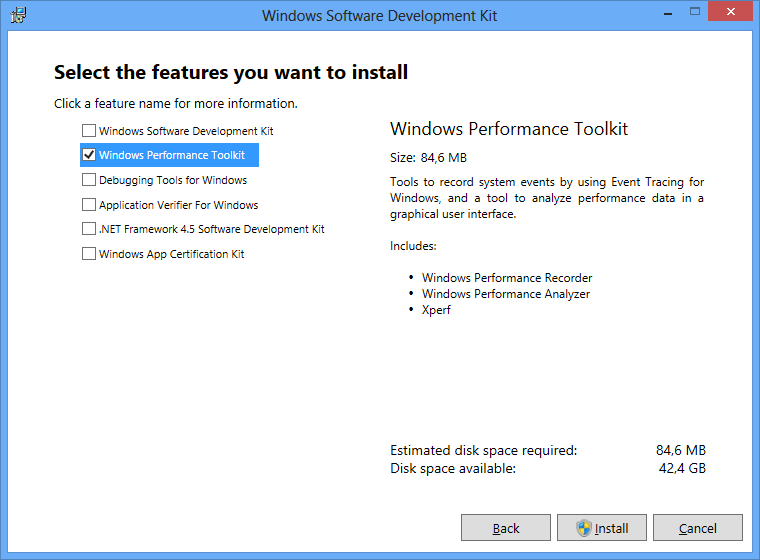

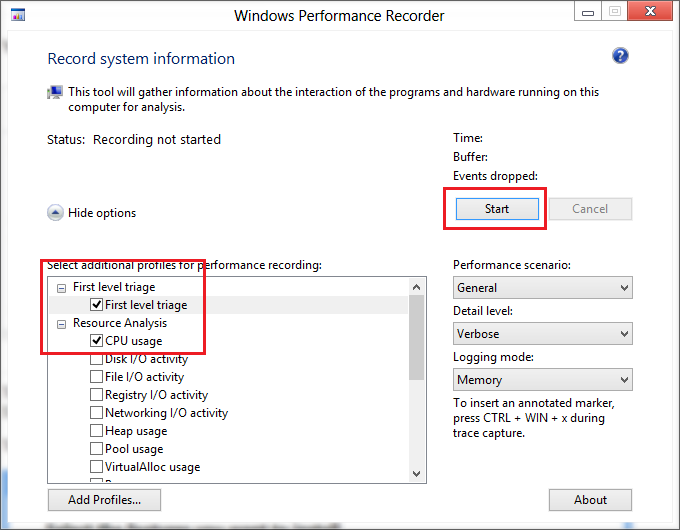

However, while investigating this problem, we did some xperf analysis, which raised several general questions about the Windows CPU scheduler for which we were unable to find a clear, concise explanation.

What we don't understand

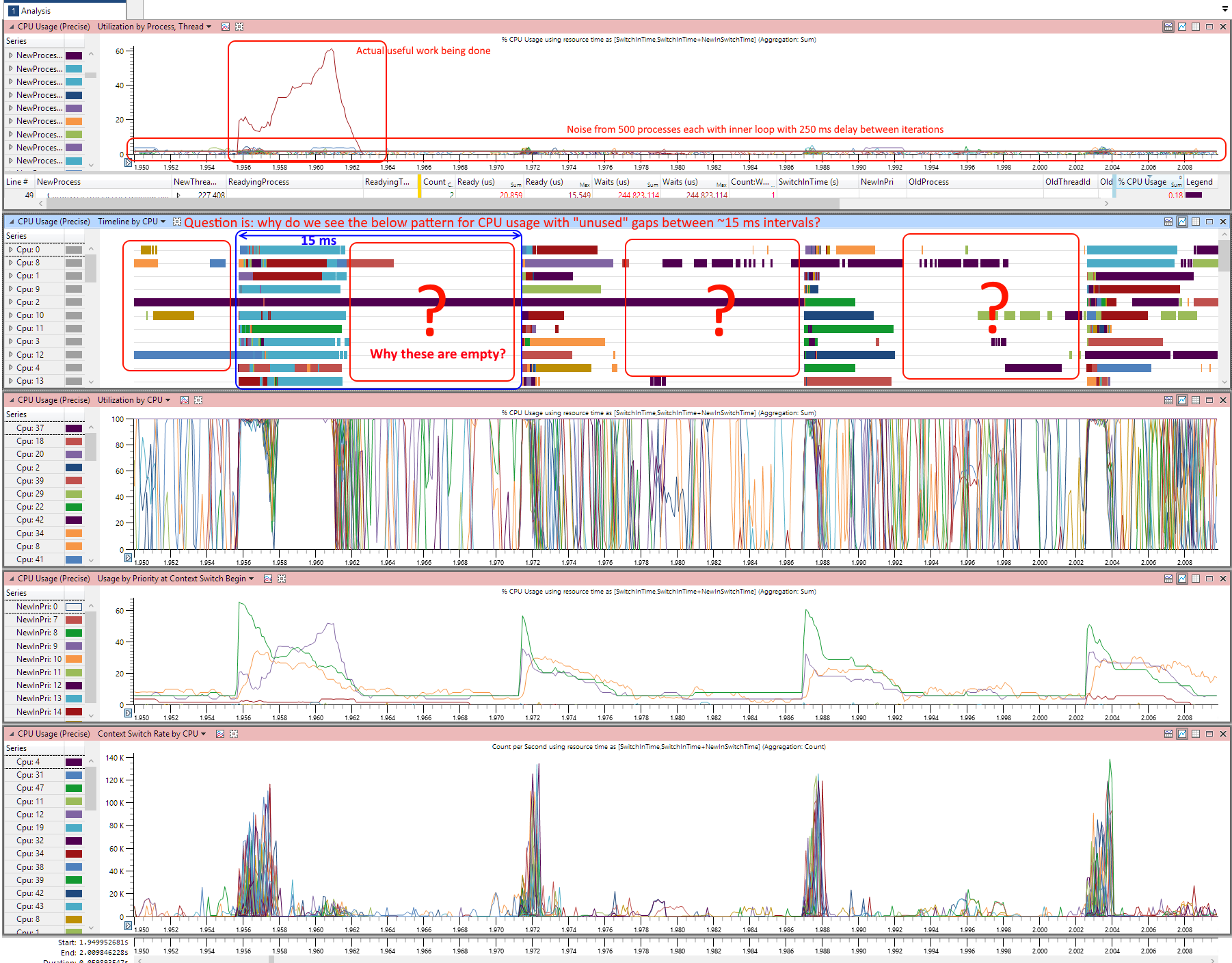

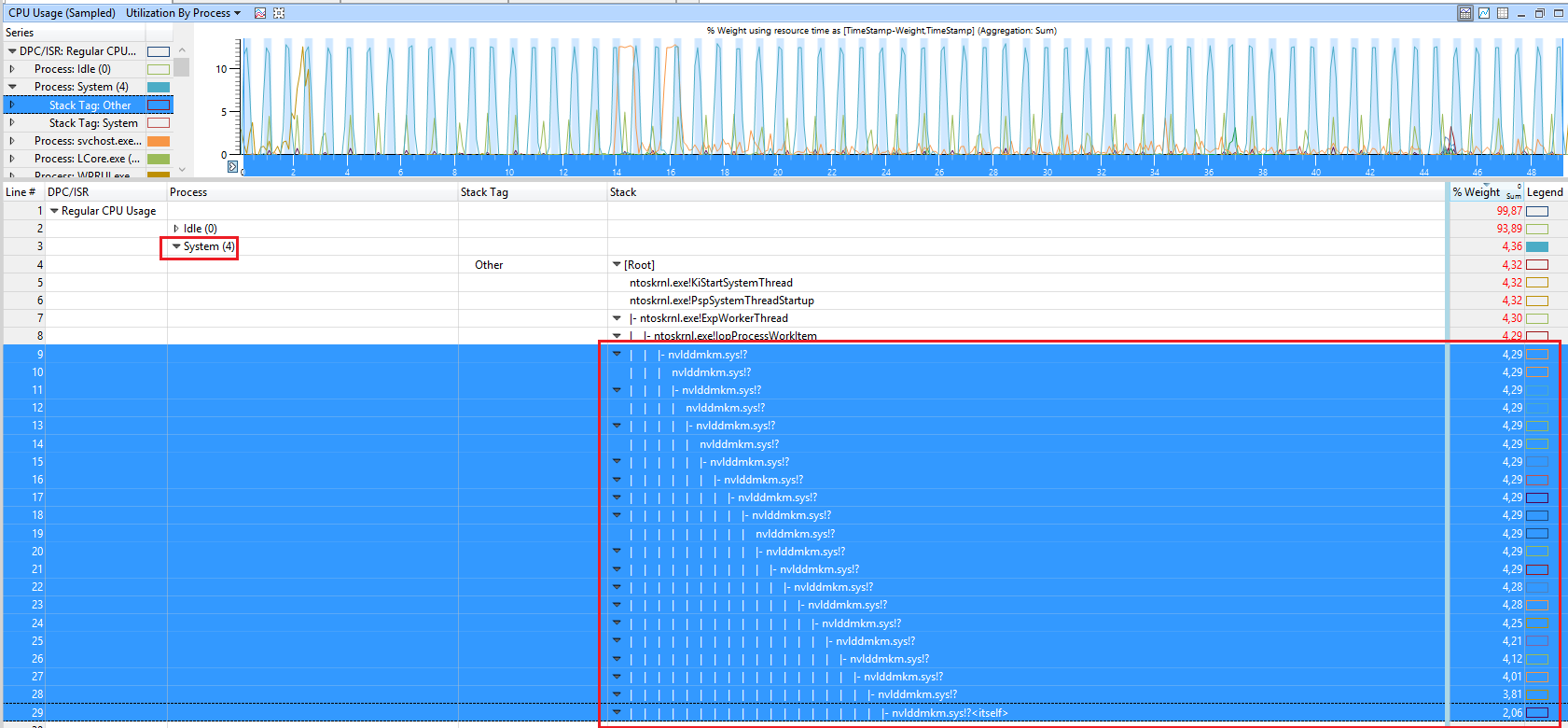

Here is a screenshot from one of our xperf sessions.

You can see from the "CPU Usage (Precise)" graph that:

- There are 15 ms time slices, the majority of which are under-utilized. Utilization within those slices is ~35-40%. I assume this means that the CPU is busy only ~35-40% of the time, yet the system feels really sluggish (observable through casual tinkering around the system).

- On top of this, we have the "mysterious" 30% kernel time cost, judging by the Task Manager CPU utilization graph.

- Some CPUs are obviously utilized for the whole 15 ms slice and beyond.

Questions

As far as Windows CPU Scheduling on multiprocessor systems is concerned:

- What causes the 30% kernel cost? Context switching? Something else? What should be considered when writing applications in order to reduce this cost, or even to achieve perfect utilization with minimal infrastructure cost (on multiprocessor systems where the number of processes is higher than the number of cores)?

- What are these 15 ms slices?

- Why does CPU utilization have gaps in these slices?