UPDATE: I have rewritten this question because, after some investigation, I realised that this is a different problem.

Context: running Keras in a grid search setting using the KerasClassifier wrapper with scikit-learn. System: Ubuntu 16.04. Libraries: Anaconda distribution 5.1, Keras 2.0.9, scikit-learn 0.19.1, TensorFlow 1.3.0 or Theano 0.9.0, using CPUs only.

Code: for testing, I simply used the code from https://machinelearningmastery.com/use-keras-deep-learning-models-scikit-learn-python/, specifically the second example, 'Grid Search Deep Learning Model Parameters'. Pay attention to line 35, which reads:

grid = GridSearchCV(estimator=model, param_grid=param_grid)

Symptoms: When grid search uses more than one job (does that mean CPUs?), e.g. setting 'n_jobs' on the above line to '2', as in the line below:

grid = GridSearchCV(estimator=model, param_grid=param_grid, n_jobs=2)

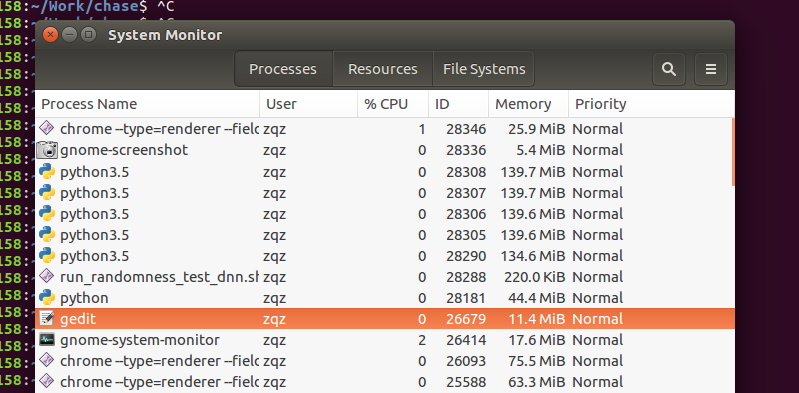

will cause the code to hang indefinitely, with either TensorFlow or Theano, and there is no CPU usage (see the attached screenshot, where 5 Python processes were created but none is using any CPU).
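To see where the main process is waiting during such a hang, a periodic stack dump may help. The following is only a minimal sketch, assuming Python 3 (the standard `faulthandler` module); the helper name `run_with_watchdog`, the log file name, and the timeout are hypothetical choices for illustration, not part of the tutorial code.

```python
import faulthandler


def run_with_watchdog(fn, log_path="hang_traceback.log", timeout_s=60.0):
    """Run fn() and, while it is still running, write the stack of every
    thread in this process to log_path every timeout_s seconds."""
    with open(log_path, "w") as log:
        # faulthandler writes directly to the file descriptor, so the dump
        # is produced even if the main thread is blocked in a C call.
        faulthandler.dump_traceback_later(timeout_s, repeat=True, file=log)
        try:
            return fn()
        finally:
            # Always disarm the watchdog before the log file is closed.
            faulthandler.cancel_dump_traceback_later()


if __name__ == "__main__":
    # Stand-in workload; in the real script this would wrap the call
    # that hangs, e.g. lambda: grid.fit(X, Y).
    print(run_with_watchdog(lambda: sum(range(10)), timeout_s=5.0))
```

Note that this only covers the parent process; the worker processes started for `n_jobs=2` are separate Python processes and would not appear in this dump.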

By debugging, it appears to be the following line within 'sklearn.model_selection._search' that causes the problem:

line 648: for parameters, (train, test) in product(candidate_params, cv.split(X, y, groups)))

The program hangs on this line and cannot continue.
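For reference, as far as I can tell from the scikit-learn source, this line is the tail of a generator expression that pairs every parameter combination with every cross-validation fold; each pair becomes one fit-and-score task handed to the parallel workers. The sketch below reproduces only that enumeration using the standard library. The parameter values and the `kfold_split` helper are hypothetical stand-ins, not the tutorial's grid or scikit-learn's splitter.

```python
from itertools import product

# Hypothetical stand-in for the expanded param_grid.
candidate_params = [
    {"optimizer": "rmsprop", "epochs": 50},
    {"optimizer": "adam", "epochs": 50},
]


def kfold_split(n_samples, n_splits=3):
    """Yield (train, test) index lists, similar in spirit to
    cv.split(X, y, groups) with contiguous, unshuffled folds."""
    indices = list(range(n_samples))
    fold_size = n_samples // n_splits
    for k in range(n_splits):
        start = k * fold_size
        # The last fold absorbs any leftover samples.
        stop = n_samples if k == n_splits - 1 else start + fold_size
        test = indices[start:stop]
        train = indices[:start] + indices[stop:]
        yield train, test


# Same shape as the loop on line 648: one task per (parameters, fold) pair.
tasks = [
    (parameters, train, test)
    for parameters, (train, test) in product(candidate_params, kfold_split(9))
]

for parameters, train, test in tasks:
    print(parameters, "test fold:", test)
```

With 2 parameter combinations and 3 folds this yields 6 tasks. If the enumeration itself is this cheap, a debugger stopping on that line presumably means the main process is waiting there for the workers to return results, not that the loop itself is stuck.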

I would really appreciate some insights as to what this means and why this could happen.

Thanks in advance