The values mentioned in the original question look like the "color map" values, which can be obtained with the getpalette() method of a PIL Image object.
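To make the difference between color map values and annotation values concrete, here is a minimal sketch that builds a tiny palettized image in memory instead of reading a VOC file. The 2x2 image and its three-entry palette are made up for illustration; only Image.new(), putpalette(), putpixel(), getpalette() and convert() from Pillow are used.

```python
import numpy as np
from PIL import Image

# Build a tiny palettized ("P" mode) image in memory so no files are needed.
# The three colors are illustrative palette entries, not read from a real file.
palette = [0, 0, 0,      # index 0 -> black
           128, 0, 0,    # index 1 -> dark red
           0, 128, 0]    # index 2 -> dark green
img = Image.new('P', (2, 2))   # all pixels start at index 0
img.putpalette(palette)
img.putpixel((0, 0), 1)        # (x, y) = (0, 0) gets index 1
img.putpixel((1, 1), 2)        # (x, y) = (1, 1) gets index 2

# getpalette() returns a flat list [r0, g0, b0, r1, g1, b1, ...];
# its length depends on the Pillow version, so reshape and slice.
colors = np.array(img.getpalette()).reshape(-1, 3)
first_colors = colors[:3]

# np.array() on a "P" image yields the palette indices (the annotation values).
indices = np.array(img)

# convert('RGB') applies the palette and yields the actual colors.
rgb = np.array(img.convert('RGB'))

print(first_colors)
print(indices)
print(rgb[0, 0], rgb[1, 1])
```

The indices array is what the annotation check below looks at, while first_colors corresponds to the kind of values quoted in the question.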

For the annotated values of the VOC images, I used the following code snippet to check them:

    import numpy as np
    from PIL import Image

    files = [
        'SegmentationObject/2007_000129.png',
        'SegmentationClass/2007_000129.png',
        'SegmentationClassRaw/2007_000129.png',  # processed by _remove_colormap()
                                                 # in captainst's answer...
    ]

    for f in files:
        img = Image.open(f)
        annotation = np.array(img)
        print('\nfile: {}\nanno: {}\nimg info: {}'.format(
            f, set(annotation.flatten()), img))

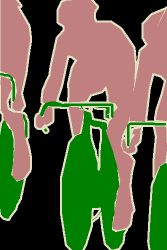

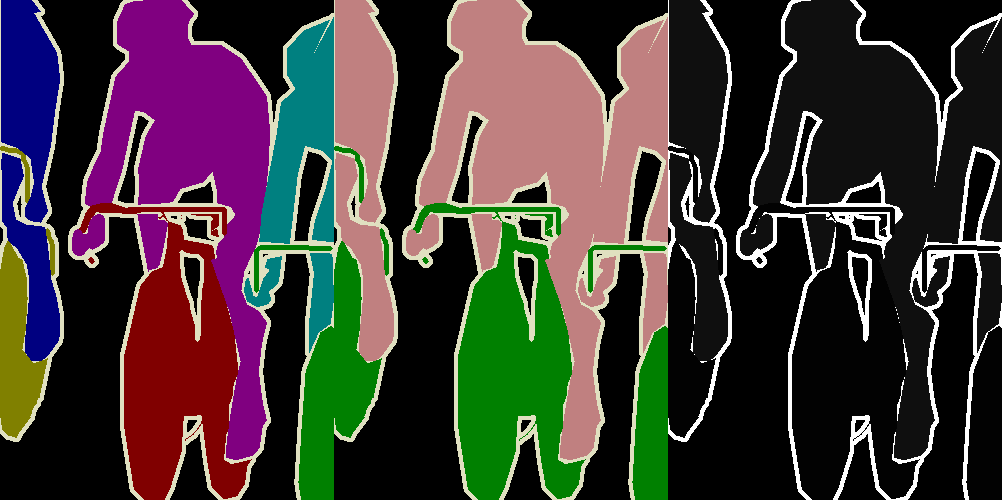

The three images used in the code are shown below (left to right, respectively):

![enter image description here]()

The corresponding outputs of the code are as follows:

    file: SegmentationObject/2007_000129.png
    anno: {0, 1, 2, 3, 4, 5, 6, 255}
    img info: <PIL.PngImagePlugin.PngImageFile image mode=P size=334x500 at 0x7F59538B35F8>

    file: SegmentationClass/2007_000129.png
    anno: {0, 2, 15, 255}
    img info: <PIL.PngImagePlugin.PngImageFile image mode=P size=334x500 at 0x7F5930DD5780>

    file: SegmentationClassRaw/2007_000129.png
    anno: {0, 2, 15, 255}
    img info: <PIL.PngImagePlugin.PngImageFile image mode=L size=334x500 at 0x7F5930DD52E8>

There are two things I learned from the above output.

First, the annotation values of the images in the SegmentationObject folder are assigned per object instance. In this case there are 3 people and 3 bicycles, so the annotated values run from 1 to 6. For images in the SegmentationClass folder, however, the values are the class indices of the objects: all the people belong to class 15 and all the bicycles to class 2. In both folders, 0 is the background and 255 is the void label used for object boundaries.
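The relationship between the two kinds of annotation can be sketched as a lookup from instance id to class id. The arrays below are made-up 1-D stand-ins for a pair of masks (not the real 2007_000129 data), and instance_to_class is a hypothetical helper written for this illustration, not part of any VOC tooling.

```python
import numpy as np

# Synthetic stand-ins for one image's annotations (made-up data):
# object_mask holds per-instance ids, class_mask holds per-class ids.
object_mask = np.array([0, 1, 1, 2, 2, 3, 255, 0])
class_mask = np.array([0, 15, 15, 15, 15, 2, 255, 0])


def instance_to_class(object_mask, class_mask, ignore=(0, 255)):
    """Map each instance id to the class id found at the same pixels."""
    mapping = {}
    for inst in np.unique(object_mask):
        if inst in ignore:
            continue  # skip background (0) and void (255)
        # Class values under this instance; take the most frequent one
        # in case a few pixels disagree.
        classes = class_mask[object_mask == inst]
        mapping[int(inst)] = int(np.bincount(classes).argmax())
    return mapping


mapping = instance_to_class(object_mask, class_mask)
print(mapping)
```

With real files, the two arrays would come from np.array(Image.open(...)) on the matching SegmentationObject and SegmentationClass images.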

Second, as mkisantal has already mentioned, the color palette is removed after the np.array() operation (I "know" this from observing the results, but I still don't understand the mechanism under the hood...). We can confirm this by checking the image mode of the outputs: