It's easy to both create and delete blob data. There are ways to protect against accidental data loss, for example:

- Resource locks to protect against accidental storage account deletion.

- Azure RBAC to limit access to the account and its keys.

- Soft delete to recover from accidental blob deletion.

This is already a good set of safeguards, but there seems to be a weak link: as far as I know, blob containers lack the kind of protection that exists for accounts and blobs.

Since containers are a convenient unit for enumerating and batch-deleting blobs, that's a real gap.

How can I protect against accidental or malicious container deletion and mitigate the risk of data loss?

What I've considered:

Idea 1: Keep a synchronized copy of all data in another storage account. However, this adds synchronization complexity (incremental copy?) and a notable cost increase.

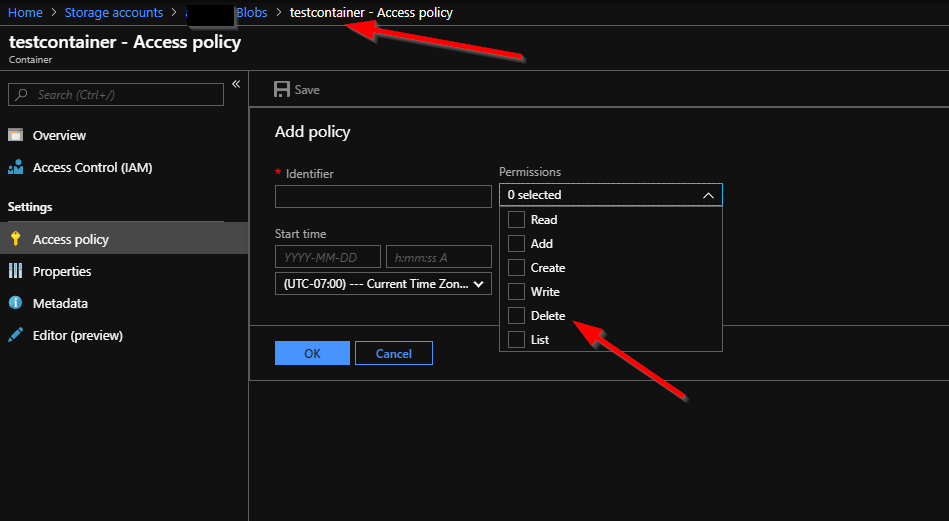
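To make the "incremental copy" part of Idea 1 concrete, here is a minimal sketch of just the diffing step, with no Azure calls. It assumes the source container listing has already been fetched as a map of blob name to ETag, and that the sync job stores the source ETags it saw on its previous run (comparing against destination ETags would not work, because a copied blob gets its own ETag in the destination account). `plan_incremental_sync` is a hypothetical helper, not part of any SDK.

```python
from typing import Dict, List, Tuple


def plan_incremental_sync(
    source_etags: Dict[str, str],
    last_synced_etags: Dict[str, str],
) -> Tuple[List[str], List[str]]:
    """Hypothetical helper: return (blobs_to_copy, blobs_missing_from_source).

    source_etags: current listing of the source container, blob name -> ETag.
    last_synced_etags: source ETags recorded at the previous sync run.
    """
    # New blobs, or blobs whose source ETag changed since the last run, need copying.
    to_copy = sorted(
        name
        for name, etag in source_etags.items()
        if last_synced_etags.get(name) != etag
    )
    # Blobs that disappeared from the source since the last run. A backup should
    # NOT mirror these deletions automatically, or an accidental delete is replicated too.
    missing = sorted(name for name in last_synced_etags if name not in source_etags)
    return to_copy, missing


if __name__ == "__main__":
    previous = {"a.csv": "0x1", "b.csv": "0x2", "c.csv": "0x3"}
    current = {"a.csv": "0x1", "b.csv": "0x9", "d.csv": "0x4"}
    print(plan_incremental_sync(current, previous))
    # (['b.csv', 'd.csv'], ['c.csv'])
```

Reporting missing blobs separately instead of deleting them is a deliberate choice: the copy only protects against deletion if deletions are not synced.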

Idea 2: Lock away the account keys and make everyone work with carefully scoped SAS tokens. But that's a lot of hassle with dozens of SAS tokens and their renewals, and sometimes container deletion actually is required and authorized. It feels complex enough to break, so I'd prefer a safety net anyway.
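Part of the Idea 2 hassle is keeping track of which tokens can delete and which are about to expire. As a rough sketch, a stdlib-only check can read the `sp` (signed permissions) and `se` (signed expiry) query parameters of a SAS token and flag the `d` (delete) permission and upcoming expiry. `audit_sas` and its `renew_within` parameter are hypothetical; the sketch does not validate the signature, and a token tied to a stored access policy may carry no `se` at all, in which case it is not flagged for renewal.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional
from urllib.parse import parse_qs


def audit_sas(query: str, now: datetime, renew_within: timedelta = timedelta(days=7)) -> dict:
    """Hypothetical check of a SAS query string (the part after '?')."""
    params = parse_qs(query.lstrip("?"))  # also URL-decodes values like %3A
    permissions = params.get("sp", [""])[0]
    expiry_raw = params.get("se", [""])[0]

    expiry: Optional[datetime] = None
    if expiry_raw:
        # 'se' may be a full UTC timestamp ending in 'Z' or a plain date.
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)

    return {
        # 'd' is the delete permission letter in the SAS permission string.
        "can_delete": "d" in permissions,
        # Only tokens with an explicit expiry can be flagged for renewal.
        "needs_renewal": expiry is not None and expiry - now <= renew_within,
    }


if __name__ == "__main__":
    now = datetime(2024, 5, 1, tzinfo=timezone.utc)
    print(audit_sas("sp=rwl&se=2024-05-03T00%3A00%3A00Z&sig=REDACTED", now))
    # {'can_delete': False, 'needs_renewal': True}
```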

Idea 3: Undo the deletion somehow? According to the Delete Container documentation, the container data is not removed immediately:

> The Delete Container operation marks the specified container for deletion. The container and any blobs contained within it are later deleted during garbage collection.

However, the documentation doesn't say when or how garbage collection runs, or whether, how, and for how long the container data could be recovered.

Any better options I've missed?