How to scrape tables preceded by a specific title from a PDF?

I am experimenting with the tabulizer package. Here is an example of extracting a table from a specific page (Polish "Map of Public Health Needs"):

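```r
# java.parameters must be set before the JVM starts, i.e. before
# tabulizer (and therefore rJava) is loaded
options(java.parameters = "-Xmx8000m")

library(tabulizer)
library(tidyverse)

location <- "http://www.mpz.mz.gov.pl/wp-content/uploads/sites/4/2019/01/mpz_choroby_ukladu_kostno_miesniowego_woj_dolnoslaskie.pdf"

# Extract all tables on page 8 and keep the fourth one as a tibble
(out <- extract_tables(location, pages = 8, encoding = "UTF-8",
                       method = "stream", outdir = getwd())[[4]] %>%
   as_tibble())
```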
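By default `extract_tables()` returns a list of character matrices without column names, so the tibble above gets generic names. A minimal base-R sketch for turning one matrix into a data frame, assuming the first `header_rows` rows of the matrix hold the column headers (not verified against these PDFs); `matrix_to_df()` is a hypothetical helper, not part of tabulizer:

```r
# Hypothetical helper (not part of tabulizer): convert one character matrix
# returned by tabulizer::extract_tables() into a data frame, assuming the
# first `header_rows` rows contain the column headers.
matrix_to_df <- function(m, header_rows = 1) {
  head_idx <- seq_len(header_rows)
  # Join multi-row headers column by column, skipping empty cells
  header <- apply(m[head_idx, , drop = FALSE], 2,
                  function(h) paste(h[nzchar(h)], collapse = " "))
  # Fall back to V1, V2, ... for columns without any header text
  header <- ifelse(nzchar(header), header, paste0("V", seq_along(header)))
  # Everything below the header rows is the table body
  body <- m[setdiff(seq_len(nrow(m)), head_idx), , drop = FALSE]
  df <- as.data.frame(body, stringsAsFactors = FALSE)
  names(df) <- make.unique(header)
  df
}
```

The number of header rows has to be chosen by inspecting the matrix first. All values stay character, so numeric columns still need converting (the Polish PDFs may use decimal commas).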

This gets me one table from a specific page. However, I will have many such PDFs to scrape from http://www.mpz.mz.gov.pl/mapy-dla-30-grup-chorob-2018/. That page links to a subpage for each illness, and each subpage has many links, one for each province of Poland (I collect the links with rvest). In every PDF I need to scrape the table that follows a specific title, e.g.:
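The crawling step can be kept separate from the PDF parsing. A minimal base-R sketch: `pdf_links()` and `province_from_url()` are hypothetical helpers, and both the assumption that PDF links end in `.pdf` and the assumption that file names follow the `..._woj_<province>.pdf` pattern are based only on the single example URL above:

```r
# Hypothetical helper: keep unique links that point to PDF files.
# `hrefs` is a character vector of link targets (may contain NA).
pdf_links <- function(hrefs) {
  hrefs <- hrefs[!is.na(hrefs)]
  unique(grep("\\.pdf$", hrefs, value = TRUE, ignore.case = TRUE))
}

# Hypothetical helper: extract the province from a file name of the form
# "..._woj_<province>.pdf" (an assumption based on the example URL).
# Returns NA when a URL does not follow that pattern.
province_from_url <- function(url) {
  out <- sub("^.*_woj_([^/]+)\\.pdf$", "\\1", url, ignore.case = TRUE)
  # sub() returns the input unchanged when the pattern does not match
  out[out == url] <- NA_character_
  out
}
```

The `hrefs` could come from rvest, e.g. `page <- rvest::read_html("http://www.mpz.mz.gov.pl/mapy-dla-30-grup-chorob-2018/")` followed by `hrefs <- rvest::html_attr(rvest::html_elements(page, "a"), "href")`; if the site uses relative links, `xml2::url_absolute(hrefs, base)` can resolve them first. Keeping the province next to each URL makes it easy to label the scraped tables later.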

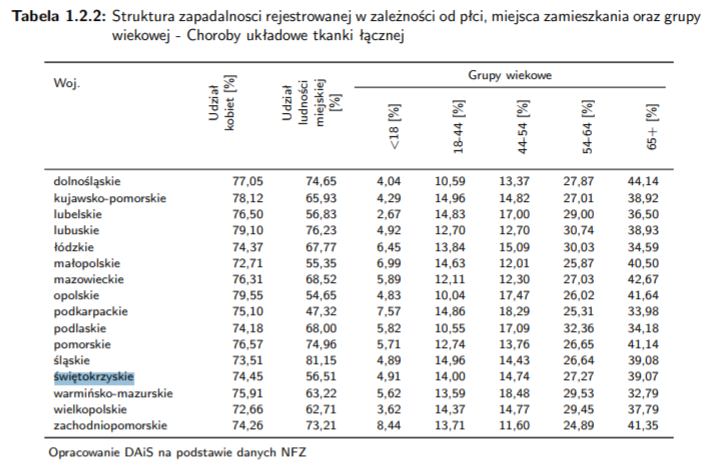

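Tabela 1.2.2: Struktura zapadalnosci rejestrowanej w zależności od płci, miejsca zamieszkania oraz grupy wiekowej - Choroby układowe tkanki łącznej

(Table 1.2.2: Structure of registered incidence by sex, place of residence and age group - Systemic connective tissue diseases)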

I need to detect "Tabela (...) Struktura zapadalnosci (...)" because the table is not always on the same page. Many thanks in advance for any directions and ideas.
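Instead of a fixed page number, the pages can be located by searching the PDF's text layer for the title. A sketch, assuming the text is read with `pdftools::pdf_text()` (one string per page); `find_title_pages()` and its default regular expression are hypothetical. The pattern uses `\s+` because the title may be broken across lines, and `zapadalno\S*ci` so that both `zapadalnosci` and `zapadalności` match:

```r
# Hypothetical helper: return the indices of pages whose text contains the
# table title. `page_texts` is a character vector with one element per page,
# e.g. the output of pdftools::pdf_text().
find_title_pages <- function(
    page_texts,
    pattern = "Tabela\\s+[0-9.]+:?\\s*Struktura\\s+zapadalno\\S*ci\\s+rejestrowanej") {
  # \\s+ tolerates line breaks and repeated spaces between words;
  # \\S* accepts both "s" and "ś" in "zapadalnosci"/"zapadalności"
  which(grepl(pattern, page_texts, ignore.case = TRUE))
}
```

For example, download each PDF once with `download.file(location, pdf_path, mode = "wb")` and pass the local file to both tools: `extract_tables(pdf_path, pages = find_title_pages(pdftools::pdf_text(pdf_path)), method = "stream")`. It is worth checking for an empty result before calling `extract_tables()`. A list of tables at the start of a PDF, if present, would also match the title, and `extract_tables()` returns every table found on the matched pages, so the right one may still need to be picked out.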

EDIT: Since asking the question, I have managed to find the pages where the table might be, though probably very inefficiently:

```r
library(pdfsearch)

pages <- keyword_search(
  location,
  keyword = c(
    "Tabela",
    "Struktura zapadalnosci rejestrowanej"
  ),
  path = TRUE,
  surround_lines = FALSE
) %>%
  group_by(page_num) %>%
  mutate(keyword = paste0(keyword, collapse = ";")) %>%
  filter(
    str_detect(keyword, "Tabela") &
      str_detect(keyword, "Struktura zapadalnosci rejestrowanej")
  ) %>%
  pull(page_num) %>%
  unique()
```
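The grouping and string pasting can be avoided by checking, per page, whether every keyword occurs among that page's hits. A base-R sketch, assuming the `keyword_search()` result has `keyword` and `page_num` columns (as the code above relies on); `pages_with_all_keywords()` is a hypothetical name:

```r
# Hypothetical helper: keep the pages on which every keyword was found.
# `hits` is a data frame with (at least) `keyword` and `page_num` columns.
pages_with_all_keywords <- function(hits, keywords) {
  # One TRUE/FALSE per page: are all keywords among that page's hits?
  found <- tapply(hits$keyword, hits$page_num,
                  function(k) all(keywords %in% k))
  # tapply() names the result by page number, in ascending order
  as.integer(names(found)[found])
}
```

In dplyr the same check is `group_by(page_num) %>% filter(all(keywords %in% keyword))`, where `keywords` holds the two search strings; `all()` returns a single value per group, so whole pages are kept or dropped.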