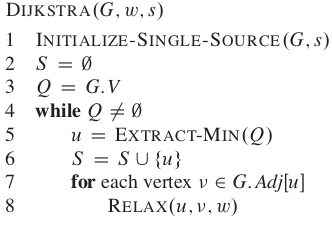

As per my understanding, I have calculated time complexity of Dijkstra Algorithm as big-O notation using adjacency list given below. It didn't come out as it was supposed to and that led me to understand it step by step.

- Each vertex can be connected to (V-1) vertices, hence the number of adjacent edges to each vertex is V - 1. Let us say E represents V-1 edges connected to each vertex.

- Finding & Updating each adjacent vertex's weight in min heap is O(log(V)) + O(1) or

O(log(V)). - Hence from step1 and step2 above, the time complexity for updating all adjacent vertices of a vertex is E*(logV). or

E*logV. - Hence time complexity for all V vertices is V * (E*logV) i.e

O(VElogV).

But the time complexity for Dijkstra Algorithm is O(ElogV). Why?