I am trying to match SIFT features between two images. I detect the features with OpenCV:

    import cv2
    import numpy as np

    sift = cv2.xfeatures2d.SIFT_create()
    kp, desc = sift.detectAndCompute(img, None)

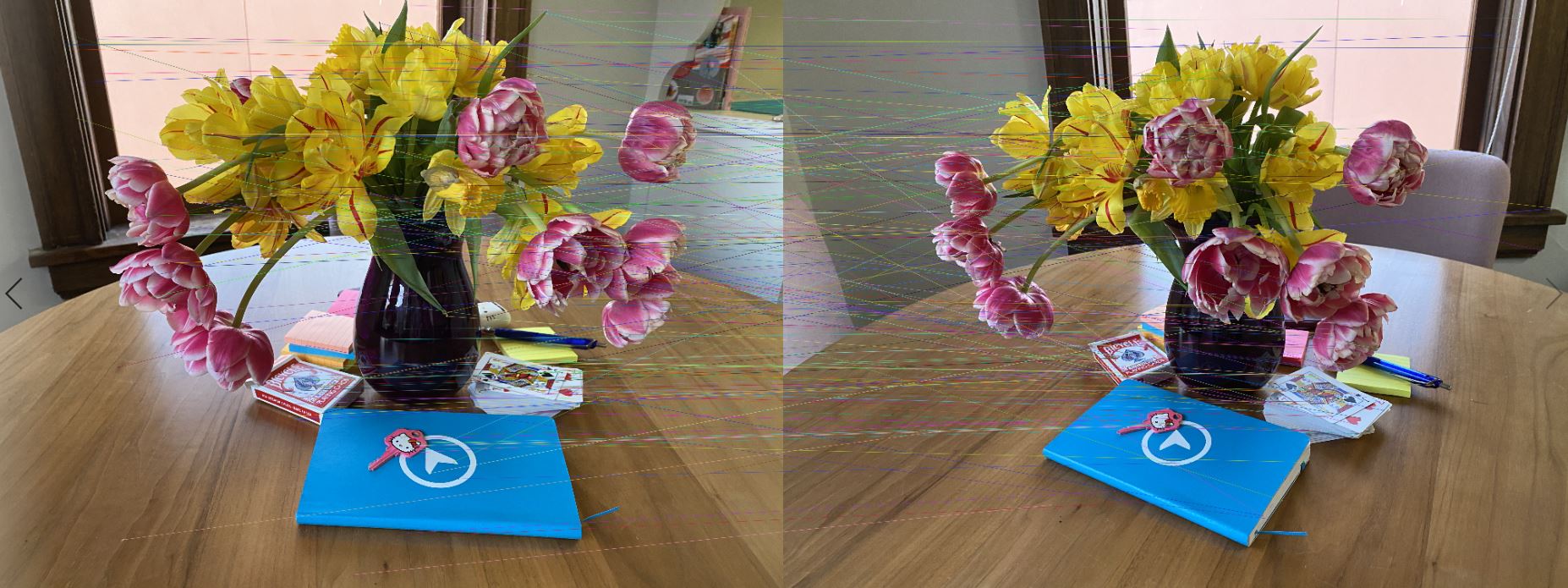

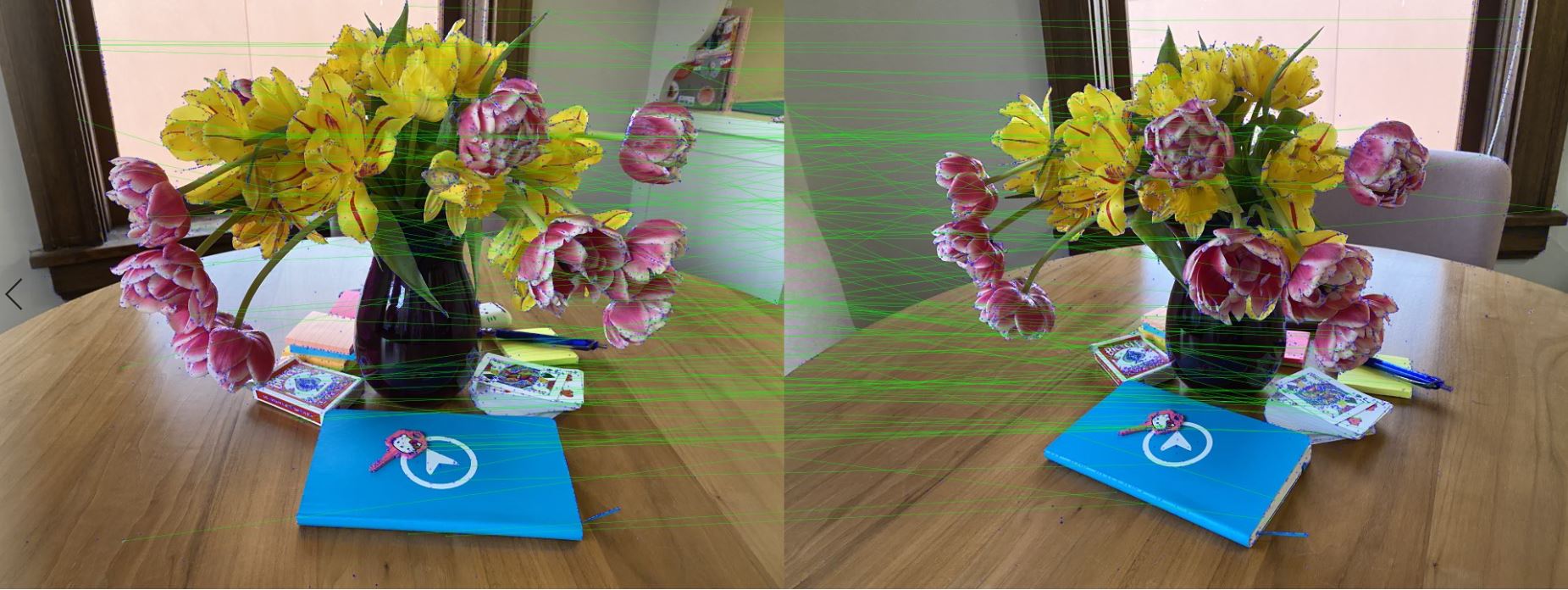

Both images seem to contain lots of features, around 15,000 each, shown as green dots.

After matching, however, I retain only 87 matches, and some of those are outliers.

I'm trying to figure out if I'm doing something wrong. My code for matching the two images is:

    def match(this_filename, this_desc, this_kp, othr_filename, othr_desc, othr_kp):
        E_RANSAC_PROB = 0.999
        F_RANSAC_PROB = 0.999
        E_PROJ_ERROR = 15.0
        F_PROJ_ERROR = 15.0
        LOWE_RATIO = 0.9

        # FLANN Matcher
        # FLANN_INDEX_KDTREE = 1 # 1? https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_feature2d/py_matcher/py_matcher.html#basics-of-brute-force-matcher
        # index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)
        # search_params = dict(checks=50) # or pass empty dictionary
        # flann = cv2.FlannBasedMatcher(index_params, search_params)
        # matcherij = flann.knnMatch(this_desc, othr_desc, k=2)
        # matcherji = flann.knnMatch(othr_desc, this_desc, k=2)

        # BF Matcher
        this_matches = {}
        othr_matches = {}

        bf = cv2.BFMatcher()
        matcherij = bf.knnMatch(this_desc, othr_desc, k=2)
        matcherji = bf.knnMatch(othr_desc, this_desc, k=2)

        matchesij = []
        matchesji = []

        for i,(m,n) in enumerate(matcherij):
            if m.distance < LOWE_RATIO*n.distance:
                matchesij.append((m.queryIdx, m.trainIdx))

        for i,(m,n) in enumerate(matcherji):
            if m.distance < LOWE_RATIO*n.distance:
                matchesji.append((m.trainIdx, m.queryIdx))

        # Make sure matches are symmetric
        symmetric = set(matchesij).intersection(set(matchesji))
        symmetric = list(symmetric)

        this_matches[othr_filename] = [ (a, b) for (a, b) in symmetric ]
        othr_matches[this_filename] = [ (b, a) for (a, b) in symmetric ]

        src = np.array([ this_kp[index[0]].pt for index in this_matches[othr_filename] ])
        dst = np.array([ othr_kp[index[1]].pt for index in this_matches[othr_filename] ])

        if len(this_matches[othr_filename]) == 0:
            print("no symmetric matches")
            return 0

        # retain inliers that fit x.F.xT == 0
        F, inliers = cv2.findFundamentalMat(src, dst, cv2.FM_RANSAC, F_PROJ_ERROR, F_RANSAC_PROB)

        if F is None or inliers is None:
            print("no F matrix estimated")
            return 0

        inliers = inliers.ravel()
        this_matches[othr_filename] = [ this_matches[othr_filename][x] for x in range(len(inliers)) if inliers[x] ]
        othr_matches[this_filename] = [ othr_matches[this_filename][x] for x in range(len(inliers)) if inliers[x] ]

        return this_matches, othr_matches, inliers.sum()
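
To narrow down which stage discards most of the matches, the survivors of the ratio test in each direction and of the symmetry check could be counted separately. The following is only a minimal sketch: `stage_counts` is a hypothetical helper, not part of OpenCV, and it assumes each `(m, n)` pair from `knnMatch` has first been converted to a plain tuple `(m.queryIdx, m.trainIdx, m.distance, n.distance)`.

```python
def stage_counts(knn_ij, knn_ji, ratio):
    """Count matches surviving each filtering stage.

    knn_ij / knn_ji are lists of (query_idx, train_idx, best_dist, second_dist)
    tuples (an assumed plain-tuple form of the k=2 knnMatch results).
    """
    # Ratio test, this -> othr: pairs stored as (this_idx, othr_idx).
    fwd = {(q, t) for q, t, d1, d2 in knn_ij if d1 < ratio * d2}
    # Ratio test, othr -> this: flipped so pairs are also (this_idx, othr_idx).
    bwd = {(t, q) for q, t, d1, d2 in knn_ji if d1 < ratio * d2}
    # Symmetry check: keep only pairs found in both directions.
    return {"ij": len(fwd), "ji": len(bwd), "symmetric": len(fwd & bwd)}


# Toy data, made up purely for illustration.
knn_ij = [(0, 0, 1.0, 5.0), (1, 2, 2.0, 2.1), (2, 1, 1.0, 4.0)]
knn_ji = [(0, 0, 1.0, 5.0), (1, 0, 1.0, 4.0), (2, 1, 3.0, 3.1)]
print(stage_counts(knn_ij, knn_ji, 0.9))
# → {'ij': 2, 'ji': 2, 'symmetric': 1}
```

Comparing these three counts with the final `inliers.sum()` would show whether the ratio test, the symmetry check, or the RANSAC step is responsible for the drop from roughly 15,000 features to 87 matches.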

Here are the two original images: https://www.dropbox.com/s/pvi247be2ds0noc/images.zip?dl=0