I am fighting internal caching (about 90 MB for a 15 MP image) in the CGContextDrawImage/CGDataProviderCopyData functions.

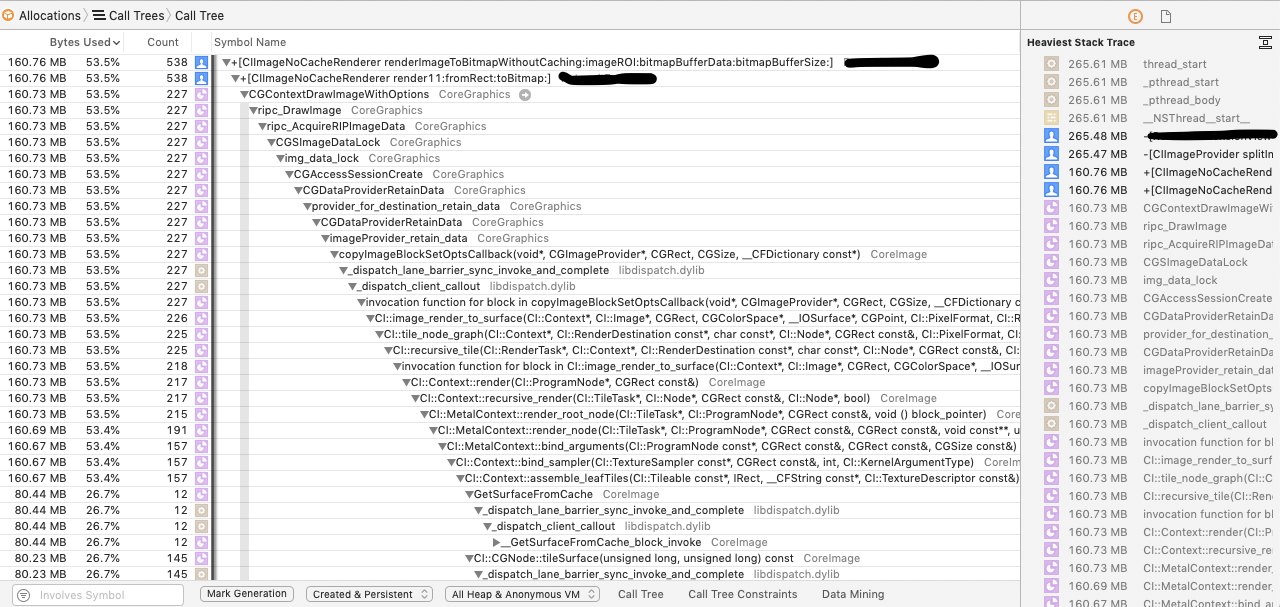

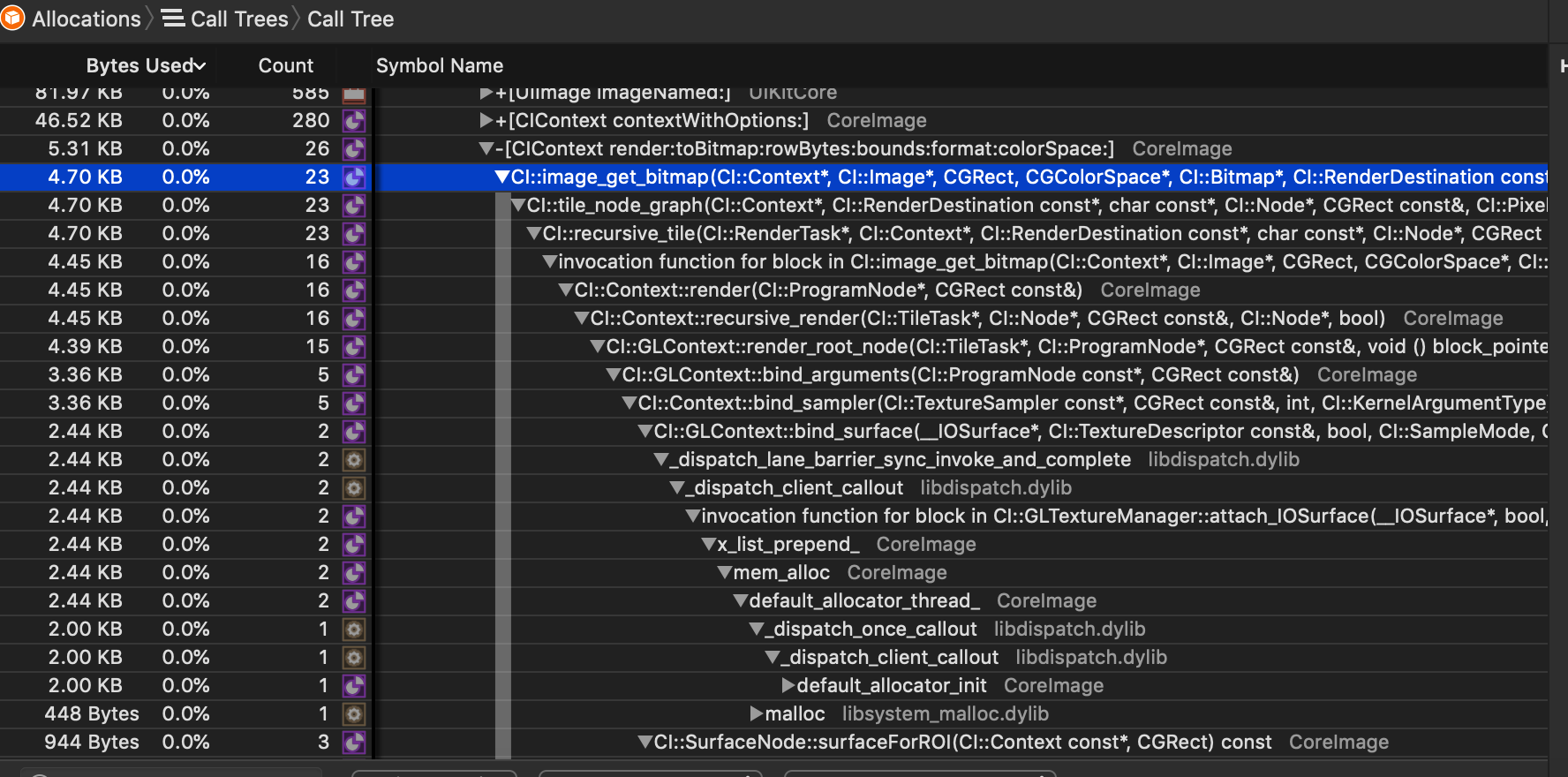

Here is the stack-trace in profiler:

In all cases, an IOSurface is created as a "cache" and isn't released after the @autoreleasepool is drained.

This leaves the app very little chance of surviving.

Caching doesn't depend on image size: I tried rendering 512x512, 4500x512, and 4500x2500 (full-size) image chunks.

I use @autoreleasepool, and CFGetRetainCount returns 1 for all CG objects before I release them.

The code which manipulates the data:

    + (void)render11:(CIImage *)ciImage fromRect:(CGRect)roi toBitmap:(unsigned char *)bitmap {
        @autoreleasepool {
            int w = (int)CGRectGetWidth(roi), h = (int)CGRectGetHeight(roi);

            CIContext *ciContext = [CIContext contextWithOptions:nil];
            CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

            // Wrap the caller's buffer in a bitmap context: 8 bits per component, w*4 bytes per row.
            CGContextRef cgContext = CGBitmapContextCreate(bitmap, w, h,
                                                           8, w*4, colorSpace,
                                                           kCGImageAlphaPremultipliedLast | kCGBitmapByteOrder32Big);

            // deferred:YES postpones the actual rendering until the CGImage is drawn.
            CGImageRef cgImage = [ciContext createCGImage:ciImage
                                                 fromRect:roi
                                                   format:kCIFormatRGBA8
                                               colorSpace:colorSpace
                                                 deferred:YES];

            // Drawing triggers the render and copies the pixels into bitmap.
            CGContextDrawImage(cgContext, CGRectMake(0, 0, w, h), cgImage);

            assert( CFGetRetainCount(cgImage) == 1 );

            CGColorSpaceRelease(colorSpace);
            CGContextRelease(cgContext);
            CGImageRelease(cgImage);
        }
    }
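For scale, here is a minimal sketch of how large the caller-supplied `bitmap` has to be, assuming tightly packed RGBA8 (4 bytes per pixel, `w*4` bytes per row) as in the CGBitmapContextCreate call above. The helper name `rgba8_buffer_size` is hypothetical and not part of the code above.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper (an assumption for illustration, not an Apple API):
   bytes needed for a tightly packed RGBA8 bitmap, i.e. 4 bytes per pixel
   and bytesPerRow == width * 4.
   Returns 0 if either dimension is zero or the size would overflow size_t. */
size_t rgba8_buffer_size(size_t width, size_t height) {
    if (width == 0 || height == 0) {
        return 0;
    }
    if (width > SIZE_MAX / 4) {
        return 0; /* width * 4 would overflow */
    }
    size_t row_bytes = width * 4;
    if (height > SIZE_MAX / row_bytes) {
        return 0; /* row_bytes * height would overflow */
    }
    return row_bytes * height;
}
```

For the chunk sizes mentioned earlier this gives 1,048,576 bytes for 512x512, 9,216,000 bytes for 4500x512, and 45,000,000 bytes for 4500x2500.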

What I know about IOSurface: it's from the previously private framework IOSurface.

CIContext has a function render: ... toIOSurface:.

I created my own IOSurfaceRef and passed it to this function, but the internal implementation still creates its own surface and doesn't release it.

So, do you know (or assume):

1. Are there other ways to read a CGImage's data buffer besides CGContextDrawImage/CGDataProviderCopyData?

2. Is there a way to disable caching during rendering?

3. Why does the caching happen?

4. Can I use some lower-level (but non-private) API to manually clean up system memory?

Any suggestions are welcome.

… `clearCaches` on the `ciContext` after you created the `cgImage`? Also you could try to init the `ciContext` with `contextWithCGContext:options:` after creating the `cgContext`, passing it as an argument. That should tell Core Image to render directly into that context (instead of an intermediate buffer) and you don't need to call `CGContextDrawImage`. I haven't tried it, though. – Lacker

… `[CIContext contextWithOptions:nil]`), I'll try. – Setup

… `[CIContext contextWithOptions:]` and passed `kCIContextCacheIntermediates:NO`. The function `CreateCachedSurface` is still called inside `[CIContext render: toBitmap:]`. – Setup