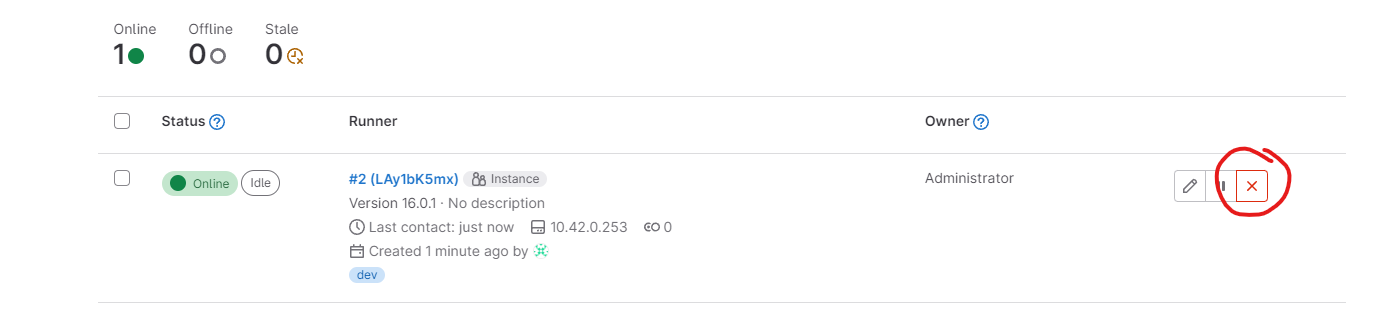

I am trying to scan a Docker image with Trivy and integrate the scan into GitLab CI. The pipeline passes, but the scan job itself fails, and I am not sure why. The Docker image is valid. Update: I added a new error below after enabling the shared runner.

gitlab.yml

    Trivy_container_scanning:
      stage: test
      image: docker:stable-git
      variables:
        # Override the GIT_STRATEGY variable in your `.gitlab-ci.yml` file and set it to `fetch` if you want to provide a `clair-whitelist.yml`
        # file. See https://docs.gitlab.com/ee/user/application_security/container_scanning/index.html#overriding-the-container-scanning-template
        # for details
        GIT_STRATEGY: none
        IMAGE: "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"
      allow_failure: true
      before_script:
        - export TRIVY_VERSION=${TRIVY_VERSION:-v0.20.0}
        - apk add --no-cache curl docker-cli
        - docker login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" $CI_REGISTRY
        - curl -sfL https://raw.githubusercontent.com/aquasecurity/trivy/main/contrib/install.sh | sh -s -- -b /usr/local/bin ${TRIVY_VERSION}
        - curl -sSL -o /tmp/trivy-gitlab.tpl https://github.com/aquasecurity/trivy/raw/${TRIVY_VERSION}/contrib/gitlab.tpl
      script:
        - trivy --exit-code 0 --cache-dir .trivycache/ --no-progress --format template --template "@/tmp/trivy-gitlab.tpl" -o gl-container-scanning-report.json $IMAGE
        # - ./trivy --exit-code 0 --severity HIGH --no-progress --auto-refresh trivy-ci-test
        # - ./trivy --exit-code 1 --severity CRITICAL --no-progress --auto-refresh trivy-ci-test
      cache:
        paths:
          - .trivycache/
      artifacts:
        reports:
          container_scanning: gl-container-scanning-report.json
      dependencies: []
      only:
        refs:
          - branches

Dockerfile

    FROM composer:1.7.2
    RUN git clone https://github.com/aquasecurity/trivy-ci-test.git && cd trivy-ci-test && rm Cargo.lock && rm Pipfile.lock
    CMD apk add --no-cache mysql-client
    ENTRYPOINT ["mysql"]

job error:

    Running with gitlab-runner 13.2.4 (264446b2)
      on gitlab-runner-gitlab-runner-76f48bbd84-8sc2l GCJviaG2
    Preparing the "kubernetes" executor
    30:00
    Using Kubernetes namespace: gitlab-managed-apps
    Using Kubernetes executor with image docker:stable-git ...
    Preparing environment
    30:18
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0pgp84 to be running, status is Pending
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0pgp84 to be running, status is Pending
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0pgp84 to be running, status is Pending
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0pgp84 to be running, status is Pending
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0pgp84 to be running, status is Pending
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0pgp84 to be running, status is Pending
    ERROR: Job failed (system failure): prepare environment: image pull failed: Back-off pulling image "docker:stable-git". Check https://docs.gitlab.com/runner/shells/index.html#shell-profile-loading for more information

another error:

    Running with gitlab-runner 13.2.4 (264446b2)
      on gitlab-runner-gitlab-runner-76f48bbd84-8sc2l GCJviaG2
    Preparing the "kubernetes" executor
    30:00
    Using Kubernetes namespace: gitlab-managed-apps
    Using Kubernetes executor with image $CI_REGISTRY/devops/docker-alpine-sdk:19.03.15 ...
    Preparing environment
    30:03
    Waiting for pod gitlab-managed-apps/runner-gcjviag2-project-1020-concurrent-0t7plc to be running, status is Pending
    ERROR: Job failed (system failure): prepare environment: image pull failed: Failed to apply default image tag "/devops/docker-alpine-sdk:19.03.15": couldn't parse image reference "/devops/docker-alpine-sdk:19.03.15": invalid reference format. Check https://docs.gitlab.com/runner/shells/index.html#shell-profile-loading for more information
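
Comparing the two lines of this second log, the executor was given `$CI_REGISTRY/devops/docker-alpine-sdk:19.03.15`, but the reference it tried to parse starts with `/`. That looks as though `$CI_REGISTRY` expanded to an empty string at the point where the image name was resolved. A minimal shell sketch of that expansion follows. The empty value is my assumption from the log, not something I have confirmed in the runner configuration, and the `case` check is only an illustration, not the parser the runner actually uses.

```shell
# Assumption: CI_REGISTRY is empty (or unset) where the image name is resolved.
CI_REGISTRY=""

# Same pattern as the job's image setting in the second log.
image="${CI_REGISTRY}/devops/docker-alpine-sdk:19.03.15"
echo "$image"

# Illustrative check only: a reference that begins with "/" has no registry
# host in front of the repository path, which matches the reported error.
case "$image" in
  /*) result="invalid: starts with '/'" ;;
  *)  result="ok" ;;
esac
echo "$result"
```

If the assumption holds, the first `echo` reproduces the exact string quoted in the error message.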