When you call a method, the compiler tries to find a method that matches the argument list you pass (if any). Take this method:

```csharp
public static void M(int i) { }
```

You can call it with `M(42)` and all is well. Now suppose there are multiple candidates:

```csharp
public static void M(byte b) { }

public static void M(int i) { }
```

The compiler applies "overload resolution" to determine the "applicable function members". When more than one is applicable, it compares the "betterness" of the overloads to determine the "better function member", which is the one it assumes you intend to call.

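For the call `M(42)` above, both overloads are applicable, because the constant `42` fits in a `byte`. The `int` overload is chosen because an integer literal without a suffix is of type `int` (when its value fits), so the argument is an exact match for `M(int)` but needs a conversion for `M(byte)`. That makes `M(int)` the "better" overload.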
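A minimal sketch of this, assuming C# 9 or later for top-level statements. The class name `Overloads` is hypothetical, and the overloads return a label instead of `void` only so the chosen overload can be printed; the return type plays no part in overload resolution.

```csharp
using System;

// Top-level statements (C# 9+) exercise the overloads declared below.
Console.WriteLine(Overloads.M(42));        // prints M(int): 42 is an int literal, an exact match
Console.WriteLine(Overloads.M((byte)42));  // prints M(byte): a byte argument is an exact match for M(byte)

byte small = 42;
Console.WriteLine(Overloads.M(small));     // prints M(byte): same reasoning for a byte variable

Console.WriteLine(Overloads.M(300));       // prints M(int): 300 does not fit in a byte, so only M(int) is applicable

// Hypothetical stand-ins for the two overloads in the text. They return a label
// instead of void so the overload picked by the compiler is visible at run time.
static class Overloads
{
    public static string M(byte b) => "M(byte)";
    public static string M(int i) => "M(int)";
}
```

The cast in `M((byte)42)` changes the argument's type, not its value, and that alone flips the choice to `M(byte)`.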

(All terms in "quotes" can be looked up in the C# language specification, 12.6.4 Overload resolution.)

Now on to your code.

The literal `100` fits in both a `byte` and an `int` (and several other integral types), but your second argument, an `int?`, converts implicitly to neither `int` nor `byte`. So neither overload is applicable, and the compiler cannot tell which one you intended to call.

It still has to pick one of them to report the error against, and in this case it appears to pick the first one in declaration order.
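The per-argument checks behind this can be seen in isolation. The sketch below assumes C# 9 or later for top-level statements, and its variable names are purely illustrative. It shows that a constant `int` such as `100` converts implicitly to `byte`, a non-constant `int` does not, and an `int?` converts implicitly to neither `int` nor `byte`; only an explicit conversion exists, and it throws when the value is `null`.

```csharp
using System;

const int constant = 100;
int variable = 100;
int? nullable = null;

// A constant int expression converts implicitly to byte when its value fits,
// so the literal 100 is a valid argument for a byte parameter.
byte fromLiteral = 100;
byte fromConstant = constant;

// A non-constant int does NOT convert implicitly to byte; a cast is required.
// byte fromVariable = variable;   // does not compile
byte fromVariable = (byte)variable;

// int? converts implicitly to neither int nor byte. Only an explicit
// conversion exists, and it throws InvalidOperationException for null.
// int fromNullable = nullable;    // does not compile
bool castThrew = false;
try
{
    _ = (int)nullable;
}
catch (InvalidOperationException)
{
    castThrew = true;
}

Console.WriteLine($"{fromLiteral} {fromConstant} {fromVariable} castThrew={castThrew}");
// prints: 100 100 100 castThrew=True
```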

Given this code:

```csharp
public static void Main()
{
    int? i = null;
    M(100, i);
}

public static void M(int i, int i2) {}

public static void M(byte b, byte b2) {}
```

The compiler reports:

`cannot convert from 'int?' to 'int'`

If we swap the method declarations:

```csharp
public static void M(byte b, byte b2) {}

public static void M(int i, int i2) {}
```

The error changes to:

`cannot convert from 'int?' to 'byte'`

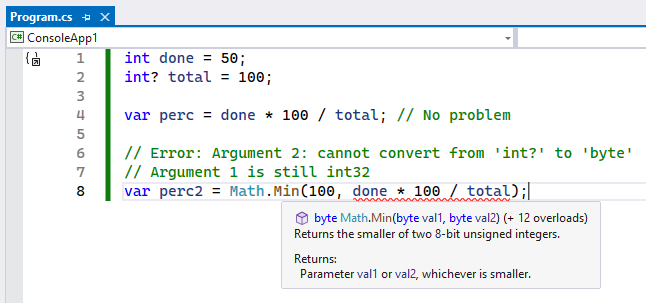
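A sketch of one way to make the call compile, assuming that falling back to `0` when `i` is `null` is acceptable (an assumption about intent, not something the code above states). The class `Overloads` is a hypothetical stand-in for the two `M` overloads, returning a label so the selected overload is visible; C# 9 or later is assumed for top-level statements.

```csharp
using System;

int? i = null;

// M(100, i) does not compile: int? converts implicitly to neither int nor byte.
// Turning the second argument into a plain (non-constant) int makes M(int, int)
// applicable, and it is the only applicable candidate.
Console.WriteLine(Overloads.M(100, i ?? 0));                // prints M(int, int)
Console.WriteLine(Overloads.M(100, i.GetValueOrDefault())); // prints M(int, int)

// Alternatively, only make the call when there is a value.
if (i.HasValue)
{
    Console.WriteLine(Overloads.M(100, i.Value));
}

// Hypothetical stand-ins for the answer's overloads, in the "swapped" order.
static class Overloads
{
    public static string M(byte b, byte b2) => "M(byte, byte)";
    public static string M(int i, int i2) => "M(int, int)";
}
```

Once the second argument is a non-constant `int`, `M(byte, byte)` is no longer applicable at all, so declaration order stops mattering.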

Comments:

…`Min` overload operating on `int, int?`, so you then get the complex rules C# has for overloading methods to get the "best fit". Undoubtedly by parsing the standard closely enough it then becomes clear why the first argument is resolved to a `byte` and not any other integral type, but this is the most difficult part in the standard, so I just trust the compiler is doing the right thing there. – Manaker

…`perc` is of type `int?`, so that's OK. But the value passed to `Math.Min()` must be `int`, NOT `int?`, so that won't work. – Caffey

…`perc` is `int?` as well (which is `Nullable<int>`, a totally different type from `int`). – Modesta

…`int?`, it can just interpret it however it wants. It's strange that if I hover on the first argument, it says it's `int32`, but it picks the first overload (which is `byte, byte`) instead. – Modesta

…`1 + default(int?) == null`, `1 / default(int?) == null`. This is analogous to how things work in database systems, if `null` is interpreted as "unknown" (and mapping to database types was one of the motivations for adding nullables). It's not particularly dangerous in that the result type is also nullable, and that's typically not that easy to overlook (since the compiler will complain if you do, as evidenced by this very question). – Manaker

…`perc` is `int?`, but even in a Nullable Reference Types context, there is no warning or anything on `perc` being `int?`. The final consumption of the value was in Razor, and it would be troublesome if it was `null` (printing an empty string), but Razor gladly accepts a `null` value. I wish there were an opt-in option to make this a compile error instead. – Modesta

`int?` is a nullable value type; NRTs are really something quite different, yet still comparable enough that `?` is also used to indicate nullability. If you don't want nullability the remedy is very simple: declare your type explicitly as `int`. No implicit conversion from `int?` to `int` is permitted. Outlawing any use of `Nullable` is not possible with a compiler switch and probably quite problematic if you want to consume external APIs, but you could write a custom Roslyn analyzer for it (or even a simple linting rule that regexes `\w\?`, which should exclude ternary expressions). – Manaker
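The lifted-operator behavior described in the comments (`1 + default(int?) == null`) can be checked with a short sketch. It assumes C# 9 or later for top-level statements; the variable names are illustrative only.

```csharp
using System;

int? unknown = default;   // same as null

// Lifted operators: if either operand is null, the result is null,
// and the result type is int? rather than int.
int? sum = 1 + unknown;
int? quotient = 1 / unknown;   // no DivideByZeroException: the division is never performed

Console.WriteLine(sum is null);       // prints True
Console.WriteLine(quotient is null);  // prints True

// The int? result still cannot flow into an int without an explicit decision.
// int total = 1 + unknown;           // does not compile
int total = (1 + unknown) ?? 0;
Console.WriteLine(total);             // prints 0
```

Declaring the variable as plain `int` from the start, as suggested in the comments, avoids the question altogether.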