Running local Spark code against a remote cluster with databricks-connect 13.2.0 fails.

I have the following issue:

Error:

- details = "INVALID_STATE: cluster xxxxx is not Shared or Single User Cluster. (requestId=05bc3105-4828-46d4-a381-7580f3b55416)"

- debug_error_string = "UNKNOWN:Error received from peer {grpc_message:"INVALID_STATE: cluster 0711-122239-bb999j6u is not Shared or Single User Cluster. (requestId=05bc3105-4828-46d4-a381-7580f3b55416)", grpc_status:9, created_time:"2023-07-11T15:26:08.9729+02:00"}"

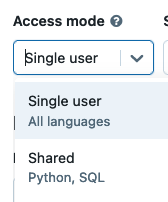
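For anyone trying to reproduce this, here is a minimal sketch that pulls the useful fields out of the `debug_error_string` above. The helper name `summarize_grpc_error` and the regular expressions are my own assumptions based on this one message, not part of databricks-connect. The status table is a subset of the standard gRPC status codes, in which 9 is `FAILED_PRECONDITION`.

```python
import re

# Subset of the standard gRPC status codes (9 is FAILED_PRECONDITION).
GRPC_STATUS_NAMES = {
    0: "OK",
    3: "INVALID_ARGUMENT",
    5: "NOT_FOUND",
    7: "PERMISSION_DENIED",
    9: "FAILED_PRECONDITION",
    14: "UNAVAILABLE",
    16: "UNAUTHENTICATED",
}

# The debug_error_string from the error above, copied verbatim.
SAMPLE = (
    'UNKNOWN:Error received from peer {grpc_message:"INVALID_STATE: cluster '
    '0711-122239-bb999j6u is not Shared or Single User Cluster. '
    '(requestId=05bc3105-4828-46d4-a381-7580f3b55416)", grpc_status:9, '
    'created_time:"2023-07-11T15:26:08.9729+02:00"}'
)


def summarize_grpc_error(debug_error_string):
    """Hypothetical helper: extract status code, cluster ID and request ID.

    The patterns are guesses fitted to the single message shown in this post.
    """
    status = re.search(r"grpc_status:(\d+)", debug_error_string)
    cluster = re.search(r"cluster (\S+) is not", debug_error_string)
    request = re.search(r"requestId=([0-9a-f-]+)", debug_error_string)
    code = int(status.group(1)) if status else None
    return {
        "grpc_status": code,
        "grpc_status_name": GRPC_STATUS_NAMES.get(code, "NOT_IN_TABLE"),
        "cluster_id": cluster.group(1) if cluster else None,
        "request_id": request.group(1) if request else None,
    }


info = summarize_grpc_error(SAMPLE)
print(info)
```

The extracted cluster ID is the one the server actually rejected, so it can be compared with the ID of the cluster I believe I am connecting to.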

The cluster is Shared, and I tried several cluster configurations, but it still does not work. The cluster runtime version is 13.2.

Also, I use:

- Python 3.10

- openjdk version "1.8.0_292"

- Azure Databricks

Has anyone had a similar issue with the new Databricks Connect?

Thanks for your help!

I tried the following code:

    from databricks.connect import DatabricksSession
    from pyspark.sql.types import *
    from delta.tables import DeltaTable
    from datetime import date

    if __name__ == "__main__":
        spark = DatabricksSession.builder.getOrCreate()

        # Create a Spark DataFrame consisting of high and low temperatures
        # by airport code and date.
        schema = StructType([
            StructField('AirportCode', StringType(), False),
            StructField('Date', DateType(), False),
            StructField('TempHighF', IntegerType(), False),
            StructField('TempLowF', IntegerType(), False)
        ])

        data = [
            [ 'BLI', date(2021, 4, 3), 52, 43],
            [ 'BLI', date(2021, 4, 2), 50, 38],
            [ 'BLI', date(2021, 4, 1), 52, 41],
            [ 'PDX', date(2021, 4, 3), 64, 45],
            [ 'PDX', date(2021, 4, 2), 61, 41],
            [ 'PDX', date(2021, 4, 1), 66, 39],
            [ 'SEA', date(2021, 4, 3), 57, 43],
            [ 'SEA', date(2021, 4, 2), 54, 39],
            [ 'SEA', date(2021, 4, 1), 56, 41]
        ]

        temps = spark.createDataFrame(data, schema)

        print(temps)

I expect the DataFrame to be displayed in my local terminal, with the Spark execution happening remotely.
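To rule out that the session silently picks up a different cluster from a default configuration, the connection could also be made explicit. This is only a sketch under assumptions: the helper names `connection_settings` and `make_session` are hypothetical, the values are read from the `DATABRICKS_HOST`, `DATABRICKS_TOKEN` and `DATABRICKS_CLUSTER_ID` environment variables, and `make_session` needs databricks-connect installed and a reachable workspace, so it is not called here.

```python
import os


def connection_settings(env=None):
    """Hypothetical helper: collect explicit connection settings.

    Reads DATABRICKS_HOST, DATABRICKS_TOKEN and DATABRICKS_CLUSTER_ID from
    the given mapping (defaults to os.environ) and fails loudly if any is
    missing, so the targeted cluster is never implicit.
    """
    env = os.environ if env is None else env
    names = ("DATABRICKS_HOST", "DATABRICKS_TOKEN", "DATABRICKS_CLUSTER_ID")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    return {
        "host": env["DATABRICKS_HOST"],
        "token": env["DATABRICKS_TOKEN"],
        "cluster_id": env["DATABRICKS_CLUSTER_ID"],
    }


def make_session(settings):
    """Build a session against an explicitly named cluster.

    Imported lazily so this file can be loaded without databricks-connect.
    """
    from databricks.connect import DatabricksSession

    return DatabricksSession.builder.remote(
        host=settings["host"],
        token=settings["token"],
        cluster_id=settings["cluster_id"],
    ).getOrCreate()


# Usage (requires a real workspace, so it is left commented out):
# spark = make_session(connection_settings())
# spark.range(3).show()
```

If the error persists with the cluster ID passed explicitly, the problem is more likely the cluster's access mode than the client configuration.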