My understanding at this point in time is that probabilistic programming is similar to Bayesian networks, just translated into programming language for creation of automated inference models?

TL;DR: That is correct. A probabilistic program can be viewed as equivalent to a Bayesian network, but is expressed in a much richer language. Probabilistic programming as a field proposes such representations, as well as algorithms that take advantage of those representations, because sometimes a richer representation makes the problem easier.

TL;DR (continued): also note that "probabilistic machine learning" is a much more general concept than Bayesian networks (graphical models), though. It refers to any machine learning method that uses probabilities. Some examples of probabilistic machine learning that are not Bayesian networks (graphical models) are: probabilistic classifiers such as probabilistic decision trees or a neural network with a softmax last layer (outputting a class probability), Gaussian processes (which can be understood in terms of graphical models but are often done in specialized ways), Bayesian neural networks (which learn distributions on the neural net weights rather than point values for them).

Anyway, to understand more about the relationships between probabilistic programming and Bayesian networks, please continue reading.

Consider for example a probabilistic program that models a disease that is more likely to afflict men:

N = 1000000;

for i = 1:N {

male[i] ~ Bernoulli(0.5);

disease[i] ~ if male[i] then Bernoulli(0.8) else Bernoulli(0.3)

}

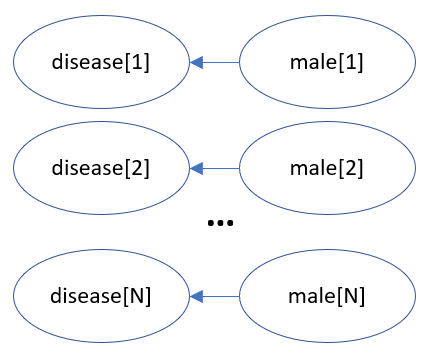

This probabilistic program is equivalent to the following Bayesian network accompanied by the appropriate conditional probability tables:

![Bayesian network equivalent to probabilistic program]()

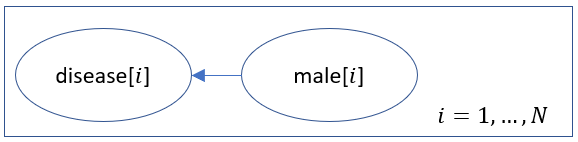

For highly repetitive networks such as this, authors often use plate notation to make their depiction more succinct:

![enter image description here]()

However, plate notation is a device for human-readable publications, not a formal language in the same sense that a programming language is. The advantage of a formal language is that it can be automatically processed by an algorithm, instead of just understood by humans and manipulated manually as it happens with plate notation. Also, for more complex models, plate notation could become harder to understand and maintain. Finally, a programming language brings other benefits, such as primitive operations that make it easier to specify conditional probabilities succinctly.

So, is it just a matter of having a convenient representation? No, because a more abstract representation contains more high-level information that can be used to improve inference performance.

Suppose that we want to compute the probability distribution on the number of people among N individuals with the disease. A straightforward and generic Bayesian network algorithm would have to consider the large number of 2^N combinations of assignments to the disease variables in order to compute that answer.

The probabilistic program representation, however, explicitly indicates that the conditional probabilities for disease[i] and male[i] are identical for all i. An inference algorithm can exploit that to compute the marginal probability of disease[i], which is identical for all i, use the fact that the number of diseased people will therefore be a binomial distribution B(N, P(disease[i])) and provide that as the desired answer, in time constant in N. It would also be able to provide an explanation of this conclusion that would be more understandable and insightful to a user.

One could argue that this comparison is unfair because a knowledgeable user would not pose the query as defined for the explicit O(N)-sized Bayesian network, but instead simplify the problem in advance by exploiting its simple structure. However, the user may not be knowledgeable enough for such a simplification, particularly for more complex cases, or may not have the time to do it, or may make a mistake, or may not know in advance what the model will be so she cannot simplify it manually like that. Probabilistic programming offers the possibility that such simplification be made automatically.

To be fair, most current probabilistic programming tools (such as JAGS and Stan) do not perform this more sophisticated mathematical reasoning (often called lifted probabilistic inference) and instead simply perform Markov Chain Monte Carlo (MCMC) sampling over the Bayesian network equivalent to the probabilistic program (but often without having to build the entire network in advance, which is also another possible gain). In any case, this convenience already more than justifies their use.